A group of British lawmakers raised concerns on Tuesday about whether the country’s financial regulators are doing enough to address the potential harms of artificial intelligence (AI) to consumers and market stability. They urged the Financial Conduct Authority (FCA) and the Bank of England to move away from a “wait and see” approach as the technology becomes more widespread.

The Treasury Committee, in a report on AI in financial services, recommended that the FCA introduce AI-specific stress tests to prepare financial firms for market shocks arising from automated systems. The committee also called on the FCA to publish guidance by the end of 2026 setting out how consumer protection rules apply to AI and how well senior managers must understand the systems they oversee.

Committee chair Meg Hillier voiced her concerns, stating, “Based on the evidence I’ve seen, I do not feel confident that our financial system is prepared if there was a major AI-related incident and that is worrying.”

The report highlights the risks of rapid AI adoption in the financial sector, particularly as banks race to deploy agentic AI, meaning systems that can make decisions and take actions autonomously. The FCA told Reuters late last year that about three-quarters of British financial firms already use AI for essential functions, including processing insurance claims and conducting credit assessments.

While recognizing the benefits of AI, the report cautioned about the “significant risks” involved, which include a lack of transparency in credit decisions, the potential exclusion of vulnerable consumers through algorithmic tailoring, increased fraud, and the spread of unregulated financial advice via AI chatbots.

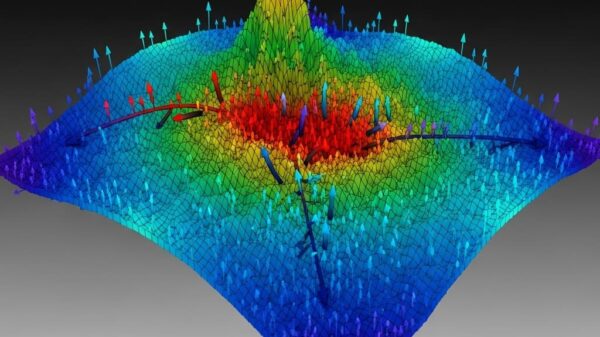

Experts who gave evidence to the committee emphasized the dangers to financial stability of over-reliance on a small number of U.S. tech giants for AI and cloud services. Some noted that AI-driven trading systems could amplify herding behavior in markets, raising the risk of a financial crisis.

A spokesperson for the FCA said the regulator would review the committee’s report. The FCA has previously indicated skepticism toward AI-specific rules, citing the rapid pace of technological change.

A spokesperson for the Bank of England noted that the central bank is actively assessing AI-related risks and taking steps to strengthen the financial system’s resilience. The spokesperson added that the Bank would consider the committee’s recommendations and provide a response in due course.

Hillier remarked on the influence of increasingly sophisticated forms of generative AI on financial decision-making, warning, “If something has gone wrong in the system, that could have a very big impact on the consumer.”

In a related development, Britain’s finance ministry appointed Harriet Rees, Chief Information Officer at Starling Bank, and Rohit Dhawan from Lloyds Banking Group to guide the adoption of AI within financial services.

As AI use expands across the financial sector, calls for proactive regulation are growing more urgent. With lawmakers pressing for concrete guidance and stress-testing frameworks, the industry must weigh the innovations AI brings against the risks it poses to consumers and market stability.
