A recent report from the Massachusetts Institute of Technology (MIT) paints a troubling picture of enterprise artificial intelligence (AI): 95% of organizations report no return on their investments in generative AI (GenAI). The findings draw on an analysis of more than 300 AI deployments and interviews with 52 organizations. They have prompted urgent boardroom discussions about whether AI projects can deliver value at all.

Enterprises have invested an estimated $30 billion to $40 billion in GenAI, yet the MIT report suggests that most are struggling to turn that spending into meaningful business outcomes. Large enterprises lead in the number of AI pilot programs, committing significant budgets and building large teams. However, they also have the lowest rate of converting pilots into scaled deployments. Mid-market firms fare better: their top performers move from pilot to full implementation in about 90 days on average.

This pattern mirrors the cybersecurity industry, where spending keeps rising but so does the frequency of attacks. The cybersecurity market is projected to approach half a trillion dollars by 2025, yet the benefits of AI in this field remain elusive. Industry experts trace the problem to an overreliance on technology without matching investment in the foundational capabilities needed to manage it and adapt it.

As debate intensifies over how to make AI projects pay off, concern is also growing about the cybersecurity risks posed by abandoned AI initiatives. AI adoption has expanded organizations' digital footprint and, with it, the attack surface available to adversaries. Yet fewer than 1% of organizations have adopted microsegmentation, which divides a network into small, isolated zones so that a single compromise cannot spread freely. Wider adoption would improve their ability to contain and withstand cyberattacks.

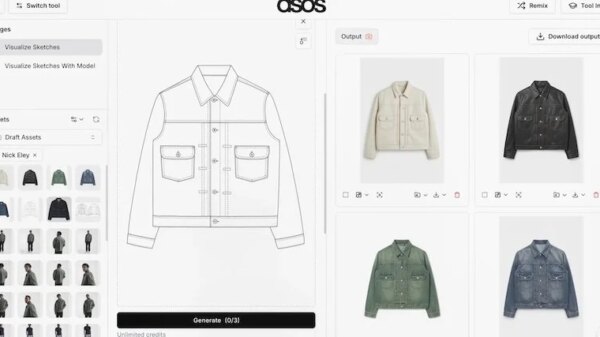

The MIT report stresses that enthusiasm for GenAI has not translated into transformative change in day-to-day operations. “Most organizations fall on the wrong side of the GenAI Divide: adoption is high, but disruption is low,” the report states. Generic tools such as ChatGPT are widely used. Tailored solutions, however, often stall because of integration complexity and poor alignment with existing workflows.

AI systems differ significantly from traditional IT systems. They are data-intensive, need access to multiple sensitive datasets, and span many platforms and environments. In sectors that depend on digital industrial systems, the challenges multiply. Many organizations there run legacy machinery that makes data hard to aggregate, which leaves AI models with inadequate training sets. These environments also put safety and reliability first. Even a 95% accuracy rate, meaning roughly one wrong output in every twenty, is therefore unacceptable.

Many AI projects are designed around outdated security paradigms that assume the internal network can be trusted. When business confidence wanes, initiatives are often halted abruptly. Their remnants, written off as unmanageable because of persistent anomalies, can nonetheless remain in place. These leftovers create vulnerabilities that attackers can exploit through AI-specific attacks such as prompt injection and model inversion, as well as other sophisticated techniques.

Unmanaged or abandoned AI systems are risky for two reasons. First, they leave behind threats that are never contained. Second, the service accounts and API keys they relied on often persist: dormant, but still valid and accessible to attackers. The danger grows when training datasets and other artifacts contain sensitive data that was never classified or encrypted.
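The dormant-credential problem lends itself to a simple periodic audit. The Python sketch below is illustrative only. The `Credential` record, its fields, and the 90-day idle threshold are hypothetical assumptions, not part of any specific cloud provider's or identity platform's API. The sketch flags credentials whose owning project is no longer active, that have never been used, or that have sat idle past the threshold.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class Credential:
    """Hypothetical inventory record for a service account or API key."""
    name: str
    project: str
    last_used: Optional[datetime]  # None means the credential was never used
    project_active: bool           # False once the owning AI project is halted


def find_dormant(creds: List[Credential], now: datetime,
                 max_idle: timedelta = timedelta(days=90)) -> List[str]:
    """Return names of credentials that should be reviewed or revoked.

    A credential is flagged if its owning project is no longer active,
    if it has never been used, or if it has been idle longer than max_idle.
    The 90-day default is an assumed policy value, not a standard.
    """
    flagged = []
    for c in creds:
        if not c.project_active:
            flagged.append(c.name)  # leftover from a halted initiative
        elif c.last_used is None or now - c.last_used > max_idle:
            flagged.append(c.name)  # never used, or dormant too long
    return flagged


if __name__ == "__main__":
    now = datetime(2025, 11, 1, tzinfo=timezone.utc)
    # Hypothetical inventory entries for illustration only.
    inventory = [
        Credential("rag-pilot-svc", "rag-pilot", now - timedelta(days=200), True),
        Credential("chatbot-key", "chatbot", now - timedelta(days=3), True),
        Credential("forecast-svc", "forecast", now - timedelta(days=1), False),
        Credential("eval-key", "eval", None, True),
    ]
    print(find_dormant(inventory, now))
    # → ['rag-pilot-svc', 'forecast-svc', 'eval-key']
```

In practice the inventory would likely come from an identity provider or cloud IAM export. Flagged entries are best treated as candidates for review and revocation rather than for automatic deletion.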

Stalled AI initiatives also erode vendor oversight, which raises the likelihood of shadow AI and supply chain attacks. These risks are especially hard to detect and can be devastating once a breach occurs.

Recent research from Anthropic underscores the urgency of closing these gaps. It showed that current AI models can orchestrate multistage attacks using readily available open-source tools. This suggests that the barriers to AI-enabled cyber operations are falling quickly. Organizations must therefore invest not only in deploying AI but also in robust breach readiness.

To reduce these risks, experts recommend that organizations strengthen their governance frameworks and systematically decommission unproductive AI projects. The conventional wisdom that security controls can be added later is misleading. AI systems inherently amplify risk because they sit at the intersection of data, automation, and trust.

As more organizations invest in AI, they need to adopt a breach-readiness mindset. By assuming compromise and designing systems for containment, organizations can significantly reduce their exposure. Prioritizing foundational measures such as microsegmentation will be vital to ensuring that AI initiatives strengthen security rather than weaken it.
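The containment idea behind microsegmentation can be illustrated as a default-deny policy, in which a network flow is permitted only if an explicit rule allows it. The following Python sketch is a simplified model, not a real product's policy engine. The workload names, segment labels, and port number are hypothetical. The sketch shows how a leftover pilot machine that no rule references is cut off from sensitive data by default.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    """One explicit allow rule: traffic from src_segment to dst_segment on port."""
    src_segment: str
    dst_segment: str
    port: int


# Hypothetical workload-to-segment labels; real platforms typically use tags or labels.
SEGMENTS = {
    "inference-api": "ai-prod",
    "vector-db": "ai-data",
    "old-pilot-vm": "ai-pilot",  # abandoned project: no allow rule references it
    "crm-db": "finance-data",
}

# Default deny: only flows listed here are permitted (hypothetical policy).
ALLOW = {
    Rule("ai-prod", "ai-data", 5432),
}


def is_allowed(src: str, dst: str, port: int) -> bool:
    """Return True only if an explicit rule permits the flow.

    Workloads without a segment label are denied outright.
    """
    src_seg = SEGMENTS.get(src)
    dst_seg = SEGMENTS.get(dst)
    if src_seg is None or dst_seg is None:
        return False
    return Rule(src_seg, dst_seg, port) in ALLOW


if __name__ == "__main__":
    print(is_allowed("inference-api", "vector-db", 5432))  # True: explicitly allowed
    print(is_allowed("old-pilot-vm", "crm-db", 5432))     # False: no rule, denied
    print(is_allowed("inference-api", "crm-db", 5432))    # False: wrong destination
```

The design choice that matters is the default: because nothing is allowed unless listed, decommissioning a project only requires deleting its rules. A compromised leftover workload then has no path to sensitive systems.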
