The House Homeland Security Committee has formally requested testimony from Anthropic CEO Dario Amodei about a cyberattack campaign that allegedly used the company's Claude AI and has been attributed to a Chinese state-sponsored group. The hearing is scheduled for December 17, Axios reported. If Amodei appears, it would be the first time an Anthropic executive has testified before Congress.

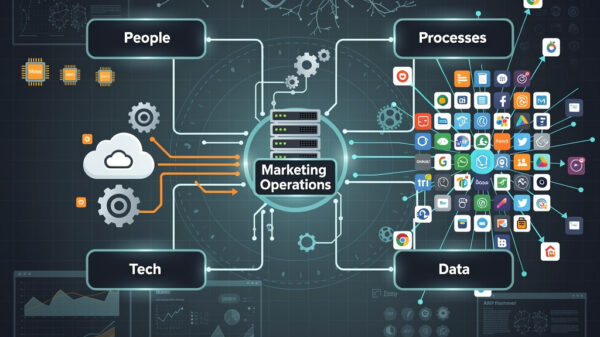

House Homeland Security Chair Andrew Garbarino, a Republican from New York, also asked Google Cloud CEO Thomas Kurian and Quantum Xchange CEO Eddy Zervigon to appear before the committee next month. The request comes amid growing concern over the intersection of artificial intelligence and cybersecurity, particularly after Anthropic's recent disclosure of the campaign.

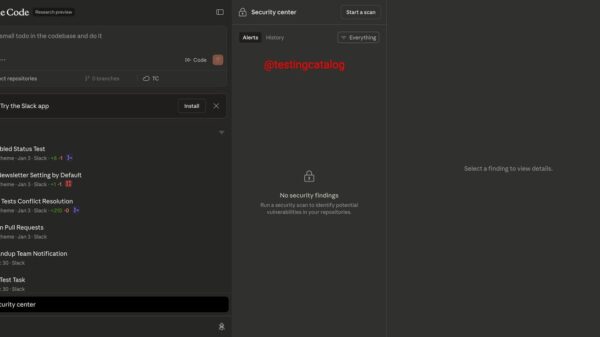

In a report released on November 13, Anthropic said it had detected suspicious activity in mid-September. After investigating, the company concluded that the activity was a "highly sophisticated espionage campaign" that used Claude's capabilities "to an unprecedented degree" to carry out cyberattacks. According to the report, the threat actor, assessed to be a Chinese state-sponsored group, manipulated the Claude Code tool to target roughly thirty organizations worldwide and succeeded in a small number of cases. The targets included large technology companies, financial institutions, chemical manufacturers, and government agencies. Anthropic says this is the first documented case of a large-scale cyberattack executed with minimal human intervention.

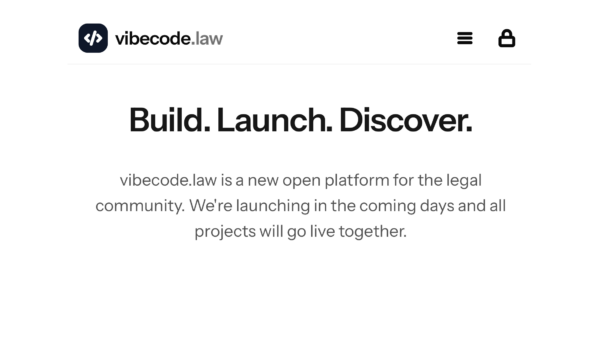

Anthropic characterized the incident as an escalation of "vibe hacking," a play on "vibe coding," the term for people with little or no programming experience using generative AI tools to write code. The "vibe" label has since spread beyond software: Uber founder Travis Kalanick drew attention when he described his chatbot-assisted explorations as "vibe physics," suggesting he was nearing scientific breakthroughs despite the well-known limitations of large language models.

The incident raises difficult questions about how AI tools should be built and released. In its report, Anthropic addressed concerns that its tools could be misused for cyberattacks, arguing that the same capabilities that allow Claude to be weaponized also make it valuable for cyber defense. When sophisticated cyberattacks inevitably occur, the report stated, the company's goal is for Claude, "into which we've built strong safeguards," to "assist cybersecurity professionals to detect, disrupt, and prepare for future versions of the attack." Anthropic's Threat Intelligence team used Claude extensively to analyze the large volume of data generated during its investigation of the attacks.

Chairman Garbarino emphasized the seriousness of the situation: "For the first time, we are seeing a foreign adversary use a commercial AI system to carry out nearly an entire cyber operation with minimal human involvement. That should concern every federal agency and every sector of critical infrastructure." His statement reflects the urgency with which lawmakers are approaching AI-enabled threats.

As scrutiny of AI misuse grows, the stakes for national security and for the companies building these systems are rising. An Anthropic spokesperson declined to comment on the upcoming hearing, leaving open questions about the company's role in AI development and cybersecurity going forward.

These developments highlight the need for regulatory frameworks governing AI applications, particularly in cybersecurity. As legislators prepare to question industry leaders, the debate over responsible AI use is expected to intensify, underscoring the double-edged nature of these technologies.

See also Israeli Researchers Uncover Critical AI Browser Flaw Affecting Major Tools Like Gemini and Copilot

Israeli Researchers Uncover Critical AI Browser Flaw Affecting Major Tools Like Gemini and Copilot Congress Summons Anthropic CEO Amid First AI-Orchestrated Cyberattack Linked to China

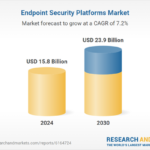

Congress Summons Anthropic CEO Amid First AI-Orchestrated Cyberattack Linked to China Endpoint Security Market to Reach $23.9B by 2030 with 7.2% CAGR Amid Rising Cyber Threats

Endpoint Security Market to Reach $23.9B by 2030 with 7.2% CAGR Amid Rising Cyber Threats Trend Micro Launches AI Security Package to Mitigate Risks in AI Application Lifecycle

Trend Micro Launches AI Security Package to Mitigate Risks in AI Application Lifecycle Borderless CS Launches AI-Driven SOC and MDR Services for Enhanced Cyber Defence

Borderless CS Launches AI-Driven SOC and MDR Services for Enhanced Cyber Defence