SOUTH CHARLESTON, W.Va. – During a recent state Higher Education Policy Commission meeting, Joel Farkas, an assistant biology professor at West Virginia University at Parkersburg, addressed the growing influence of artificial intelligence (AI) on students and faculty. Farkas, who also chairs the state Advisory Council of Faculty, presented a report detailing how students are increasingly integrating AI into their academic work.

Farkas noted that while early AI models primarily served to quickly gather facts from the internet, advancements have enabled these tools to execute more complex tasks, including reasoning and problem-solving in subjects such as mathematics and genetics. “It used to make mistakes in problems, and I could predictably beat ChatGPT, and then every year, systematically, everything I beat it on it automatically figures out how to beat me back,” he said, highlighting the evolving capabilities of AI technology.

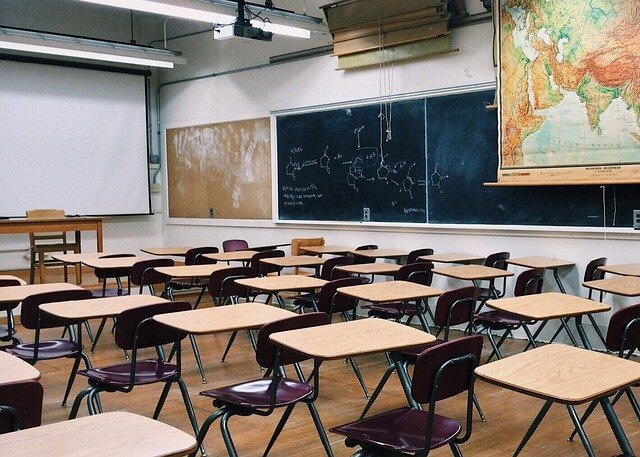

In his discussions with students, Farkas emphasizes the appropriate use of AI, encouraging them to use these tools to generate study resources and to engage in dialogue for immediate feedback. However, he expressed concern over ongoing misuse among some students. “It’s at the point now where you can’t trust the authenticity of anything that students do unless you watch them do it in the classroom without a computer,” he remarked. This has prompted some faculty members to return to traditional methods, using “literally pencil and paper” to maintain academic integrity, because work completed outside the classroom can no longer be trusted.

Farkas cited a specific case involving a math professor at WVU Parkersburg, who altered her teaching strategy as students began relying on AI to complete homework. He observed a notable shift in performance metrics over the past three years, with some students achieving perfect scores on homework while struggling significantly on tests, averaging only 40%. The professor has since adopted a new model that requires students to complete readings before class and work collaboratively on problems during instructional time.

In light of these developments, Farkas believes educators will need to reassess their teaching methodologies. “I think a lot of us are probably going to rethink our teaching in that kind of way where I think we’re going to have to go back to that more or less flipped model where every assessment is done in class,” he stated. He further suggested that traditional grading schemes, which have been in place for decades, may need revisions to account for the evolving educational landscape driven by AI.

Farkas highlighted that online classes face the most significant challenges, as students can easily utilize AI to complete their assignments. He proposed that online assessments might require stricter measures such as proctoring or time constraints to curb the likelihood of students seeking external help during tests.

He also raised concerns regarding credit transferability, suggesting that institutions may need to implement restrictions. He has observed students intentionally avoiding challenging courses by taking them online at other schools and transferring the credits back to their home institution. “I’ve seen a lot of instances where somebody has one class they specifically want to avoid, and so they take that one class online somewhere else and transfer back,” he explained, citing WVU Parkersburg students who take demanding courses such as anatomy and macroeconomics elsewhere.

Farkas advocates for the development of formal AI policies within academic institutions. While WVU Parkersburg allows departments to create their own AI guidelines, he noted that not all have taken action. “Most people that I’ve talked to at my school don’t have any kind of statement on AI; you just kind of informally talk in the classroom about what you should do and what you shouldn’t,” he said. He expressed a desire for a more structured approach, calling for “top-down guidance” to establish clearer standards on AI usage.

As educational systems adapt to the rapid advancement of AI technologies, the need for comprehensive strategies and policies will be vital in maintaining academic integrity and ensuring that learning outcomes are met effectively. The ongoing dialogue among educators like Farkas serves as a crucial step in navigating this transformative landscape.
