“Enterprises are piloting AI data analysts to accelerate revenue insights, but finance leaders will not rely on outputs that skip controls they already enforce for statutory reporting,” writes Sarah Dunsby in London Loves Business. Poor data quality compounds the problem: it costs organizations an average of $12.9 million a year, and 88% of spreadsheets contain errors that can distort revenue decisions. The median monthly close takes over six days, and at less efficient organizations it can run to ten days or more, delaying needed adjustments to go-to-market strategy.

Revenue data is often fragmented across billing platforms, CRM systems, and finance applications, each of which defines core entities such as customers and contracts differently. Without robust reconciliation rules and lineage tracking, AI-generated queries can return results that are technically correct but financially wrong. According to the same analysis, most data leaders reported at least one data quality incident in the past year that disrupted stakeholders. In response, finance teams have fallen back on manual verification, which is reliable but slow.

This makes a unified, governed set of revenue metrics crucial. Terms such as bookings, billings, recognized revenue, net retention, and expansion are frequently confused, and teams calculate recurring revenue inconsistently, for example in how they derive Annual Recurring Revenue (ARR) and Monthly Recurring Revenue (MRR). An AI data analyst must therefore use the exact definitions finance relies on to close the books rather than improvising its own SQL.
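To illustrate what a single governed definition looks like in practice, the sketch below defines MRR, ARR, and net revenue retention once, in code, so every consumer (an AI analyst included) calls the same functions. It is a minimal sketch under stated assumptions: the `Subscription` record and its fields are hypothetical, ARR is taken as 12 x MRR, and net revenue retention is computed for the customers present at the start of a period, excluding new ones. A finance team's own definitions would replace these.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Subscription:
    """Hypothetical subscription record; field names are illustrative."""
    customer_id: str
    monthly_amount: Decimal  # contracted recurring amount per month
    active: bool


def mrr(subs: list[Subscription]) -> Decimal:
    """Monthly Recurring Revenue: sum of monthly amounts on active subscriptions."""
    return sum((s.monthly_amount for s in subs if s.active), Decimal("0"))


def arr(subs: list[Subscription]) -> Decimal:
    """Annual Recurring Revenue, assumed here to be 12 x MRR."""
    return mrr(subs) * 12


def net_revenue_retention(start: dict[str, Decimal], end: dict[str, Decimal]) -> Decimal:
    """Net revenue retention for the cohort present at the start of the period.

    `start` and `end` map customer_id -> MRR. Expansion, contraction, and churn
    of starting customers count; customers that appear only in `end` are ignored.
    """
    start_total = sum(start.values(), Decimal("0"))
    end_total = sum((end.get(cid, Decimal("0")) for cid in start), Decimal("0"))
    return end_total / start_total


# Single registry an AI analyst would call by name instead of writing its own SQL.
METRICS = {"mrr": mrr, "arr": arr}
```

With active subscriptions of 100 and 25 plus an inactive one of 50, `mrr` returns 125 and `arr` returns 1500; a cohort that starts at 125 and ends at 120 has a net revenue retention of 0.96.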

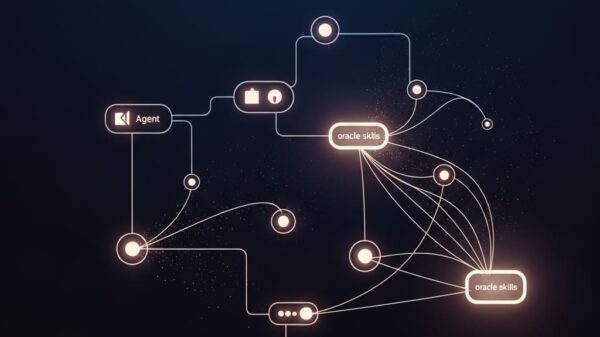

Architecture Demands Semantic Foundations

The foundation for effective AI data analysis is a data warehouse or lakehouse as the central hub, paired with a semantic layer that codifies revenue definitions as reusable metrics. This setup depends on standardized, deduplicated entities for customers, products, contracts, and usage. To protect data integrity, every revenue-related transformation should be covered by automated tests that check schema, referential integrity, and materiality thresholds, backed by active monitoring for data quality issues.
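The three kinds of automated test named above can be sketched in plain Python. Data-testing tools usually provide equivalent checks out of the box; this version only makes the logic visible. The table layout, column names, and the `run_revenue_checks` helper are hypothetical assumptions for illustration.

```python
from decimal import Decimal

# Hypothetical required columns for an invoices table.
REQUIRED_INVOICE_COLUMNS = {"invoice_id", "customer_id", "amount"}


def check_schema(rows: list[dict], required: set[str]) -> list[str]:
    """Report rows that are missing any required column."""
    return [
        f"row {i} missing {sorted(required - set(row))}"
        for i, row in enumerate(rows)
        if not required <= set(row)
    ]


def check_referential_integrity(invoices: list[dict], customers: list[dict]) -> list[str]:
    """Report invoices whose customer_id has no matching customer record."""
    known = {c["customer_id"] for c in customers}
    return [
        f"invoice {inv['invoice_id']} references unknown customer {inv['customer_id']}"
        for inv in invoices
        if inv["customer_id"] not in known
    ]


def check_materiality(ledger_total: Decimal, source_total: Decimal, threshold: Decimal) -> list[str]:
    """Report a failure when ledger and source totals differ by more than the threshold."""
    diff = abs(ledger_total - source_total)
    return [f"difference {diff} exceeds threshold {threshold}"] if diff > threshold else []


def run_revenue_checks(invoices, customers, ledger_total, threshold) -> list[str]:
    """Run all checks; an empty list means the transformation may be published."""
    failures = check_schema(invoices, REQUIRED_INVOICE_COLUMNS)
    if failures:
        return failures  # later checks need the required columns to exist
    source_total = sum((inv["amount"] for inv in invoices), Decimal("0"))
    return (
        check_referential_integrity(invoices, customers)
        + check_materiality(ledger_total, source_total, threshold)
    )
```

In a pipeline, a non-empty result would block the transformation from publishing and raise an alert for the monitoring described above.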

Placing the AI data analyst on top of this infrastructure lets it translate questions into metric calls through the semantic layer instead of writing unrestricted SQL. A policy engine should enforce role-based and attribute-based access to sensitive data elements, including pricing and personal identifiers. Every interaction must also be logged, with lineage traced back to source tables for auditing and refinement.
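A minimal sketch of that request path follows, assuming a hypothetical metric catalog, two illustrative access rules (a role requirement for sensitive pricing metrics and a region attribute), and an in-memory audit log. Real deployments would use a dedicated policy engine and durable log storage; every name here is an assumption.

```python
import json
from dataclasses import dataclass, field

# Hypothetical metric catalog: source tables (for lineage) and a sensitivity flag.
METRIC_CATALOG = {
    "recognized_revenue": {"sources": ["gl.revenue_entries"], "sensitive": False},
    "discount_by_deal": {"sources": ["crm.opportunities", "billing.price_book"], "sensitive": True},
}


@dataclass
class User:
    name: str
    roles: set[str]
    region: str  # attribute used for attribute-based access control


@dataclass
class AuditLog:
    entries: list[str] = field(default_factory=list)

    def record(self, **event) -> None:
        self.entries.append(json.dumps(event, sort_keys=True))


def is_allowed(user: User, metric: str, region: str) -> bool:
    # Role-based rule: sensitive pricing metrics need the pricing_analyst role.
    if METRIC_CATALOG[metric]["sensitive"] and "pricing_analyst" not in user.roles:
        return False
    # Attribute-based rule: users see their own region unless they hold global_finance.
    return region == user.region or "global_finance" in user.roles


def query_metric(user: User, metric: str, region: str, log: AuditLog) -> dict:
    if metric not in METRIC_CATALOG:
        raise KeyError(f"unknown metric {metric!r}; ad hoc SQL is not permitted")
    allowed = is_allowed(user, metric, region)
    # Log every request, allowed or not, with lineage back to source tables.
    log.record(user=user.name, metric=metric, region=region,
               allowed=allowed, sources=METRIC_CATALOG[metric]["sources"])
    if not allowed:
        raise PermissionError(f"{user.name} may not read {metric} for {region}")
    # Placeholder: a real system would call the semantic layer here.
    return {"metric": metric, "region": region}
```

Logging denied requests as well as successful ones gives auditors a complete record of what the analyst was asked, not only what it answered.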

Embedded guardrails keep the system accurate and its costs under control. These include validators that block unapproved table joins and queries that exceed row limits, as well as per-workspace caps on compute and token spending. Cost management should be treated as a core requirement, on par with speed and precision.
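Both guardrails can be sketched in a few lines, assuming the analyst first produces a structured query plan that can be inspected before execution. The approved join pairs, the row ceiling, the `QueryPlan` shape, and the `WorkspaceBudget` class are hypothetical illustrations, not a particular product's API.

```python
from dataclasses import dataclass

# Hypothetical guardrail settings: approved join pairs and a row ceiling.
APPROVED_JOINS = {frozenset({"billing.invoices", "crm.accounts"})}
MAX_ROWS = 10_000


@dataclass
class QueryPlan:
    joins: list[tuple[str, str]]
    row_limit: int


def validate(plan: QueryPlan) -> list[str]:
    """Return reasons to reject the plan; an empty list means it may run."""
    errors = []
    for left, right in plan.joins:
        if frozenset({left, right}) not in APPROVED_JOINS:
            errors.append(f"join {left} <-> {right} is not approved")
    if plan.row_limit > MAX_ROWS:
        errors.append(f"row limit {plan.row_limit} exceeds {MAX_ROWS}")
    return errors


class WorkspaceBudget:
    """Per-workspace cap on combined compute and token spend (in USD)."""

    def __init__(self, cap_usd: float) -> None:
        self.cap_usd = cap_usd
        self.spent: dict[str, float] = {}

    def charge(self, workspace: str, cost_usd: float) -> bool:
        """Record the cost if it fits under the cap; otherwise reject it and record nothing."""
        current = self.spent.get(workspace, 0.0)
        if current + cost_usd > self.cap_usd:
            return False
        self.spent[workspace] = current + cost_usd
        return True
```

Checking the budget before a query runs, rather than reconciling spend afterwards, is what turns cost into an enforced constraint instead of a report.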

Security and privacy compliance are paramount for every form of AI analytics, dashboards included. Model training should draw only on trusted feature stores or embedding pipelines that strip out sensitive information. Organizations should also align data residency and retention practices with applicable regulations, since compliance failures can bring hefty fines across jurisdictions. Keeping models stateless, with conversation context stored securely outside the model, is vital for meeting corporate standards.
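As a rough illustration of an embedding pipeline that strips sensitive information, the sketch below replaces email addresses and phone-like numbers with placeholders before text is embedded. The two regular expressions are assumptions chosen for brevity; they miss many identifier formats and can over-match, so production pipelines typically rely on dedicated PII detection.

```python
import re

# Illustrative patterns only: they miss many PII formats and may over-match
# (the phone pattern also catches dates such as 2024-01-15).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each PII match with a [LABEL] placeholder before text is embedded."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `redact("Contact jane.doe@example.com or +44 20 7946 0958 about renewal")` yields `"Contact [EMAIL] or [PHONE] about renewal"`.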

Human oversight remains necessary, especially for high-stakes results tied to revenue recognition or board presentations. Outputs should be classified as either exploratory, suitable for sales and product teams with caveats, or production-grade, which requires rigorous metric validation and approval of any new query pattern. Only about half of AI projects move from pilot to production, which, as Retail Technology Innovation Hub notes, underscores the importance of uptime, lineage, and change controls that go beyond a basic chat interface.
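One way to encode the two output classes is a release gate: exploratory results pass with a caveat, while production results must use a validated metric and carry a named approver. The sketch below assumes a hypothetical `VALIDATED_METRICS` set and a simple string approver; real approval workflows would be richer.

```python
from enum import Enum
from typing import Optional


class Tier(Enum):
    EXPLORATORY = "exploratory"
    PRODUCTION = "production"


# Hypothetical list of metrics that finance has already validated.
VALIDATED_METRICS = {"recognized_revenue", "arr", "net_revenue_retention"}


def release(metric: str, tier: Tier, approved_by: Optional[str] = None) -> str:
    """Caveat exploratory output; gate production output on validation and sign-off."""
    if tier is Tier.EXPLORATORY:
        return f"{metric}: exploratory result, not for statutory or board reporting"
    if metric not in VALIDATED_METRICS:
        raise ValueError(f"{metric} has no validated definition; submit it for review")
    if not approved_by:
        raise PermissionError(f"{metric} needs finance approval before production use")
    return f"{metric}: production result, approved by {approved_by}"
```

The gate fails closed: an unvalidated metric or a missing approver raises an error rather than silently downgrading the result.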

To measure impact, organizations should track the time it takes to answer routine revenue queries and to complete month-end reconciliations. For instance, if closes average six days, a reasonable target is to cut one day within two quarters by automating the matching of billing and CRM records. Tracking the decline in incidents caused by stale or anomalous data also shows whether the changes are working.
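Cutting one day from a six-day close is a reduction of roughly 17% (1/6). The sketch below shows the kind of automated billing-to-CRM matching that could contribute to it, under simplifying assumptions: records are hypothetical dictionaries keyed by `contract_id`, each contract has at most one invoice and one booking, and amounts match if they agree within a small tolerance.

```python
from decimal import Decimal


def match_billing_to_crm(invoices, bookings, tolerance=Decimal("0.01")):
    """Match invoices to CRM bookings on contract_id and compare amounts.

    Simplifying assumption: at most one invoice and one booking per contract.
    Returns (matched, mismatched, unmatched_invoices, unbilled_bookings).
    """
    bookings_by_contract = {b["contract_id"]: b for b in bookings}
    matched, mismatched, unmatched_invoices = [], [], []
    for inv in invoices:
        booking = bookings_by_contract.get(inv["contract_id"])
        if booking is None:
            unmatched_invoices.append(inv["invoice_id"])  # billed but not in CRM
        elif abs(inv["amount"] - booking["amount"]) <= tolerance:
            matched.append(inv["invoice_id"])
        else:
            mismatched.append(inv["invoice_id"])  # amounts disagree; needs review
    billed = {inv["contract_id"] for inv in invoices}
    unbilled_bookings = [c for c in bookings_by_contract if c not in billed]
    return matched, mismatched, unmatched_invoices, unbilled_bookings
```

Only the mismatched and unmatched items would go to a reviewer, which is where any time savings in the close would come from.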

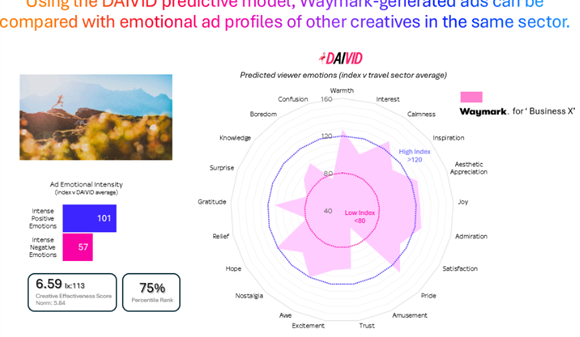

Benchmarking AI accuracy against established baselines for priority queries lets teams set acceptance thresholds and flag defects whenever accuracy falls below them. In a related example of machine learning in finance, Deloitte’s AI and data operations helped a global hospitality firm deploy machine learning within its finance function, reducing revenue leakage and speeding up executive decision-making.
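A benchmark of this kind can be sketched as a comparison between the AI analyst's answers and trusted baseline answers for a fixed set of priority questions. The relative tolerance of 0.5% and the 95% acceptance threshold below are illustrative assumptions, not recommended values.

```python
from decimal import Decimal


def benchmark(ai_answers: dict[str, Decimal], baseline: dict[str, Decimal],
              rel_tolerance: Decimal = Decimal("0.005"),
              acceptance: float = 0.95):
    """Compare AI answers with baseline answers for priority questions.

    An answer counts as correct if it is within rel_tolerance of the baseline;
    a missing answer counts as wrong.
    Returns (accuracy, failing_question_ids, meets_acceptance_threshold).
    """
    if not baseline:
        raise ValueError("baseline must contain at least one question")
    failures = []
    for qid, expected in baseline.items():
        got = ai_answers.get(qid)
        if got is None or abs(got - expected) > abs(expected) * rel_tolerance:
            failures.append(qid)
    accuracy = 1 - len(failures) / len(baseline)
    return accuracy, failures, accuracy >= acceptance
```

Rerunning the same benchmark after every metric or model change turns "accuracy" into a regression test rather than a one-off impression.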

Cube’s AI Analyst, built specifically for Financial Planning and Analysis (FP&A), connects to multiple data sources and delivers instant answers and reports through platforms such as Slack and Teams. Pilots of tools like this tend to succeed when tightly focused, letting organizations build confidence before a broader rollout.

A 90-day path to production trust lets organizations align AI capabilities with existing practices. In the first 30 days, identify ten priority revenue questions and codify their definitions in the semantic layer. In the next 30 days, connect the AI analyst to those definitions and roll out initial validations. In the final 30 days, activate governance controls and test results against legacy tools. Scale up only after accuracy and speed have improved consistently.

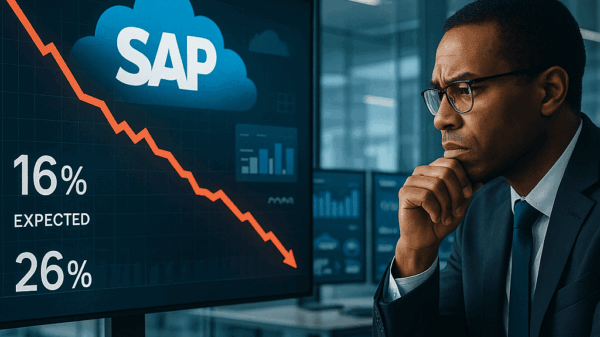

“AI can accelerate revenue analytics, but only if its outputs align with the same controls that protect your financial statements,” Dunsby concludes. As vendors such as BlackLine automate reconciliations for finance teams, leaders are increasingly looking to generative AI to cut ERP workloads by 20 to 40%, even though current pilots lag at about 15%. With AI billing agents also emerging as tools for forecasting revenue and churn, the landscape reflects an ongoing tension between technological innovation and established financial workflows.
