Apple Inc. is making significant strides in artificial intelligence, focusing on multimodal large language models (MLLMs) that integrate text and visual data. These advanced systems enable devices to understand and generate images in novel ways, potentially transforming interactions across its product range from smartphones to servers. Recent research from Apple’s machine learning teams highlights breakthroughs in image generation and comprehension, emphasizing the company’s commitment to a broad AI initiative dubbed Apple Intelligence.

The exploration into MLLMs is part of Apple’s broader strategy to enhance AI functionality. According to a report from AppleInsider, researchers are delving into how these models can manage tasks related to image generation, interpretation, and multi-turn web searches featuring cropped images. This effort builds on foundational models introduced in 2025, which support multilingual and multimodal datasets, laying the groundwork for future applications.

A pivotal aspect of this research is Apple’s development of techniques that improve the models’ capabilities to process and generate images seamlessly. For example, Apple’s teams have created methods allowing MLLMs to interpret complex visual scenes and generate corresponding outputs, such as new images derived from textual descriptions. The focus on hybrid vision tokenizers, evident in initiatives like MANZANO, integrates visual understanding with generation tasks, enhancing overall performance.

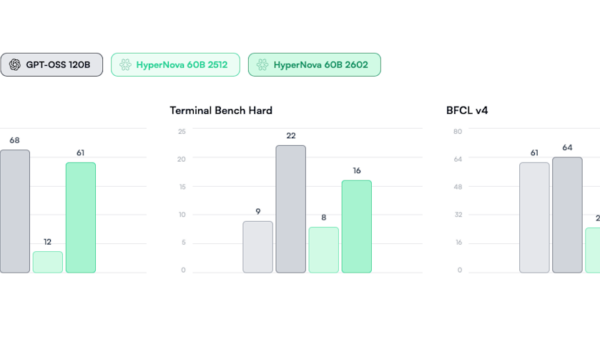

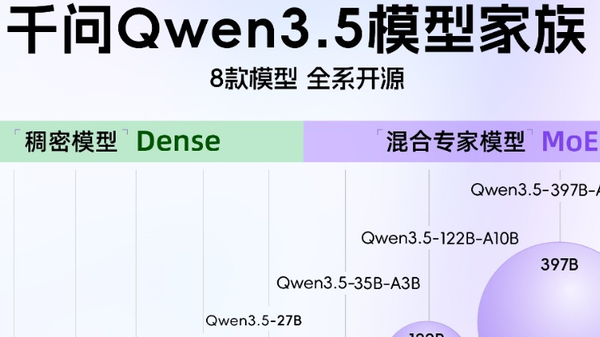

Apple’s commitment to responsible data sourcing is also noteworthy. The data used for training its models stems from a mix of web-crawled content, licensed corpora, and synthetic data. A recent technical report from Apple Machine Learning Research describes two foundation models: a 3B-parameter on-device version optimized for Apple silicon and a larger server-based model utilizing a Parallel-Track Mixture-of-Experts architecture. Both models have demonstrated competitive performance, matching or exceeding open-source alternatives in image-related tasks.

In practical applications, the ability to refine searches using cropped sections of images is particularly relevant. This feature enhances web searches, enabling a more intuitive querying process that mimics human visual processing. Apple’s pre-training strategies, including autoregressive methods, have been crucial in achieving these advancements, with earlier releases like AIM and MM1 paving the way for more sophisticated capabilities.
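To make the cropping step concrete, here is a minimal Python sketch of how a region of an image might be cut out before it is sent along with a follow-up query. It is an illustration only, not Apple's pipeline: the `Image` type, the `crop` function, and the `(left, top, right, bottom)` box convention are all assumptions made for this example.

```python
from typing import List, Tuple

# Assumption for illustration: a grayscale image stored as rows of pixel values.
Image = List[List[int]]


def crop(image: Image, box: Tuple[int, int, int, int]) -> Image:
    """Return the sub-image inside box = (left, top, right, bottom).

    The right and bottom edges are exclusive, and the box is clamped
    to the image bounds before slicing.
    """
    left, top, right, bottom = box
    height = len(image)
    width = len(image[0]) if height else 0

    # Clamp the requested region so it never reaches outside the image.
    left, right = max(0, left), min(width, right)
    top, bottom = max(0, top), min(height, bottom)
    if left >= right or top >= bottom:
        raise ValueError("crop region is empty")

    return [row[left:right] for row in image[top:bottom]]


# A tiny 3x4 "image" whose pixel value encodes its row and column.
image = [[r * 10 + c for c in range(4)] for r in range(3)]
region = crop(image, (1, 0, 3, 2))
```

In a multi-turn search, a region like `region` would stand in for the part of the picture the user actually cares about, so later queries can focus on it rather than on the whole frame.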

The models also handle text-to-image synthesis, producing high-quality outputs. The MANZANO model, for instance, merges vision understanding with generation while limiting the performance trade-off between the two tasks. This unified approach allows a single model to analyze an image’s content and create edited versions based on user prompts, broadening its utility across applications.

Efficiency remains a strong point of Apple’s systems. By leveraging quantization and KV-cache sharing, the on-device model runs effectively on hardware like iPhones and iPads, bringing advanced AI capabilities to everyday users without heavy reliance on cloud resources. Separately, the DeepMMSearch-R1 project equips MLLMs for multimodal web search, handling queries that involve both text and images over multiple turns, with the potential to change how users search for information online.
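For readers unfamiliar with the quantization mentioned above, the sketch below shows the general idea using plain symmetric 8-bit quantization in Python. This is a generic textbook scheme chosen for illustration, not the specific method Apple uses; the function names `quantize_int8` and `dequantize` are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Fall back to a scale of 1.0 when every weight is zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
```

Each weight is stored as a small integer plus one shared scale, which cuts memory use at the cost of a rounding error of at most half a scale step per weight. That trade is what makes larger models practical on phones and tablets.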

Human evaluations support the models’ capabilities. The server model is designed to run on Apple’s Private Cloud Compute, which is intended to preserve privacy while delivering reliable results. As noted in a paper available on arXiv, these models support multiple languages and tool calls, enhancing their versatility for a global user base. Safeguards such as content filtering are integrated into the system in line with Apple’s Responsible AI principles, supporting the safe deployment of multimodal capabilities.

In comparison to competitors, Apple’s MLLMs are distinguished by their efficiency and integration. While open-source vision-language models are becoming more common, Apple’s proprietary optimizations position it favorably in on-device performance, a crucial factor for privacy-conscious consumers. The integration of these models into everyday applications enhances user experiences across platforms, as highlighted in recent updates from Apple Machine Learning Research.

Challenges persist, however. The inherent unreliability of LLMs extends to multimodal variants, and while Apple’s post-training work addresses some of these concerns, ongoing refinement is essential. Looking ahead, datasets like Pico-Banana-400K, a text-guided image-editing dataset built from real photographs, may shape how future models are trained.

Emerging applications of these technologies signal potential advancements in fields such as healthcare imaging and autonomous vehicles, where multimodal understanding is critical. Apple’s emphasis on low-latency, high-accuracy models positions it well in these sectors. The integration of MLLMs into Apple’s ecosystem is set to amplify their impact, offering developers tools for guided generation and fine-tuning, thereby lowering barriers for custom AI applications.

As research progresses, innovative uses for these models are likely to emerge, including enhanced accessibility tools for visually impaired users and interactive educational aids. On the ethical side, Apple’s measures to address potential biases in image generation reflect attention to cultural sensitivity. The collaboration with Google for training models reflects a strategic decision aimed at scalability and integration, positioning Apple to compete in the evolving landscape of global AI adoption.

As Apple continues to refine its MLLMs, the fusion of modalities promises to create more intuitive human-machine interfaces. The company’s incremental yet impactful releases signal a dedication to innovation, with a vision that could redefine user interactions with technology in the future.
