Researchers at the Hong Kong University of Science and Technology (HKUST) and Kuaishou Technology have developed a method for improving camera control in AI-generated videos. The team, including Wenhang Ge, Guibao Shen, Jiawei Feng, Luozhou Wang, Hao Lu, and Xingye Tian, introduced an approach called CamPilot, which uses reward feedback learning to improve the alignment between the camera input and the generated video content. The work targets three weaknesses of existing methods: they often fail to assess video-camera alignment accurately, they are computationally intensive, and they disregard 3D geometric information.

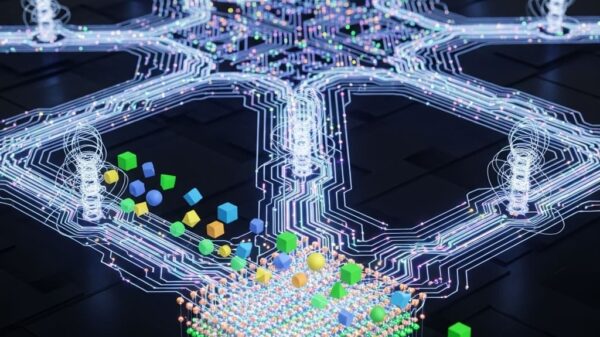

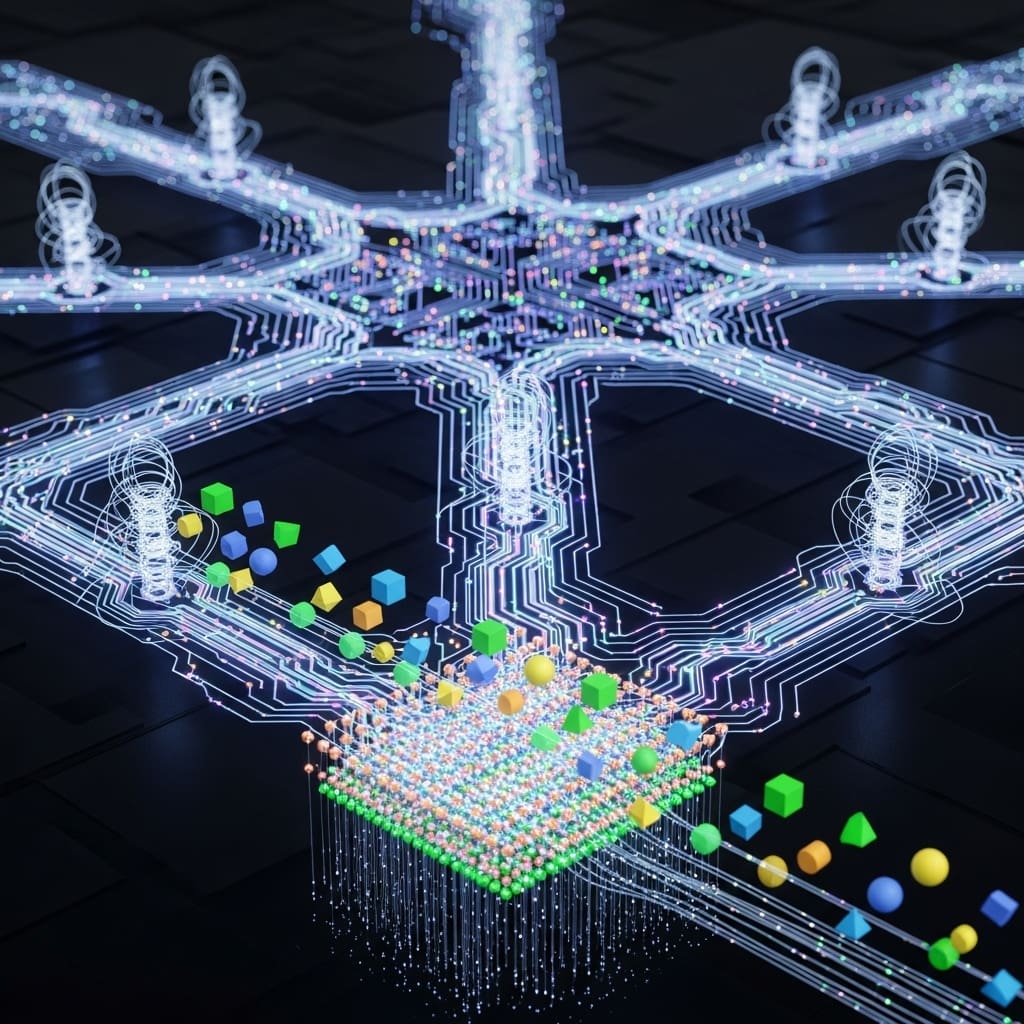

CamPilot’s core component is an efficient, camera-aware 3D decoder that decodes video latents into 3D representations. The decoder takes camera pose both as an input and as a projection parameter, so a mismatch between the video latent and the camera pose shows up as geometric distortion and blurry renderings. The method uses this degradation as a reward signal: it optimizes pixel-level consistency between rendered views and reference images, which gives a more precise assessment of video-camera alignment than earlier approaches and is then used to refine the generated video.

Building on existing Reward Feedback Learning (ReFL) techniques, the researchers address three limitations: the difficulty of accurately assessing video-camera alignment, the high computational cost of decoding video into RGB frames, and the neglect of 3D geometric information. They also introduce a visibility term that restricts the reward to deterministic regions. Content the model is free to invent is therefore not penalized, which preserves creative freedom and makes the reward signal more robust.

In experiments on the RealEstate10K and WorldScore benchmarks, CamPilot generated high-quality, temporally coherent videos while maintaining precise camera control. The work is presented as a framework for world-consistent video generation and 3D scene reconstruction. By using 3D Gaussians to evaluate video-camera consistency efficiently, the team addresses a limitation of previous approaches, which overlooked the underlying 3D geometry.

Technical Details

The research demonstrates that decoding video latents into 3D representations makes it possible to quantify a reward from geometric distortions, specifically the blurriness that arises when the video latent and the camera pose are misaligned. The reward optimizes pixel-level consistency between rendered views and ground-truth images, which establishes a measurable link between visual quality and camera alignment.
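The idea of a pixel-level reward can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `pixel_reward`, the array layout, and the choice of mean squared error are assumptions made for illustration, and the sketch takes already-rendered frames as input rather than decoding 3D Gaussians.

```python
import numpy as np


def pixel_reward(rendered: np.ndarray, target: np.ndarray) -> float:
    """Hypothetical pixel-level reward: negative mean squared error.

    rendered, target: float arrays of shape (T, H, W, 3) with values in [0, 1].
    Higher is better; a perfect match scores 0.0, and blurry or distorted
    renderings score below zero.
    """
    if rendered.shape != target.shape:
        raise ValueError("rendered and target must have the same shape")
    return -float(np.mean((rendered - target) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    target = rng.random((2, 8, 8, 3))
    # A crude stand-in for a blurry render: every pixel set to the mean color.
    blurry = np.full_like(target, target.mean())
    print("sharp reward: ", pixel_reward(target, target))
    print("blurry reward:", pixel_reward(blurry, target))
```

In this sketch a rendering that loses detail is scored strictly lower than one that matches the reference, which is the property the article attributes to the reward.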

Because camera pose acts as a projection parameter, misalignment manifests directly as geometric distortion in the 3D structure, which allows the team to measure it accurately. To account for the stochastic nature of video generation, the researchers employ a visibility term that focuses supervision on deterministic regions derived through geometric warping.

The results indicate that minimizing pixel-level differences improves both camera controllability and visual fidelity. The visibility-aware reward objective further refines the reward computation by focusing on visible, deterministic regions and avoiding penalties in hallucinated or occluded areas.
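A visibility-aware reward of this kind could be sketched as a masked pixel error. The function `visibility_aware_reward`, the boolean mask layout, and the use of squared error are illustrative assumptions, not details taken from the paper.

```python
import numpy as np


def visibility_aware_reward(
    rendered: np.ndarray, target: np.ndarray, visible: np.ndarray
) -> float:
    """Hypothetical masked reward: negative MSE over visible pixels only.

    rendered, target: float arrays of shape (T, H, W, 3).
    visible: boolean array of shape (T, H, W); True marks pixels whose content
    is determined by the reference, False marks occluded or newly generated
    regions that should not be penalized.
    """
    mask = visible.astype(rendered.dtype)[..., None]  # (T, H, W, 1)
    squared_error = (rendered - target) ** 2 * mask
    count = mask.sum() * rendered.shape[-1]  # number of supervised values
    if count == 0:
        return 0.0  # nothing to supervise, so no penalty
    return -float(squared_error.sum() / count)


if __name__ == "__main__":
    target = np.zeros((1, 4, 4, 3))
    rendered = target.copy()
    rendered[:, :, 2:, :] = 1.0  # error only in the right half of the frame

    all_visible = np.ones((1, 4, 4), dtype=bool)
    left_only = all_visible.copy()
    left_only[:, :, 2:] = False  # treat the right half as non-deterministic

    print(visibility_aware_reward(rendered, target, all_visible))
    print(visibility_aware_reward(rendered, target, left_only))
```

With the right half masked out, the error there no longer lowers the reward, which mirrors the article's point about not penalizing hallucinated or occluded areas.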

In addition to improving camera control, the study shows that depth maps can be rendered from the 3D Gaussians and combined with camera poses to determine which pixels are visible across frames. The feed-forward 3D decoder therefore serves two purposes: it reconstructs the 3D scene efficiently, and it provides the medium for reward computation.
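One common way to derive pixel visibility from depth and pose is a depth-consistency check after geometric warping, sketched below. The pinhole camera model, the shared intrinsics `K`, the relative pose `T_src_to_tgt`, the tolerance `rel_tol`, and the function name are all assumptions for illustration; the paper's exact procedure may differ.

```python
import numpy as np


def visibility_mask(depth_src, depth_tgt, K, T_src_to_tgt, rel_tol=0.05):
    """Hypothetical visibility test for source pixels in a target view.

    depth_src, depth_tgt: (H, W) z-depth maps for the two views.
    K: (3, 3) pinhole intrinsics, assumed shared by both views.
    T_src_to_tgt: (4, 4) rigid transform from source to target camera frame.
    Returns an (H, W) boolean mask over source pixels.
    """
    H, W = depth_src.shape
    v, u = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3)
    pix = pix.astype(np.float64)

    # Unproject source pixels to 3D points in the source camera frame.
    pts_src = (pix @ np.linalg.inv(K).T) * depth_src.reshape(-1, 1)
    # Move the points into the target camera frame.
    pts_tgt = pts_src @ T_src_to_tgt[:3, :3].T + T_src_to_tgt[:3, 3]

    z = pts_tgt[:, 2]
    in_front = z > 1e-6
    safe_z = np.where(in_front, z, 1.0)  # avoid dividing by zero or negatives
    proj = pts_tgt @ K.T
    u_t = np.round(proj[:, 0] / safe_z).astype(int)
    v_t = np.round(proj[:, 1] / safe_z).astype(int)
    in_bounds = in_front & (u_t >= 0) & (u_t < W) & (v_t >= 0) & (v_t < H)

    # A point is visible if the target depth map agrees with its warped depth;
    # a much smaller target depth means another surface occludes it.
    visible = np.zeros(H * W, dtype=bool)
    idx = np.nonzero(in_bounds)[0]
    d_tgt = depth_tgt[v_t[idx], u_t[idx]]
    visible[idx] = np.abs(d_tgt - z[idx]) <= rel_tol * d_tgt
    return visible.reshape(H, W)


if __name__ == "__main__":
    K = np.array([[4.0, 0.0, 1.5], [0.0, 4.0, 1.5], [0.0, 0.0, 1.0]])
    depth = np.full((4, 4), 2.0)
    shift = np.eye(4)
    shift[0, 3] = 1.0  # points move +1 along x in the target frame
    print(visibility_mask(depth, depth, K, shift))
```

In the usage example, a flat scene at depth 2 shifts by two pixels under the assumed pose, so only the left two source columns land inside the target image and are marked visible.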

The research has implications for fields such as virtual reality, robotics, game development, and architectural visualization, where both visual fidelity and accurate 3D representation are critical. The CamPilot project, which has a public project page, points toward user-friendly and highly customizable content creation tools. Future research may extend the framework to more complex scenes and dynamic environments.

👉 More information

🗞 CamPilot: Improving Camera Control in Video Diffusion Model with Efficient Camera Reward Feedback

🧠 ArXiv: https://arxiv.org/abs/2601.16214