The Electronic Frontier Foundation (EFF) has announced that it will accept code generated by large language models (LLMs) from contributors to its open-source projects, while explicitly rejecting comments and documentation that are not produced by humans. The policy, outlined by EFF representatives Alexis Hancock and Samantha Baldwin this week, emphasizes the foundation’s commitment to high-quality software tools rather than merely increasing the volume of code produced.

Hancock and Baldwin noted that while LLMs are adept at generating code that appears human-written, that code often contains underlying bugs that can be replicated at scale. “This makes LLM-generated code exhausting to review, especially with smaller, less resourced teams,” they explained, adding that submissions sometimes come from “well-intentioned people” who may submit code with hallucinations or misrepresentations.

This situation forces project maintainers to engage not just in code reviews but also in significant refactoring when contributors lack a fundamental understanding of the code generated. The EFF is concerned that a surge of AI-generated code might be “only marginally useful or potentially unreviewable.” In an effort to maintain quality, the EFF is urging contributors to thoroughly understand, review, and test their submissions before contributing. The organization also requests that contributors disclose when their code is generated by an LLM, and insists that documentation and comments must be of human origin.

According to Baldwin and Hancock, project leads retain the authority to determine the reviewability of submissions. Amanda Brock, CEO of OpenUK, highlighted that the open-source community is only beginning to recognize the implications of AI-generated code on projects and maintainers. She pointed out the challenges posed by the vast amount of content scraped by AI and the numerous bots contributing to this phenomenon, warning of an increasing volume of low-quality submissions. “We’re gonna see a lot more AI red cards in the coming weeks,” she predicted.

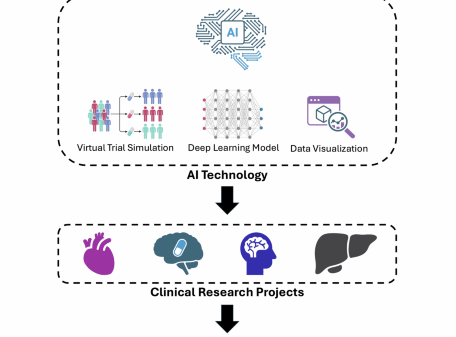

The EFF is currently managing four projects, all under GNU or Creative Commons licenses: Certbot, Privacy Badger, Boulder, and Rayhunter, each focused on privacy and security. The organization’s policy change carries a tone of cautious realism, as Hancock and Baldwin remarked, “It is worth mentioning that these tools raise privacy, censorship, ethical, and climatic concerns for many.” They emphasized that these issues are a continuation of harmful practices by tech companies that have led to the current landscape.

In a broader context, the EFF’s stance highlights ongoing concerns surrounding the use of AI in software development. As LLMs become increasingly integrated into coding practices, the risks of generating flawed or unreviewable code could compromise the integrity and security of open-source projects. The foundation’s decision to limit the use of AI-generated comments and documentation underscores a commitment to transparency and quality, reflecting a growing awareness of the ethical implications associated with AI technologies.

As the open-source community grapples with these challenges, it remains crucial for contributors to prioritize understanding and verifying their submissions. The EFF’s guidelines serve as a reminder of the importance of human oversight in maintaining the reliability of software tools that serve a broad public interest.
