Executives at leading tech firms have touted advancements in artificial intelligence (AI), suggesting that machines may soon achieve a level of thinking comparable to humans. However, this claim is met with skepticism from experts like Benjamin Riley, founder of Cognitive Resonance, who argues that current AI models do not equate to genuine intelligence.

In an essay for The Verge, Riley asserts that while humans often associate language with intelligence, the two are not synonymous. He emphasizes that, according to neuroscience, human thought operates largely independently of language. “We use language to think, but that does not make language the same as thought,” Riley writes, arguing that this distinction is crucial for separating scientific reality from the hyperbole often espoused by AI leaders.

Artificial general intelligence (AGI), defined as an AI system capable of human-level cognition across various tasks, is often heralded as the ultimate goal of AI research. Proponents claim that AGI could tackle significant global challenges, from eradicating diseases to addressing climate change. Yet, Riley contends that the industry’s reliance on large language models (LLMs) is misplaced, warning that these tools merely mimic language without achieving true cognitive ability.

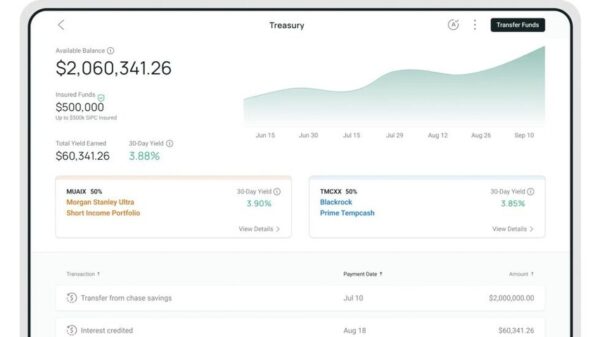

Riley attributes the escalating capital expenditures in AI to an obsession with scaling: feeding models more data and processing power to improve their conversational and problem-solving abilities. “LLMs are simply tools that emulate the communicative function of language, not the separate and distinct cognitive process of thinking and reasoning,” he explains. This perspective raises questions about the long-term viability of LLMs as a foundation for creating AGI.

Research corroborates Riley’s claims. Functional magnetic resonance imaging (fMRI) studies have revealed that different brain regions activate during distinct cognitive tasks, such as mathematics versus language. Furthermore, individuals who have lost their language skills can still perform various cognitive functions, including math and emotional understanding. Such findings challenge the notion that language is integral to human thought.

Even some of the foremost figures in AI harbor doubts about the capabilities of LLMs. Yann LeCun, a Turing Award winner and former AI chief at Meta, has long argued that LLMs cannot achieve general intelligence. He advocates for the development of “world models” that understand the physical world through diverse data, rather than being limited to language alone. This divergence of vision may have contributed to his departure from Meta, where CEO Mark Zuckerberg is heavily investing in AI technologies aimed at creating artificial “superintelligence” through LLMs.

Recent research further underscores the limitations of LLMs. A study published in the Journal of Creative Behavior used a mathematical framework to assess AI’s creative potential and concluded that LLMs have a hard ceiling. As probabilistic systems, these models eventually fail to generate genuinely novel outputs, which effectively limits them to “serviceable” content. “While AI can mimic creative behavior—quite convincingly at times—its actual creative capacity is capped at the level of an average human and can never reach professional or expert standards,” stated the study’s author, David H. Cropley, a professor at the University of South Australia.

These findings cast doubt on the prospects of LLM-powered AI driving groundbreaking innovations. If AI struggles to produce new and insightful concepts, how can it be expected to address pressing challenges like “new physics” or the climate crisis, as suggested by prominent figures such as Elon Musk and OpenAI CEO Sam Altman? Riley encapsulates this sentiment, remarking that while AI may blend existing knowledge in intriguing ways, it will remain confined to the vocabulary and data it has been trained upon, rendering it a “dead-metaphor machine.”

As debates around the capabilities and limitations of LLMs continue, the future of AI remains uncertain. The discourse emphasizes the need for a clearer understanding of what constitutes true intelligence and the role of language within cognitive processes, leaving the industry to reconsider its aspirations for AGI.

See also Alibaba’s Qwen3-VL Scans 2-Hour Videos with 99.5% Accuracy in Frame Detection

Alibaba’s Qwen3-VL Scans 2-Hour Videos with 99.5% Accuracy in Frame Detection Google Limits Nano Banana Pro to 2 Daily Images for Free Users Amid High Demand

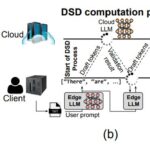

Google Limits Nano Banana Pro to 2 Daily Images for Free Users Amid High Demand Distributed Speculative Decoding Achieves 1.1x Speedup and 9.7% Throughput Gain for LLMs

Distributed Speculative Decoding Achieves 1.1x Speedup and 9.7% Throughput Gain for LLMs Synthetic Media Market Surges to $67.4B by 2034, Driven by Generative AI Innovations

Synthetic Media Market Surges to $67.4B by 2034, Driven by Generative AI Innovations OpenAI Launches GPT-5 with 10x Speed Improvement, Surpassing 1 Million Business Clients

OpenAI Launches GPT-5 with 10x Speed Improvement, Surpassing 1 Million Business Clients