A new systematic review finds that explainability has become the weakest link in the generative AI ecosystem, as current methods struggle to keep pace with the complexity and societal implications of these systems. The study, titled “Explainable Generative AI: A Two-Stage Review of Existing Techniques and Future Research Directions,” was published in the journal AI and examines how explainability is conceptualized, implemented, and evaluated in generative AI systems. Using a two-stage review process, the authors analyzed both prior review papers and empirical studies to chart the state of the field and pinpoint critical gaps that jeopardize the safe deployment of generative models.

Despite the rapid evolution of generative AI, research focused on explainability has lagged behind. Traditional explainable AI methods were primarily designed for predictive models that classify or label inputs. In contrast, generative systems operate in fundamentally different ways, producing multiple valid outputs for a single input by sampling from probability distributions and navigating latent spaces. This shift complicates the very notion of explaining an AI system.
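The contrast between a classifier and a generator can be illustrated with a small, hypothetical sketch in Python. It uses a toy "next-word" score table (the words, scores, and function names are illustrative assumptions, not taken from the review) and samples from it with a temperature parameter. A predictive model returns one label for an input; the sampler can return different, equally valid words for the same input, which is exactly what makes a single explanation hard to pin down.

```python
import math
import random

# Hypothetical toy "language model": for one fixed prompt it assigns a raw
# score (logit) to each candidate next word. Real models score tens of
# thousands of tokens and repeat this step for every token they generate.
TOY_LOGITS = {"sunny": 2.0, "cloudy": 1.5, "rainy": 1.0, "snowy": 0.2}


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities (higher temperature = flatter)."""
    scaled = {word: s / temperature for word, s in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def sample_next_word(logits, temperature=1.0, rng=random):
    """Draw one word at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


def classify(logits):
    """A predictive model, by contrast, deterministically returns the top label."""
    return max(logits, key=logits.get)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    print("classifier:", classify(TOY_LOGITS))
    # Same input, five draws: the generated word can differ from draw to draw.
    print("sampler:", [sample_next_word(TOY_LOGITS, 1.0, rng) for _ in range(5)])
```

Raising the temperature spreads probability across more words, so the same prompt yields an even wider range of outputs that any explanation would need to account for.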

Out of 261 initially identified studies, the authors ultimately examined 63 peer-reviewed articles published between 2020 and early 2025. The first stage synthesized insights from 18 review papers, while the second stage focused on 45 empirical studies that applied explainability techniques in real generative systems. The findings indicated that most contemporary explainability techniques are adaptations of older methods not designed for generative behavior.

Feature attribution tools such as SHAP, LIME, and saliency-based techniques remain dominant, despite their limited ability to capture how generative models build outputs step by step over time. These methods typically score isolated tokens, pixels, or features, producing partial explanations that miss the stochastic, multi-step nature of generation. As a result, an explanation may look convincing without faithfully representing the model’s behavior.
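The "isolated tokens" problem is easier to see in a small example. The Python sketch below uses leave-one-out occlusion, a simple attribution method swapped in here for illustration (it is not SHAP or LIME, and not a method from the review), on a hypothetical hand-written scorer. It gives each prompt word one importance score for one chosen output word. Nothing in that result reflects which other words might have been sampled, or how earlier generated words shaped this one.

```python
# Hypothetical toy scorer: how strongly each prompt word pushes the model
# toward a given output word. These values are illustrative assumptions;
# real models learn far richer, interacting effects.
WORD_EFFECTS = {
    "beach": {"sunny": 1.2, "rainy": -0.4},
    "umbrella": {"sunny": -0.3, "rainy": 1.5},
    "tomorrow": {"sunny": 0.1, "rainy": 0.1},
}


def score(prompt_words, output_word):
    """Sum each prompt word's effect on the chosen output word."""
    return sum(WORD_EFFECTS.get(w, {}).get(output_word, 0.0) for w in prompt_words)


def occlusion_attribution(prompt_words, output_word):
    """Leave-one-out attribution: the score drop when each word is removed.

    Returns (word, attribution) pairs in prompt order. This explains a single
    output word at a single step; it ignores alternative samples and the
    earlier generation steps that led to this word.
    """
    baseline = score(prompt_words, output_word)
    attributions = []
    for i, word in enumerate(prompt_words):
        reduced = prompt_words[:i] + prompt_words[i + 1:]
        attributions.append((word, baseline - score(reduced, output_word)))
    return attributions


if __name__ == "__main__":
    prompt = ["beach", "umbrella", "tomorrow"]
    # Explaining "sunny" versus "rainy" tells two different stories about the same prompt.
    print("sunny:", occlusion_attribution(prompt, "sunny"))
    print("rainy:", occlusion_attribution(prompt, "rainy"))
```

Because this toy scorer is additive, each attribution simply recovers that word's hand-coded effect. With a real generative model the effects interact, and the attributions shift depending on which output happened to be sampled.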

The study further highlights a widespread conceptual fragmentation within the field. Researchers from different communities often employ inconsistent terminology to describe similar ideas, while identical terms may refer to fundamentally different techniques. This lack of shared definitions complicates the comparison of results, replication of findings, and development of reliable standards for regulators and practitioners. The authors contend that explainability remains more of an emerging concept than a mature discipline within generative AI research.

As the review progresses, it examines how explainability is implemented in various generative AI domains. The empirical studies span applications in healthcare decision support, educational technologies, cybersecurity, creative tools, industrial systems, and legal contexts. Transformer-based models dominate this landscape, followed by generative adversarial networks and variational autoencoders.

Despite the diversity of applications, a few common patterns emerge. Most explainability efforts are post hoc, aiming to interpret model behavior after generation occurs rather than embedding transparency into the models themselves. These explanations are typically local, addressing individual outputs rather than providing a global understanding of system behavior. While this approach may improve user understanding of specific responses, it offers little insight into how models function across distributions, contexts, or edge cases.

The review identifies three key tensions that characterize explainability in generative AI. The first involves the transparency of the generative mechanism, emphasizing insight into architectures, latent representations, and sampling processes. The second focuses on user-centered interpretability, assessing whether explanations are meaningful for users with varied expertise. The third pertains to evaluation fidelity, which questions whether explanations accurately reflect the model’s operations.

Most current methods tend to optimize for one of these dimensions at the expense of others. Technical approaches that reveal internal mechanisms often yield explanations that are inaccessible to non-experts. Conversely, user-friendly explanations, such as natural language justifications, may enhance trust and usability but lack verifiable fidelity. Evaluation practices are inconsistent; many studies rely on anecdotal evidence or limited user studies instead of standardized benchmarks.

These shortcomings raise significant concerns in high-stakes domains like healthcare, education, and law, where explainability is crucial not only for usability but also for accountability and safety. Although some studies report improved user trust and task performance with explanations, few assess whether these explanations prevent harm, reduce bias, or enable meaningful oversight.

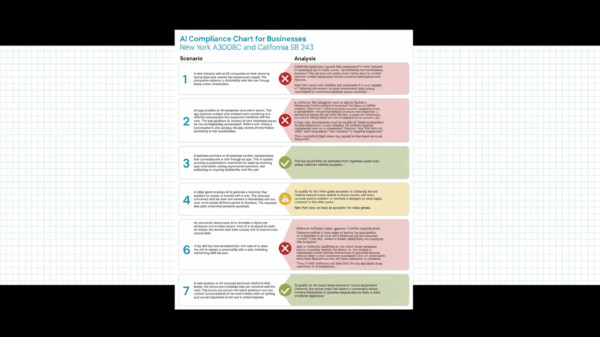

The study also notes a growing disconnect between regulatory expectations and the current technical capabilities of explainability. Policymakers worldwide are advancing frameworks that demand transparency, auditability, and accountability in AI systems, with the European Union AI Act frequently cited as a key external driver of explainability research. However, the review concludes that existing explainability techniques are not yet capable of reliably meeting these regulatory demands.

The authors argue that explainability should be reframed as a system-level property rather than as a collection of optional tools. For generative AI, explanations must encompass factors like training data influences, retrieval mechanisms, latent-space dynamics, and output variability. This requires moving beyond retrospective explanations toward explanation frameworks designed in tandem with the model architectures themselves.

Future research directions include developing model-agnostic explainability frameworks applicable across diverse generative architectures, establishing standardized evaluation protocols that balance technical fidelity with human-centered assessment, and addressing the trade-offs between interpretability and model performance. The authors highlight that high computational costs continue to hinder many advanced explainability methods, particularly in real-time or large-scale applications.

A human-centered design approach is another critical area for improvement. The review emphasizes that explanations must be tailored to various stakeholders, including developers, end users, domain experts, educators, and regulators. A one-size-fits-all approach to explainability is unlikely to succeed in systems impacting diverse populations and decision contexts. Interactive and adaptive explanation interfaces are identified as promising avenues, allowing users to actively explore model behavior rather than simply receiving passive explanations.

The authors also point to the potential of hybrid approaches that integrate statistical methods with symbolic reasoning, provenance tracking, and audit mechanisms. Such systems could bridge low-level technical transparency with high-level accountability, creating explanations that are both faithful and actionable. However, these approaches remain experimental and scarce.
