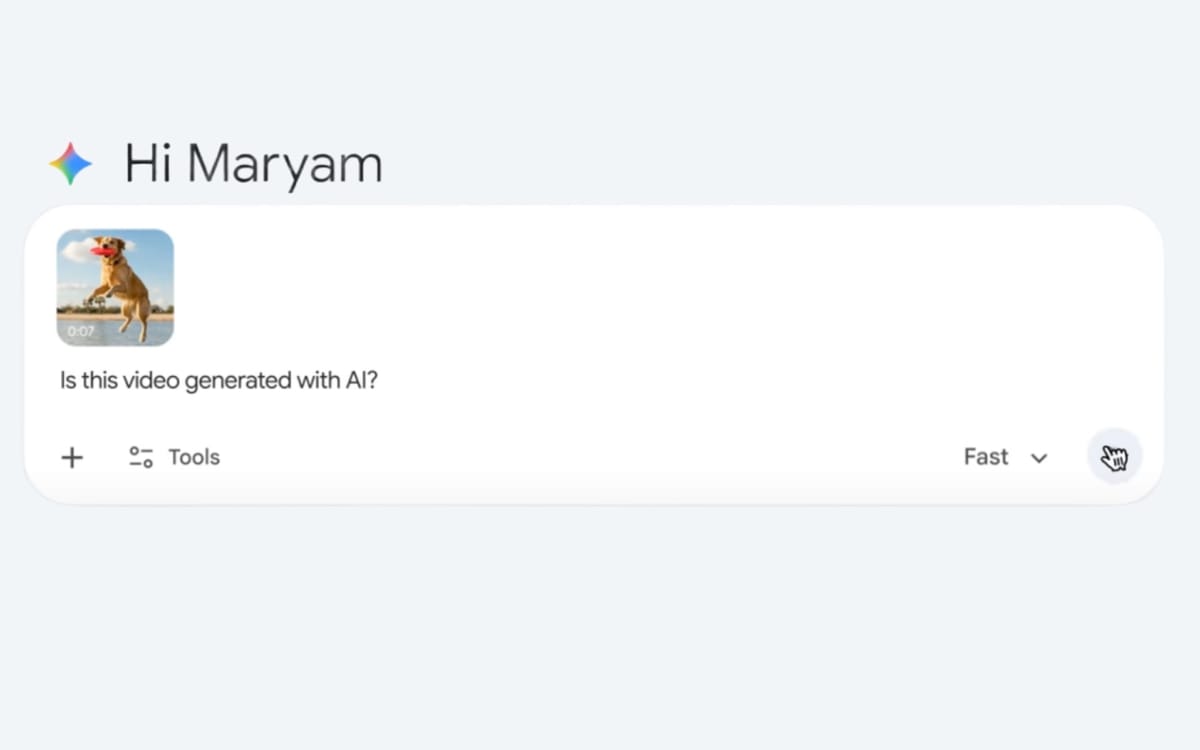

Google has unveiled a new video verification feature for its Gemini application, designed to help users check whether videos were created or edited using Google AI. Announced on December 18, 2025, the feature lets users upload videos of up to 100 MB and 90 seconds directly to Gemini and ask whether the content was generated by Google’s tools. The initiative responds to growing concerns about misinformation and synthetic media as artificial intelligence becomes more prevalent.

The verification process relies on SynthID watermarking technology, which Google claims is “imperceptible” to human eyes but detectable by machines. The watermark is embedded in both the audio and visual components of AI-generated content during its creation. Users can ask questions such as, “Was this generated using Google AI?” The system scans for SynthID markers and reports which segments of the video contain them. For instance, users might receive feedback like, “SynthID detected within the audio between 10-20 secs. No SynthID detected in the visuals.”

This enhancement builds on Google’s existing content transparency tools, which previously focused primarily on static images. As AI-generated media becomes more sophisticated, there has been increasing scrutiny over how platforms handle synthetic content. Digital marketing professionals have voiced concerns about the potential for misleading audiences, especially in advertising, where authenticity is crucial.

The verification tool arrives as many platforms consider how to establish authentication standards for synthetic media. Regulatory bodies have begun to examine disclosure requirements, with the Federal Trade Commission intensifying enforcement against deceptive practices in advertising. Google’s approach with SynthID differs significantly from metadata-based systems, whose labels can be easily stripped or modified. Because the SynthID watermark is embedded in the content itself, it is meant to remain detectable even after videos are edited or reformatted.

While the system provides a mechanism for distinguishing between fully synthetic videos and those that are partially edited, it also raises questions about its limitations. The file size and duration constraints, which restrict uploads to shorter videos, may reduce its applicability for longer commercial productions. Marketing teams often deal with extensive volumes of content, and the manual upload requirement could complicate integration into existing workflows.
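For teams handling many files, the reported limits could be checked before any manual upload. The following is a minimal sketch, not part of Google’s tooling: `VideoInfo` and `upload_problems` are hypothetical names, the duration is assumed to come from whatever media tool the team already uses, and treating “100 MB” as 100,000,000 bytes is an assumption, since the announcement does not say how the limit is measured.

```python
from dataclasses import dataclass
from typing import List

# Limits as reported in the announcement. Reading "MB" as 10^6 bytes is an
# assumption; the announcement does not specify the unit precisely.
MAX_SIZE_BYTES = 100 * 1000 * 1000
MAX_DURATION_SECONDS = 90.0


@dataclass
class VideoInfo:
    """Hypothetical record describing a video a team wants to verify."""
    name: str
    size_bytes: int
    duration_seconds: float


def upload_problems(video: VideoInfo) -> List[str]:
    """Return the reasons a video would exceed the reported limits (empty if none)."""
    problems = []
    if video.size_bytes > MAX_SIZE_BYTES:
        problems.append(f"{video.name}: file is larger than 100 MB")
    if video.duration_seconds > MAX_DURATION_SECONDS:
        problems.append(f"{video.name}: video is longer than 90 seconds")
    return problems


if __name__ == "__main__":
    batch = [
        VideoInfo("teaser.mp4", 42_000_000, 30.0),
        VideoInfo("full_ad.mp4", 250_000_000, 180.0),
    ]
    for video in batch:
        for problem in upload_problems(video):
            print(problem)
```

A check like this only flags files that would need trimming or splitting first; it does not remove the manual upload step itself.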

Google’s announcement did not clarify whether the verification system could detect AI-generated content created using competitors’ tools, such as OpenAI’s Sora or Meta’s video generation technologies. This limitation may spark discussions about the need for universal verification standards that function across different platforms. The verification capability is particularly relevant for digital advertising, where transparency about synthetic media is becoming increasingly essential.

Privacy considerations also arise, because users must upload videos to Google’s servers for analysis. The announcement did not provide specific data retention policies or clarify whether uploaded content would be used to train Google’s AI models. This raises concerns for marketing professionals working with proprietary or client content, who may seek assurances regarding data handling practices.

Accuracy in detecting synthetic content also remains a critical issue. The announcement did not include performance metrics or error rates for the verification system. Users relying on these results to make authentication decisions need to understand the potential for false positives and false negatives. Moreover, the system’s efficacy across different content types, for example audio with background noise or multiple spoken languages, has yet to be addressed.
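Since no figures were published, the two error rates can only be illustrated in general terms. The sketch below uses the conventional definitions for any detector; the function name `error_rates` is hypothetical and the counts are invented for illustration, not measurements of SynthID.

```python
from typing import Tuple


def error_rates(true_pos: int, false_pos: int,
                true_neg: int, false_neg: int) -> Tuple[float, float]:
    """Return (false positive rate, false negative rate) for a detector.

    false positive rate = unwatermarked videos flagged / all unwatermarked videos
    false negative rate = watermarked videos missed   / all watermarked videos
    """
    watermarked = true_pos + false_neg
    unwatermarked = false_pos + true_neg
    if watermarked == 0 or unwatermarked == 0:
        raise ValueError("need at least one watermarked and one unwatermarked sample")
    return false_pos / unwatermarked, false_neg / watermarked


if __name__ == "__main__":
    # Invented counts for illustration only; not data about SynthID.
    fpr, fnr = error_rates(true_pos=90, false_pos=5, true_neg=95, false_neg=10)
    print(f"false positive rate: {fpr:.2%}")
    print(f"false negative rate: {fnr:.2%}")
```

Which of the two rates matters more depends on the decision being made: a false positive wrongly labels genuine footage as AI-generated, while a false negative lets watermarked content pass as unmarked.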

The timing of the announcement strategically coincides with the year-end increase in advertising activity, providing marketers with a tool that may influence their content creation processes. While Google aims to democratize content verification through a consumer-facing application, the platform-specific nature of the tool is a notable constraint for users engaged with various content generation technologies.

As the digital landscape continues to evolve, Google’s verification tool highlights the pressing need for content authenticity in a world where AI-generated media is becoming the norm. The implications extend beyond consumer protection, influencing legal compliance, brand safety, and audience trust in commercial contexts. Moving forward, the industry’s response to these challenges will determine how effectively such verification tools can be integrated into broader content creation and advertising strategies.