Sahil Dua, co-leader of the team developing Google’s Gemini embedding models, recently presented a comprehensive overview of embedding models, which are central to modern search engines and machine learning applications. In his talk, he explained how systems retrieve relevant images or documents from vast online collections in response to a simple query such as “show me cute dogs.”

Embedding models are the backbone of this functionality: they map each input, whether text or an image, to a numerical vector, or embedding, that acts as a kind of digital fingerprint. Dua emphasized that similar inputs are mapped to nearby points in this vector space, while dissimilar inputs are placed far apart. This principle underpins retrieval across many platforms, from search engines to social media applications.
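To make the idea of "close together versus far apart" concrete, here is a minimal sketch that ranks documents by cosine similarity to a query embedding. The vectors are made-up toy values, not output from a Gemini model, and cosine similarity is an assumed choice of distance measure; production systems typically use an approximate nearest-neighbor index rather than sorting every document.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: list[float], docs: dict[str, list[float]]) -> list[str]:
    """Return document ids ordered from most to least similar to the query."""
    return sorted(docs, key=lambda doc_id: cosine_similarity(query, docs[doc_id]), reverse=True)


# Hypothetical 3-dimensional embeddings; real models output hundreds of dimensions.
docs = {
    "puppy_photo": [0.9, 0.1, 0.0],
    "kitten_photo": [0.6, 0.5, 0.1],
    "tax_form": [0.0, 0.1, 0.95],
}
query = [0.85, 0.2, 0.05]  # stand-in for the embedding of "show me cute dogs"
print(rank_by_similarity(query, docs))  # ['puppy_photo', 'kitten_photo', 'tax_form']
```
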

Among the many applications, Dua highlighted personalized recommendations: after a user buys an iPhone, for instance, the system can suggest compatible accessories. Techniques such as Retrieval-Augmented Generation (RAG) also use embedding models to retrieve relevant documents and insert them into a large language model’s context before it generates a response. Grounding the model in retrieved information this way improves accuracy and helps reduce hallucinations, a common problem in generative AI.
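The retrieval half of RAG can be sketched in a few lines: rank stored passages by similarity to the question's embedding, keep the top k, and prepend them to the prompt. This is an illustrative sketch only; the passages, their 2-dimensional embeddings, the `build_rag_prompt` helper, and the prompt template are all hypothetical, and the call to an actual language model is not shown.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def build_rag_prompt(question: str, question_emb: list[float],
                     passages: list[tuple[str, list[float]]], k: int = 2) -> str:
    """Retrieve the k passages most similar to the question and prepend them as context."""
    ranked = sorted(passages, key=lambda p: cosine(question_emb, p[1]), reverse=True)
    context = "\n".join(f"- {text}" for text, _ in ranked[:k])
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {question}"


# Hypothetical passages with toy 2-dimensional embeddings.
passages = [
    ("Returns are accepted within 30 days of purchase.", [0.9, 0.1]),
    ("The store opens at 9 a.m.", [0.2, 0.9]),
    ("Refunds go back to the original payment method.", [0.8, 0.3]),
]
prompt = build_rag_prompt("Can I return my phone?", [1.0, 0.0], passages)
print(prompt)  # this string would then be sent to a language model
```

Only the two passages about returns and refunds make it into the context, so the model answers from relevant text instead of relying on what it memorized during training.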

Dua also described the typical architecture of an embedding model, which consists of a tokenizer, an embedding projection, and a transformer. The tokenizer splits the input into tokens; the embedding projection maps each token to a vector; and the transformer refines these vectors so that each one reflects its surrounding context. Finally, a pooling step (for example, averaging the token vectors) combines them into a single embedding that captures the meaning of the whole input.
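The pipeline above can be illustrated with a toy model. This is a sketch under heavy simplifying assumptions: a whitespace tokenizer with a five-word vocabulary instead of a subword tokenizer, a random embedding table instead of learned weights, and a single parameter-free self-attention layer standing in for a full multi-layer transformer, followed by mean pooling. All names here are hypothetical.

```python
import math
import random

DIM = 4
VOCAB = {"<unk>": 0, "show": 1, "me": 2, "cute": 3, "dogs": 4}

# Embedding projection: one vector per token id (random here, learned in a real model).
_rng = random.Random(0)
EMBEDDING_TABLE = [[_rng.uniform(-1, 1) for _ in range(DIM)] for _ in VOCAB]


def tokenize(text: str) -> list[int]:
    """Toy whitespace tokenizer; unknown words map to the <unk> id."""
    return [VOCAB.get(word, VOCAB["<unk>"]) for word in text.lower().split()]


def embed_tokens(token_ids: list[int]) -> list[list[float]]:
    """Look up a static (context-free) vector for each token id."""
    return [list(EMBEDDING_TABLE[t]) for t in token_ids]


def self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """One parameter-free attention layer: each output is a softmax-weighted mix of
    all input vectors, so a token's vector now depends on its context."""
    scale = math.sqrt(len(vectors[0]))
    outputs = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in vectors]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        outputs.append([sum(w * v[d] for w, v in zip(weights, vectors)) / total
                        for d in range(len(q))])
    return outputs


def mean_pool(vectors: list[list[float]]) -> list[float]:
    """Average the contextual token vectors into one fixed-size embedding."""
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(len(vectors[0]))]


def encode(text: str) -> list[float]:
    """Tokenize, project, contextualize, and pool (assumes non-empty input)."""
    return mean_pool(self_attention(embed_tokens(tokenize(text))))


embedding = encode("show me cute dogs")
print(len(embedding))  # 4
```

Whatever the input length, the pooled output always has `DIM` entries, which is what lets embeddings of different inputs be compared directly.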

Training these models typically relies on contrastive learning, which pulls the embeddings of similar inputs closer together and pushes the embeddings of dissimilar inputs farther apart. Dua noted that training data can be prepared in both supervised and unsupervised ways: supervised data consists of labeled pairs of related inputs, while unsupervised pairs can be generated from raw, unlabeled text with self-supervised techniques such as next-sentence prediction and span corruption.
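One common way to implement contrastive learning is an in-batch softmax (InfoNCE-style) loss: each query's paired document is its positive, and the other documents in the same batch serve as negatives. The sketch below assumes this formulation, L2-normalized embeddings, and a hypothetical temperature of 0.05; the talk summary does not specify which contrastive loss Gemini embeddings use, and gradient computation is omitted.

```python
import math


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def in_batch_contrastive_loss(query_embs: list[list[float]], doc_embs: list[list[float]],
                              temperature: float = 0.05) -> float:
    """InfoNCE-style loss: query i's positive is doc i, all other docs are negatives.
    Assumes embeddings are L2-normalized, so the dot product equals cosine similarity."""
    total = 0.0
    for i, q in enumerate(query_embs):
        logits = [dot(q, d) / temperature for d in doc_embs]
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum_exp - logits[i]  # negative log-probability of the positive
    return total / len(query_embs)


queries = [[1.0, 0.0], [0.0, 1.0]]
aligned_docs = [[1.0, 0.0], [0.0, 1.0]]  # each query matches its own doc
swapped_docs = [[0.0, 1.0], [1.0, 0.0]]  # each query matches the wrong doc
print(in_batch_contrastive_loss(queries, aligned_docs))  # close to 0
print(in_batch_contrastive_loss(queries, swapped_docs))  # about 20
```

Minimizing this loss moves each query toward its positive and away from the in-batch negatives, which is exactly the "close together versus far apart" geometry described above.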

Once trained, large embedding models are often distilled into smaller, production-ready variants. Dua described three main distillation techniques: scoring distillation, embedding distillation, and a combination of the two. The goal is to retain as much of the larger model’s quality as possible while enabling faster, more efficient inference in real-world applications.
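A minimal sketch of the three distillation objectives follows, under one common reading of the terms: scoring distillation trains the student to reproduce the teacher's query-document scores, embedding distillation trains the student's vectors to match the teacher's directly, and the combined approach is a weighted sum. The use of mean squared error and the `alpha` weight are assumptions for illustration; the talk's exact formulation may differ.

```python
def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def mse(xs: list[float], ys: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def scoring_distillation_loss(student_q, student_docs, teacher_q, teacher_docs) -> float:
    """Match the student's query-document scores to the teacher's.
    Only scalar scores are compared, so the embedding sizes may differ."""
    student_scores = [dot(student_q, d) for d in student_docs]
    teacher_scores = [dot(teacher_q, d) for d in teacher_docs]
    return mse(student_scores, teacher_scores)


def embedding_distillation_loss(student_embs, teacher_embs) -> float:
    """Match each student embedding to the teacher's directly.
    Assumes equal dimensions (otherwise a learned projection would be needed)."""
    return sum(mse(s, t) for s, t in zip(student_embs, teacher_embs)) / len(student_embs)


def combined_distillation_loss(student_q, student_docs, teacher_q, teacher_docs,
                               alpha: float = 0.5) -> float:
    """Weighted mix of both objectives; alpha is a hypothetical tuning knob."""
    score_loss = scoring_distillation_loss(student_q, student_docs, teacher_q, teacher_docs)
    emb_loss = embedding_distillation_loss([student_q, *student_docs],
                                           [teacher_q, *teacher_docs])
    return alpha * score_loss + (1 - alpha) * emb_loss


teacher_q, docs = [1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]
student_q = [0.5, 0.0]  # the student's query embedding is off by half
print(scoring_distillation_loss(student_q, docs, teacher_q, docs))
print(combined_distillation_loss(student_q, docs, teacher_q, docs))
```

A practical consequence of this design: scoring distillation lets a student with a smaller embedding size learn from a larger teacher without any projection layer, while embedding distillation gives a denser training signal when the sizes match.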

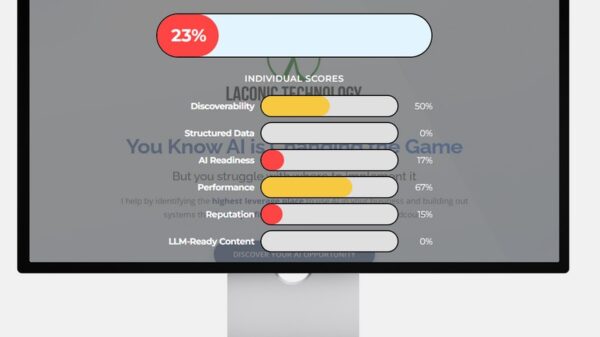

Dua said that evaluating embedding models requires robust metrics, particularly when golden (human-labeled) relevance labels are unavailable. In that case, auto-rater models, typically built on large language models, can assign relevance scores to the retrieved results and stand in for human labels. Standard metrics such as recall and normalized discounted cumulative gain (NDCG) can then be used to assess retrieval quality.
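Both metrics are straightforward to compute once each result has a relevance score, whether it comes from golden labels or an auto-rater. The sketch below uses the linear-gain form of NDCG (some formulations use a gain of 2^rel − 1 instead); the document ids and the 0 to 3 relevance scale are hypothetical.

```python
import math


def recall_at_k(ranked_ids: list[str], relevant_ids: set[str], k: int) -> float:
    """Fraction of all relevant items that appear in the top-k results."""
    if not relevant_ids:
        return 0.0
    hits = sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant_ids)
    return hits / len(relevant_ids)


def dcg(gains: list[float]) -> float:
    """Discounted cumulative gain: rank r (0-based) is discounted by log2(r + 2)."""
    return sum(g / math.log2(rank + 2) for rank, g in enumerate(gains))


def ndcg_at_k(ranked_ids: list[str], relevance: dict[str, float], k: int) -> float:
    """DCG of the actual ranking divided by DCG of the ideal ranking."""
    gains = [relevance.get(doc_id, 0.0) for doc_id in ranked_ids[:k]]
    ideal_dcg = dcg(sorted(relevance.values(), reverse=True)[:k])
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0


ranked = ["d3", "d1", "d2"]     # retrieval order produced by the model
relevance = {"d1": 3, "d2": 1}  # hypothetical auto-rater scores; d3 is irrelevant
print(recall_at_k(ranked, set(relevance), k=2))  # 0.5
print(round(ndcg_at_k(ranked, relevance, k=3), 3))  # 0.659
```

Recall only asks whether relevant items were retrieved, while NDCG also rewards placing the most relevant items first, which is why the irrelevant `d3` in the top slot pulls the score below 1.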

For serving embedding models at scale, Dua highlighted two main challenges: query latency and document indexing cost. To reduce query latency, he suggested server-side dynamic batching, which groups requests that arrive close together into a single model call, and quantization, which stores model weights in lower-precision formats to shrink the model with minimal loss of quality. For document indexing, using larger batches and a smaller embedding size can significantly improve throughput.
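Quantization can be illustrated with the simplest scheme, symmetric per-tensor int8 quantization: every weight is stored as an integer in [-127, 127] together with one shared float scale. This is a generic sketch, not the specific scheme used for Gemini embedding models, and the weight values are hypothetical; dynamic batching is not shown.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: integers in [-127, 127] plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]  # hypothetical float32 weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, max_error)  # rounding error is at most scale / 2 per weight
```

Each weight then occupies 1 byte instead of 4 for float32, roughly a 4x reduction in memory. The same arithmetic explains the indexing advice: a hypothetical 768-dimensional float32 embedding takes 3,072 bytes per document, while a 256-dimensional one takes 1,024.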

As organizations increasingly adopt embedding models, Dua stressed the importance of choosing carefully, especially among off-the-shelf models. Key considerations include the intended use case, support for the required languages, and relevance to the data domain. He also advised checking licensing terms to avoid legal complications, and pointed to community support and public benchmarks as useful signals when evaluating performance.

In conclusion, Dua’s insights offer a roadmap for leveraging embedding models in various applications, from improving search functionalities to powering personalized content delivery. As the landscape of artificial intelligence continues to evolve, the significance of embedding models in enhancing user experiences and operational efficiency will only grow.
