Generative Adversarial Networks (GANs) have emerged as a significant advancement in the field of artificial intelligence, particularly in the domain of automatic image synthesis. Despite their remarkable capabilities in generating synthetic data, the challenge of objectively evaluating the quality of this generated data remains unresolved. Unlike discriminative models, which can be assessed using established metrics, generative models require evaluation criteria that effectively measure both the visual quality and diversity of the samples produced.

One of the earliest metrics introduced for this purpose is the Inception Score (IS). This metric, derived from the predictions of a pre-trained Inception network, provides a quantitative estimate of a generative model’s ability to create realistic and semantically meaningful images. An analysis of the Inception Score reveals its underlying principles, as well as the limitations that have led to the exploration of additional evaluation metrics.

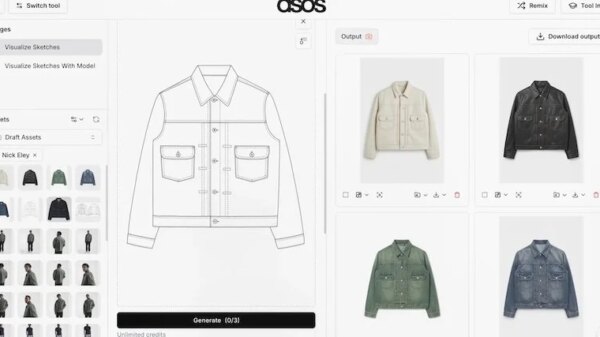

A GAN can be considered a deep learning framework that generates new data resembling an initial training set. To illustrate the concept, one can use the metaphor of a forger and an art critic. The forger (Generator) aims to create images that closely resemble authentic ones from the training set, while the critic (Discriminator) seeks to distinguish between genuine and synthetic images. In the initial phases, the forger struggles to fool the critic, but as the critic provides feedback, the forger improves, gradually mastering the art of deception.

In practical terms, a GAN consists of two primary components: the Generator (G) and the Discriminator (D). The Generator is tasked with producing synthetic data, taking a noise vector as input, which is typically drawn from a normal distribution. As this vector passes through various layers of the generator, it is up-sampled until it forms a complete image. Conversely, the Discriminator classifies images as either real or fake, performing a down-sampling process that extracts features to make its determination.
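The data flow described above can be sketched with a toy, one-dimensional example. This is only an illustration of the shapes involved, under strong simplifying assumptions: the function names (`toy_generator`, `toy_discriminator`, and the helpers) are hypothetical, nothing is trained, and fixed nearest-neighbour up-sampling and average pooling stand in for the learned layers a real GAN would use.

```python
import math
import random

def sample_noise(dim, rng):
    """Draw a noise vector from a standard normal distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def upsample(values, factor=2):
    """Nearest-neighbour up-sampling: repeat each value `factor` times."""
    return [v for v in values for _ in range(factor)]

def downsample(values, factor=2):
    """Average pooling: replace each group of `factor` values by its mean."""
    return [sum(values[i:i + factor]) / factor
            for i in range(0, len(values), factor)]

def toy_generator(noise, n_layers=3):
    """Grow the noise vector into a larger 'image' (here a flat list)."""
    x = noise
    for _ in range(n_layers):
        x = upsample(x)
    return x

def toy_discriminator(image, n_layers=3):
    """Shrink the image to a few features, then squash to a value in (0, 1)."""
    x = image
    for _ in range(n_layers):
        x = downsample(x)
    mean_feature = sum(x) / len(x)
    return 1.0 / (1.0 + math.exp(-mean_feature))  # untrained 'real' probability

rng = random.Random(0)                 # fixed seed so the run is repeatable
noise = sample_noise(4, rng)
image = toy_generator(noise)           # 4 values -> 8 -> 16 -> 32
p_real = toy_discriminator(image)      # 32 values -> 16 -> 8 -> 4 -> 1 score

print(len(noise), len(image))
print(0.0 < p_real < 1.0)
```

In an actual GAN both functions would be neural networks whose weights are updated from the Discriminator's feedback; the sketch only shows how a small noise vector grows into an image and how an image is reduced to a single real-or-fake score.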

The noise vector plays a crucial role: because each sampled vector differs, the Generator can produce images with varying characteristics. Diverse inputs are necessary but not sufficient, however. In mode collapse, the Generator maps many different noise vectors to nearly identical images, so variety in the input no longer translates into variety in the output.

While human judgment remains one of the best ways to evaluate a GAN, the Inception Score offers a more systematic approach. The score assesses two critical parameters: image quality and diversity. Quality indicates how well an image represents its intended subject, while diversity measures the Generator’s ability to create different images. For instance, if the goal is to generate images of dogs, the resulting pictures should depict various breeds, poses, and environments.

The Inception Score is calculated using a batch of generated images and is based on the outputs of the Inception network, which has been pre-trained on the ImageNet dataset encompassing 1,000 classes. The metric relies on two probability distributions over those classes: the conditional distribution (Pc) and the marginal distribution (Pm). The conditional distribution is the network’s class prediction for a single generated image; when it is sharply peaked on one class, the image clearly depicts a recognizable object, which reflects quality. The marginal distribution is the average of the conditional distributions over the whole batch; when it is spread evenly across classes, the Generator is producing varied content, which reflects diversity. A marginal distribution concentrated on only a few classes may indicate mode collapse.
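As a rough illustration of the two distributions, the sketch below averages a handful of per-image class predictions (Pc) to estimate the marginal (Pm) and compares their entropies. The probabilities are hand-written, hypothetical values over three classes rather than real Inception outputs, and the helper names are assumptions made for this example.

```python
import math

def marginal_distribution(conditionals):
    """Average the per-image class distributions (Pc) to estimate Pm."""
    n_images = len(conditionals)
    n_classes = len(conditionals[0])
    return [sum(p[k] for p in conditionals) / n_images
            for k in range(n_classes)]

def entropy(p):
    """Shannon entropy in nats; zero-probability terms contribute nothing."""
    return -sum(q * math.log(q) for q in p if q > 0)

# Hypothetical classifier outputs for four generated images, three classes.
conditionals = [
    [0.90, 0.05, 0.05],
    [0.05, 0.90, 0.05],
    [0.05, 0.05, 0.90],
    [0.90, 0.05, 0.05],
]

marginal = marginal_distribution(conditionals)
mean_conditional_entropy = sum(entropy(p) for p in conditionals) / len(conditionals)

print("Pm:", [round(q, 4) for q in marginal])
print("mean entropy of Pc:", round(mean_conditional_entropy, 3))
print("entropy of Pm:", round(entropy(marginal), 3))
```

Confident per-image predictions give a low conditional entropy, while a batch that covers several classes gives a higher marginal entropy. A large gap between the two is what the Inception Score rewards.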

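To derive the Inception Score, the Kullback–Leibler (KL) divergence between each image’s conditional distribution and the marginal distribution, KL(Pc ‖ Pm), is calculated, averaged over the generated examples, and then exponentiated. The result lies between 1 and the number of classes (1,000 for ImageNet). A high score indicates a successful Generator, one whose images are both individually recognizable and collectively diverse. However, the question arises: high compared to what?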
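The calculation can be sketched in a few lines. This is a minimal version that assumes the per-image class probabilities are already available as lists; in practice they would come from the Inception network's softmax output. It takes the exponential of the average KL divergence, which is the usual definition of the score. The function name and the three-class toy inputs are hypothetical.

```python
import math

def inception_score(conditionals):
    """exp( mean over images of KL(Pc || Pm) ) for a batch of predictions."""
    n_images = len(conditionals)
    n_classes = len(conditionals[0])
    # Marginal Pm: average of the per-image distributions.
    marginal = [sum(p[k] for p in conditionals) / n_images
                for k in range(n_classes)]
    mean_kl = 0.0
    for p in conditionals:
        # KL(Pc || Pm); terms with Pc = 0 contribute nothing.
        kl = sum(pk * math.log(pk / mk)
                 for pk, mk in zip(p, marginal) if pk > 0)
        mean_kl += kl / n_images
    return math.exp(mean_kl)

# Confident predictions spread evenly over the three classes.
diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Confident predictions that all land on the same class.
collapsed = [[1.0, 0.0, 0.0]] * 3
# Predictions that never commit to any class.
unsure = [[1 / 3, 1 / 3, 1 / 3]] * 3

print(round(inception_score(diverse), 3))    # close to the 3-class ceiling
print(round(inception_score(collapsed), 3))  # at the floor of 1
print(round(inception_score(unsure), 3))     # also at the floor of 1
```

The toy inputs show both failure modes: a batch stuck on one class and a batch of unrecognizable images each drive the score to its minimum, while only the confident and varied batch reaches the maximum for three classes.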

The Inception Score calculated on real data can serve as a benchmark against which to compare the score from generated data. A generative model is deemed satisfactory when the Inception Score of synthetic data approaches that of real data, suggesting that the model accurately reproduces the label distribution and visual complexity of the original dataset.

Nevertheless, the use of the Inception Score has limitations. It is essential to interpret the value obtained within the context of the problem at hand. Given that the Inception network was trained on the ImageNet dataset, there may be instances where the classes learned by the Generator do not align with those within the network’s semantic space, and the resulting score can be misleading. The score also depends only on predicted class probabilities, so it cannot detect mode collapse within a class: a Generator that produces a single convincing image for each class would still score highly.
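One way to see why the score can mislead under mode collapse is a small experiment with hypothetical predictions. The sketch assumes a three-class setting and a made-up Generator that emits exactly one image per class, repeated many times. Because the score only sees class probabilities, it has no way to notice that the images within each class are identical.

```python
import math

def inception_score(conditionals):
    """exp of the mean KL divergence between each Pc and the batch marginal Pm."""
    n = len(conditionals)
    marginal = [sum(col) / n for col in zip(*conditionals)]
    mean_kl = sum(
        sum(pk * math.log(pk / mk) for pk, mk in zip(p, marginal) if pk > 0)
        for p in conditionals
    ) / n
    return math.exp(mean_kl)

# Hypothetical collapsed Generator: one image per class, 100 copies of each.
# Every copy receives the same confident prediction from the classifier.
one_image_per_class = (
    [[1.0, 0.0, 0.0]] * 100
    + [[0.0, 1.0, 0.0]] * 100
    + [[0.0, 0.0, 1.0]] * 100
)

score = inception_score(one_image_per_class)
print(round(score, 3))  # near the three-class maximum despite only 3 distinct images
```

The batch contains only three distinct images, yet it scores at the ceiling for three classes. This is one reason the value should be read alongside a visual inspection of the samples.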

While the Inception Score can offer preliminary insights into the quality of generated data, it is advisable to combine it with other quantitative metrics. Such an approach provides a more comprehensive and reliable evaluation of a generative model’s performance.
