New York Governor Kathy Hochul signed the Synthetic Performer Disclosure Law (S.8420-A / A.8887-B) on December 11, 2025, establishing the nation’s first comprehensive framework aimed at ensuring transparency in advertising that employs synthetic human actors or AI-generated personas. The legislation comes as the advertising industry increasingly uses generative AI to reduce production costs. By requiring clear labels that distinguish human performers from machine-generated ones, it marks a significant move toward consumer awareness.

This law is a direct response to the rapid rise of “hyper-realistic” AI avatars that have infiltrated social media and commercial advertising. By mandating a “conspicuous disclosure,” New York is setting a high standard for honesty in digital media, compelling brands to reveal when the engaging faces in their promotions are products of algorithms rather than real people.

The legislation specifically identifies “synthetic performers” as digitally created entities designed to simulate real human beings without being recognizable as specific individuals. This marks a departure from previous “deepfake” regulations, which focused on the unauthorized use of actual individuals’ likenesses. Under the new law, advertisements that feature synthetic performers must include labels such as “AI-generated person” or “Includes synthetic performer,” which must be easily noticeable and understandable to consumers.

The law places the responsibility for disclosure on content creators or sponsors, making them accountable for revealing the use of platforms like Synthesia or HeyGen to create their spokespersons. However, it provides a safe harbor for media distributors, meaning that television networks and digital platforms like Meta (NASDAQ: META) and Alphabet (NASDAQ: GOOGL) will generally be exempt from liability as long as they are not the primary creators of the content.

Experts have noted that this legislation is distinct from earlier, broader AI regulations. By concentrating on “commercial purpose” and “synthetic performers,” it avoids encroaching on artistic works like films or video games. This targeted approach has garnered praise from the AI research community for balancing the need for transparency with the importance of safeguarding creative innovation.

The implications for the advertising industry are both immediate and profound. Major technology companies providing AI-driven marketing tools, such as Adobe (NASDAQ: ADBE) and Microsoft (NASDAQ: MSFT), are expected to rapidly integrate automated labeling features into their software to assist clients in complying with the new requirements. While the law validates the usefulness of these AI tools, the necessity for a discernible label could undermine the seamless, high-end appearance that many brands have sought to achieve with AI-generated content.

For global advertising firms like WPP (NYSE: WPP) and Publicis, compliance with the law will require a comprehensive revision of their creative processes. Some industry insiders worry that the “AI-generated” label might carry negative connotations, prompting brands to revert to traditional human talent, a shift that would be welcomed by labor unions. SAG-AFTRA, a key supporter of the bill, praised its signing as a significant victory, asserting that it prevents AI from surreptitiously replacing human actors without public awareness.

Startups focusing on AI-generated avatars also face new challenges. Companies that have enjoyed high valuations based on their ability to produce “indistinguishable” human-like content may need to adapt their strategies in light of the new law. A competitive edge may now favor businesses that can offer “certified authentic” human representations or develop aesthetically pleasing methods of incorporating required disclosures into their content.

The law represents a critical milestone in the broader AI landscape, aligning with global trends in “AI watermarking” and provenance standards like C2PA. It arrives at a time when public trust in digital media is dwindling, and there is a growing movement among Gen Z and Millennial consumers advocating for “AI-free” branding. By codifying transparency, New York is effectively categorizing AI-generated humans as a new type of “claim” that requires substantiation—similar to “organic” or “sugar-free” labels in the food sector.

However, the law has drawn criticism regarding potential “disclosure fatigue.” Detractors contend that as AI becomes pervasive in various production aspects—from color grading to background extras—labeling every synthetic component could lead to an overwhelming visual environment. Additionally, the law enters a complicated legal landscape, as federal authorities are also seeking to establish national AI regulations, raising the possibility of conflicts with New York’s specific requirements.

This legislation is already being viewed as the “GDPR moment” for synthetic media. Just as Europe’s data privacy framework prompted a global reevaluation of digital tracking, New York’s disclosure mandates are anticipated to emerge as the de facto national standard, as brands will likely avoid creating separate, non-labeled versions of their advertisements for other states.

Looking ahead, the “Synthetic Performer Disclosure Law” is expected to pave the way for additional regulations, particularly concerning “AI Influencers” on platforms like TikTok and Instagram, where the distinction between real individuals and synthetic avatars is often deliberately obscured. As AI actors evolve to facilitate real-time interactions, the urgency for clear disclosures will become increasingly pronounced.

Experts also foresee that enforcement will pose challenges in the decentralized realm of social media. While larger brands are likely to comply to avoid penalties of $5,000 per violation, smaller creators and informal advertisers may prove harder to police. Furthermore, as generative AI expands into audio and real-time video communications, the definition of a “performer” will likely need to adapt. We may soon witness the emergence of “Transparency-as-a-Service” companies, offering automated verification and labeling solutions to ensure that advertisements comply with regulations across all 50 states.

The interplay between this law and New York’s recently signed RAISE Act (Responsible AI Safety and Education Act) suggests a future where AI safety and consumer transparency are intrinsically connected. The RAISE Act’s focus on “frontier” model safety protocols is expected to provide the technical foundation necessary for tracking the provenance of the avatars that the disclosure law aims to identify.

In summary, the passage of New York’s Synthetic Performer Disclosure Law marks a significant turning point in the contemporary media landscape. By requiring the identification of synthetic humans, New York has firmly positioned itself in favor of consumer protection and the interests of human labor. With the law set to take effect in June 2026, the industry will be watching how consumers respond to the “AI-generated” labels. Will this prompt a widespread rejection of synthetic media, or will audiences become desensitized? As the landscape evolves, expect a surge of activity from ad-tech firms and legal teams striving to clarify what constitutes “conspicuous” in a world where the boundaries between the virtual and the real are increasingly blurred.

See also DuckDuckGo Launches AI Image Generator with Enhanced Privacy Features

DuckDuckGo Launches AI Image Generator with Enhanced Privacy Features Intel Launches $300 B50 GPU Offering Solid Performance for AI and 3D Tasks in 2025

Intel Launches $300 B50 GPU Offering Solid Performance for AI and 3D Tasks in 2025 AI Study Reveals Image Generation Converges to 12 Styles After 100 Cycles

AI Study Reveals Image Generation Converges to 12 Styles After 100 Cycles China Files 700 Generative AI Models as HarmonyOS Devices Exceed 1.19 Billion Units

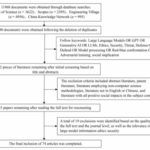

China Files 700 Generative AI Models as HarmonyOS Devices Exceed 1.19 Billion Units Study Uncovers 73 Risks of Large Language Models, Urges Urgent Ethical Governance

Study Uncovers 73 Risks of Large Language Models, Urges Urgent Ethical Governance