Researchers from Friedrich-Alexander-Universität Erlangen-Nürnberg and the University of Zurich have unveiled ProGiDiff, a new framework for medical image segmentation. It addresses a longstanding limitation of traditional segmentation methods, which rely on deterministic approaches that adapt poorly to nuanced instructions. The study, by Yuan Lin, Murong Xu, Marc Hölle, Chinmay Prabhakar, and colleagues, leverages pre-trained diffusion models, originally designed for image generation, to improve segmentation accuracy in medical imaging.

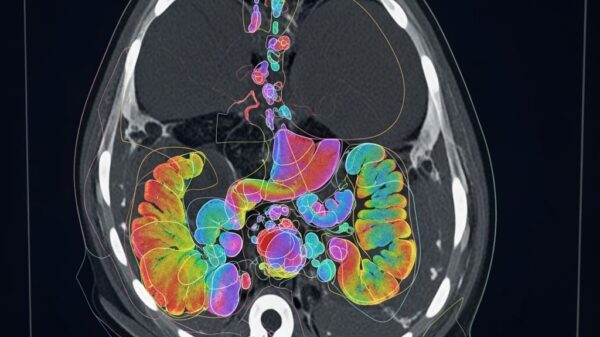

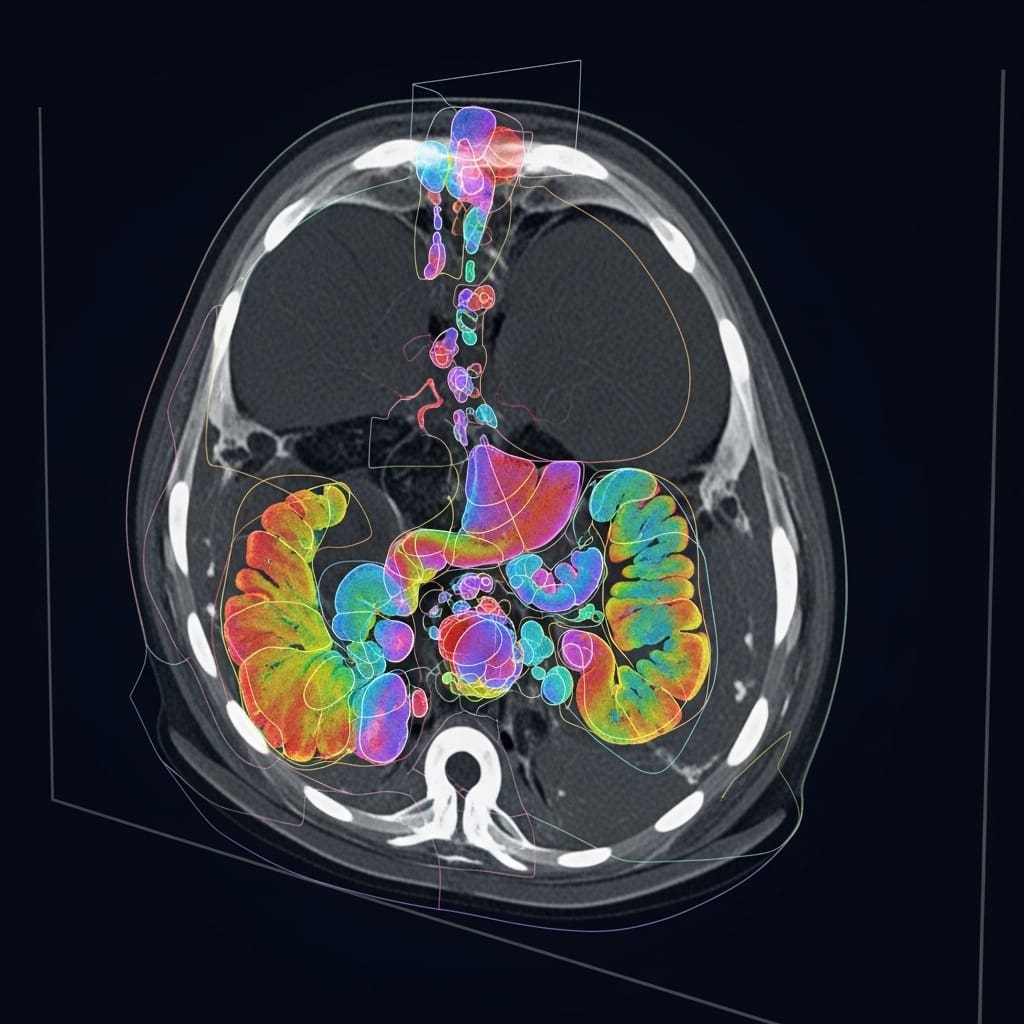

ProGiDiff accepts natural language prompts, giving users precise control over segmentation outputs that go beyond simple binary masks. The framework also transfers across modalities, applying to both CT and MR images with minimal retraining. The researchers note that existing methods often struggle with human interaction and cannot generate multiple interpretations of the same image, which makes ProGiDiff a timely advance in the field.

The framework employs a ControlNet-style conditioning mechanism paired with a custom encoder designed for image-based conditioning. This dual approach facilitates the accurate generation of segmentation masks by guiding the pre-trained diffusion model in a targeted manner. One of the most notable aspects of ProGiDiff is its ability to handle multi-class segmentation seamlessly by allowing users to specify the target organ through prompts, thereby broadening anatomical analysis across various clinical scenarios.
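The article does not describe the conditioning mechanism in detail, so the following is only a minimal sketch of the general ControlNet idea, assuming a frozen block plus a trainable side branch whose output passes through a zero-initialised projection. The names `frozen_block`, `ControlBranch`, and `controlled_block`, and the toy arithmetic inside them, are hypothetical stand-ins and not ProGiDiff's actual layers.

```python
def frozen_block(x):
    # Hypothetical stand-in for a frozen, pre-trained diffusion layer.
    return [2.0 * v + 1.0 for v in x]


class ControlBranch:
    """Trainable side branch that sees the conditioning signal (assumed design)."""

    def __init__(self, dim):
        self.weight = [0.5] * dim      # trainable weights of the side branch
        self.zero_proj = [0.0] * dim   # zero-initialised output projection

    def __call__(self, x, cond):
        hidden = [w * (xi + ci) for w, xi, ci in zip(self.weight, x, cond)]
        # With zero_proj at zero, the branch contributes nothing at the start.
        return [z * h for z, h in zip(self.zero_proj, hidden)]


def controlled_block(x, cond, branch):
    # The frozen output is kept intact; the branch only adds a residual.
    base = frozen_block(x)
    residual = branch(x, cond)
    return [b + r for b, r in zip(base, residual)]


x = [1.0, 2.0, 3.0]
cond = [0.5, 0.5, 0.5]   # toy conditioning features, e.g. from an image encoder
branch = ControlBranch(dim=3)
initial = controlled_block(x, cond, branch)

branch.zero_proj = [1.0] * 3   # pretend training has moved the projection
trained = controlled_block(x, cond, branch)
```

Because the projection starts at zero, the combined block initially reproduces the frozen model exactly, and the conditioning signal only gains influence as the projection is trained.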

Initial experiments focused on CT images, where ProGiDiff exhibited strong performance compared to existing segmentation techniques. This finding underscores its potential for expert-in-the-loop workflows, where clinicians can iteratively refine segmentation proposals. The framework not only improves segmentation accuracy but also enhances the efficiency of clinical decision-making processes.

A key innovation in ProGiDiff lies in its conditioning mechanism, which supports efficient transfer learning. By utilizing low-rank, few-shot adaptation techniques, the model demonstrates robust generalization across different imaging modalities, such as MRI. This approach emphasizes embedding semantic prior information into the diffusion process, which enhances language-driven interpretability and aligns textual prompts with visual medical representations. The custom encoder effectively translates medical image features into representations compatible with the pre-trained diffusion backbone, streamlining the segmentation process while minimizing retraining requirements.
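To make the low-rank idea concrete, here is a small sketch of a generic low-rank weight update, assuming the common formulation in which a frozen weight matrix `w` is adjusted by the product of two thin matrices `b` and `a`, scaled by `alpha / rank`. The article does not state which layers are adapted or which rank is used, so every shape and value below is illustrative.

```python
def matmul(a, b):
    # Plain-Python matrix product for small illustrative matrices.
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_weight(w, b, a, alpha):
    """Effective weight w + (alpha / rank) * (b @ a); w itself stays frozen."""
    rank = len(a)                      # a has shape (rank, d_in)
    scale = alpha / rank
    delta = matmul(b, a)               # b has shape (d_out, rank)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d_out, d_in, rank):
    # Only the two thin matrices are trained.
    return rank * (d_out + d_in)


w = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]   # frozen 2x3 weight (toy values)
a = [[1.0, 0.0, -1.0]]                   # rank-1 factor, shape 1x3
b_zero = [[0.0], [0.0]]                  # zero start: no change to the model
b_trained = [[1.0], [2.0]]               # hypothetical values after adaptation

unchanged = lora_weight(w, b_zero, a, alpha=2.0)
adapted = lora_weight(w, b_trained, a, alpha=2.0)
```

For a hypothetical 768 x 768 layer, a rank-4 update trains `trainable_params(768, 768, 4)` = 6,144 values instead of the 589,824 in the full matrix, which is why this kind of adaptation can work with only a few labeled examples.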

The low-rank adaptation strategy allows the model to be adjusted quickly to new imaging modalities without large annotated datasets. Validation experiments on abdominal CT organ segmentation confirmed ProGiDiff’s competitive performance, while few-shot domain adaptation experiments on MR images further illustrated its applicability in clinical settings. This adaptability is particularly important in medical environments, where labeled data is often scarce.

Although performance was initially lower on smaller structures such as the pancreas, integrating the custom image encoder raised the average Dice score from 70.72% to 75.03%, a gain of 4.31 percentage points, or 6.09% in relative terms. This improved discriminability in ambiguous regions highlights ProGiDiff’s effectiveness in challenging segmentation tasks. The framework is also reported to surpass the Diff-UNet model, which achieved a Dice score of 81.09%. Moreover, in a simulated expert-in-the-loop setting, the study found that generating multiple segmentation proposals consistently improved the average Dice score, which reached approximately 86% with 50 samples.
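The Dice figures above can be checked with a few lines of arithmetic, and the expert-in-the-loop result can be illustrated with a toy selection step. The sketch below assumes flat binary masks and an oracle that picks the proposal with the highest Dice against the reference; the article does not say how the study selected among proposals, so `best_of_n` is a hypothetical simplification.

```python
def dice(pred, target):
    """Dice coefficient for two flat binary masks (lists of 0/1)."""
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return 1.0 if total == 0 else 2.0 * intersection / total


def best_of_n(proposals, target):
    # Assumed oracle selection: an expert keeps the best of several proposals.
    return max(dice(p, target) for p in proposals)


# Reported average Dice without and with the custom image encoder.
before, after = 70.72, 75.03
absolute_gain = round(after - before, 2)                     # percentage points
relative_gain = round((after - before) / before * 100, 2)    # percent of baseline

# Toy masks: more sampled proposals can only keep or raise the best score.
target = [1, 1, 0, 0]
proposals = [[1, 0, 0, 0], [1, 1, 1, 0], [1, 1, 0, 0]]
score_one = best_of_n(proposals[:1], target)
score_all = best_of_n(proposals, target)
```

Under this selection rule the best score never decreases as proposals are added, which is consistent with the reported trend of improving Dice with more samples.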

Looking ahead, the ProGiDiff framework represents a significant evolution in medical image segmentation by transitioning from deterministic pipelines to a more dynamic, diffusion-based generative model. This generative and language-driven approach aligns well with clinical workflows, allowing for the iterative refinement of segmentation proposals. However, the authors caution against ensembling too many generated proposals, as this can lead to diminishing returns, emphasizing the need to find an optimal balance between computational cost and segmentation quality.
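One simple way to ensemble several generated proposals is a per-pixel majority vote. The article does not specify the fusion rule the authors used, so the function below is an assumed illustration on flat binary masks rather than the study's method.

```python
def majority_vote(proposals):
    """Fuse binary masks: a pixel is foreground if more than half agree."""
    n = len(proposals)
    return [1 if 2 * sum(pixels) > n else 0 for pixels in zip(*proposals)]


# Three toy proposals for the same four-pixel image.
proposals = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [1, 1, 1, 0],
]
fused = majority_vote(proposals)
```

Each additional proposal requires another pass of the diffusion sampler, so the cost grows linearly with the number of proposals while each extra vote changes fewer pixels, which is one way to read the diminishing returns the authors describe.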

Ultimately, ProGiDiff’s ability to condition segmentation outputs through natural language prompts opens new avenues for enhancing control and interpretability in medical imaging. As the framework continues to demonstrate its transferability to various imaging modalities, it holds promise for broader applications across medical domains seeking to streamline workflows and improve diagnostic accuracy.
