Tencent has launched HunyuanImage 3.0-Instruct, a native multimodal model designed for accurate, instruction-driven image editing and generation. Released in late 2025, the model is fully open-sourced on Hugging Face and GitHub and builds on Tencent’s Hunyuan-A13B foundation. Tencent describes it as the largest open-source image generation Mixture-of-Experts (MoE) model to date.

At the heart of HunyuanImage 3.0-Instruct is a decoder-only Mixture-of-Experts (MoE) architecture with over 80 billion parameters. Only about 13 billion of those parameters are active per token during inference, because the model activates eight of its 64 experts for each token. This design provides high capacity while keeping per-token compute well below what a dense model of the same total size would need. The model operates within a unified autoregressive framework that handles both multimodal understanding and generation, integrating text and image modalities so it can reason over combined inputs.
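To make the "eight out of 64 experts" idea concrete, here is a minimal sketch of top-k expert routing for a single token. It is not Tencent's implementation: the function names, the random router logits, and the choice to renormalize the selected weights are all assumptions made for illustration. Only the expert counts and the parameter totals come from the figures above.

```python
import math
import random

NUM_EXPERTS = 64  # total experts, as stated in the article
TOP_K = 8         # experts activated per token, as stated in the article


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=TOP_K):
    """Pick the top_k experts for one token (hypothetical helper).

    Returns {expert_index: weight}, with weights renormalized to sum to 1.
    Renormalizing is a common choice, not a confirmed detail of this model.
    """
    probs = softmax(router_logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    mass = sum(probs[i] for i in chosen)
    return {i: probs[i] / mass for i in chosen}


# Stand-in router scores; a real router computes these from the token state.
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
weights = route_token(logits)

expert_fraction = TOP_K / NUM_EXPERTS  # share of experts used per token
param_fraction = 13 / 80               # approximate share of active parameters

print(f"experts used per token: {len(weights)} of {NUM_EXPERTS}")
print(f"expert fraction: {expert_fraction:.3f}")
print(f"active parameter fraction: {param_fraction:.3f}")
```

The active parameter share (roughly 16%) is somewhat higher than the expert share (12.5%). That gap is expected in MoE models in general, since parameters outside the routed experts, such as attention layers and embeddings, are used for every token.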

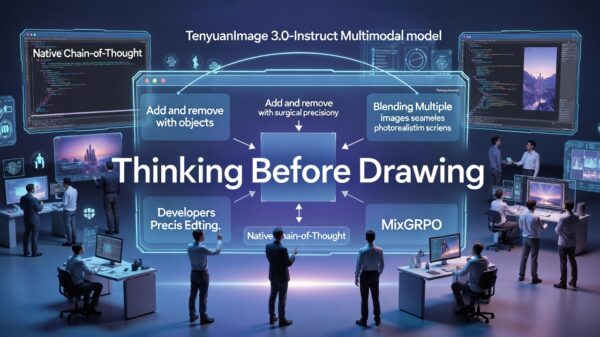

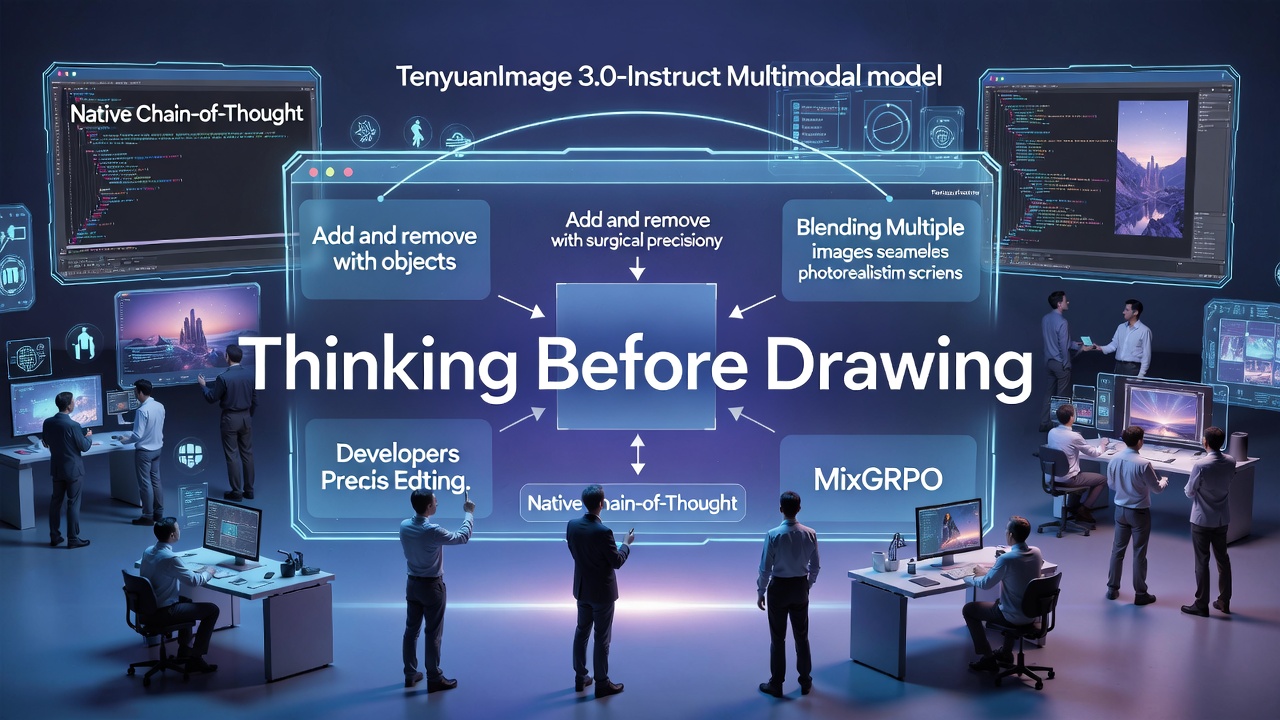

What distinguishes HunyuanImage 3.0-Instruct is its built-in reasoning process. The model employs a native Chain-of-Thought (CoT) schema during inference, allowing it to work through the user’s intent step by step before generating images. This is complemented by MixGRPO, a custom online reinforcement learning algorithm that optimizes for aesthetics, realism, and alignment while minimizing artifacts. During training, the model learns to convert abstract instructions into detailed visual outputs through explicit reasoning, resulting in stronger adherence to user intent, better preservation of unchanged regions, and fewer logical inconsistencies in generated images.
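The article does not describe how MixGRPO works internally, so the following is only a sketch of the group-relative advantage step that GRPO-style methods generally share: several candidate outputs for the same prompt are scored, and each score is normalized against its own group. The reward weights, the score names, and the example values are hypothetical and are not taken from Tencent's report.

```python
from statistics import mean, pstdev

# Hypothetical weights for the four objectives named in the article.
REWARD_WEIGHTS = {
    "aesthetics": 0.25,
    "realism": 0.25,
    "alignment": 0.25,
    "artifact_free": 0.25,
}


def combined_reward(scores):
    """Weighted sum of per-objective scores (each assumed to lie in [0, 1])."""
    return sum(REWARD_WEIGHTS[name] * scores[name] for name in REWARD_WEIGHTS)


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group of candidates for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four made-up candidate images generated for one prompt.
candidates = [
    {"aesthetics": 0.8, "realism": 0.7, "alignment": 0.9, "artifact_free": 0.9},
    {"aesthetics": 0.6, "realism": 0.6, "alignment": 0.5, "artifact_free": 0.7},
    {"aesthetics": 0.9, "realism": 0.8, "alignment": 0.4, "artifact_free": 0.8},
    {"aesthetics": 0.5, "realism": 0.4, "alignment": 0.6, "artifact_free": 0.3},
]

rewards = [combined_reward(c) for c in candidates]
advantages = group_relative_advantages(rewards)

for index, (reward, advantage) in enumerate(zip(rewards, advantages)):
    print(f"candidate {index}: reward={reward:.3f} advantage={advantage:+.3f}")
```

Candidates that score above their group's average receive a positive advantage and are reinforced, while those below it are discouraged. Because the comparison is relative, no separate value network is needed to judge whether a reward is good in absolute terms.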

HunyuanImage 3.0-Instruct excels particularly in precision editing. Users can add, remove, or replace objects while the rest of the scene remains intact. The model can modify fine details such as clothing, lighting, and expressions with minimal leakage into surrounding regions, and it can handle compound directives, such as restoring an old photograph while altering the subject’s age and attire. It also supports multi-image fusion, extracting and blending elements from several reference images into a cohesive, photorealistic composition. This capability supports creative workflows such as portrait collages and style transfers.

According to Tencent’s technical report and community evaluations, HunyuanImage 3.0 has demonstrated text-image alignment and visual quality that match or surpass leading closed-source models in blind human evaluations. It has earned a prominent position on leaderboards like LMArena for text-to-image generation, often ranking among the top open-source entries. In structured editing benchmarks, the model shows notable semantic consistency and realism, frequently competing with commercial systems such as Flux and Midjourney in controlled modification tasks. Although it does not come out ahead in every comparison, HunyuanImage 3.0 consistently ranks among the highest open-source image generation models, particularly in instruction-following and photorealism.

Tencent’s strategy appears to be geared toward building a comprehensive multimodal ecosystem. By open-sourcing the model’s weights, inference code, and detailed technical documentation under the Hunyuan Community License, the company is encouraging developers to create applications, refine variants, and integrate the model into creative workflows. Interested users can try HunyuanImage 3.0-Instruct through the official demo or via the Hugging Face and GitHub repositories, though running the full model locally demands significant computational resources.
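A rough back-of-the-envelope estimate shows why local use is demanding. The sketch below computes the memory needed just to hold the weights at several common numeric precisions, using the 80 billion parameter figure from the article. The precision list and the helper name are illustrative assumptions, and the estimate ignores activations, caches, and framework overhead, so real requirements would be higher.

```python
TOTAL_PARAMS = 80e9  # "over 80 billion" parameters, per the article


def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Common storage precisions; which ones the released checkpoints
# actually support is not stated in the article.
PRECISIONS = [
    ("fp32", 4.0),
    ("bf16/fp16", 2.0),
    ("int8", 1.0),
    ("4-bit", 0.5),
]

estimates = {name: weight_memory_gb(TOTAL_PARAMS, size) for name, size in PRECISIONS}

for name, gigabytes in estimates.items():
    print(f"{name:>10}: about {gigabytes:.0f} GB for weights")
```

Although only about 13 billion parameters are active per token, the router can select any expert, so all experts generally have to be loaded (or offloaded and swapped in) for inference. The sparse design reduces compute per token, not the total weight footprint.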

The emergence of HunyuanImage 3.0-Instruct reflects a shift toward reasoning-driven visual creation tools. By having the model reason explicitly about edits and compositions before generating them, Tencent is advancing controllable, high-fidelity image manipulation in open-source AI. The release is likely to benefit a wide range of users, from designers seeking precise edits to researchers studying multimodal reasoning.
