WASHINGTON, Feb. 10, 2026 /PRNewswire/ — The Association for Diagnostics & Laboratory Medicine (ADLM) has issued a position statement emphasizing the potential risks associated with the incorporation of artificial intelligence (AI) in laboratory medicine. Released today, the statement focuses particularly on the implications for historically marginalized patient demographics. To address these risks and harness the benefits of AI in healthcare, ADLM calls for Congress and federal agencies to modernize existing laboratory regulations and implement policies ensuring that AI clinical systems are both safe and effective.

Laboratory medicine is crucial to accurate diagnosis and patient care, and AI could enhance diagnostic precision, streamline laboratory workflows, and facilitate data-driven clinical decision-making. However, the accuracy of AI models depends on the quality and diversity of the data on which they are trained. ADLM raises significant concerns that AI models may replicate societal biases, leading to underestimated risk or misdiagnoses in marginalized populations. This problem arises from reliance on historical datasets that often underrepresent various racial, ethnic, and socioeconomic groups.

To mitigate bias within laboratory AI and ensure effective monitoring of technology that influences test interpretations and treatment decisions, ADLM makes several recommendations to the federal government. The association urges Congress to collaborate with federal agencies to update existing laboratory laws, such as the Clinical Laboratory Improvement Amendments (CLIA), so that they specifically cover AI systems. It also recommends that federal health agencies work alongside professional societies to develop consensus guidelines for the validation and verification of AI tools in laboratory settings. Finally, it calls on federal agencies to bolster initiatives that harmonize laboratory test results and standardize data reporting.

ADLM also encourages AI developers to work in tandem with regulators and healthcare organizations to foster data diversity and reduce bias within laboratory AI applications. Ensuring that clinical laboratories have access to necessary data and technical resources is vital for independent verification and validation of algorithm performance.

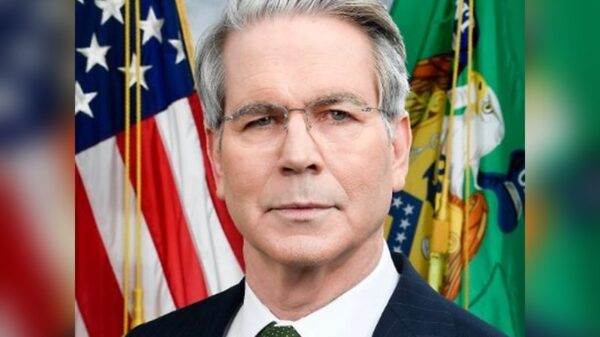

“Clinical laboratories are uniquely positioned to help develop and assess the integration of AI health tools into testing workflows and, most importantly, how they influence patient test results and health outcomes,” stated ADLM President Dr. Paul J. Jannetto. He emphasized the need for the federal government to leverage the expertise of laboratory medicine professionals to create regulations that promote innovation while ensuring transparent and consistent performance monitoring for this technology.

ADLM, dedicated to advancing health through laboratory medicine, comprises over 70,000 clinical laboratory professionals, physicians, research scientists, and industry leaders across 110 countries. This community spans a range of subdisciplines, including clinical chemistry, molecular diagnostics, and data science. Since its establishment in 1948, ADLM has advocated for scientific collaboration, knowledge sharing, and the creation of innovative solutions that enhance health outcomes. For more information about ADLM, visit www.myadlm.org.
