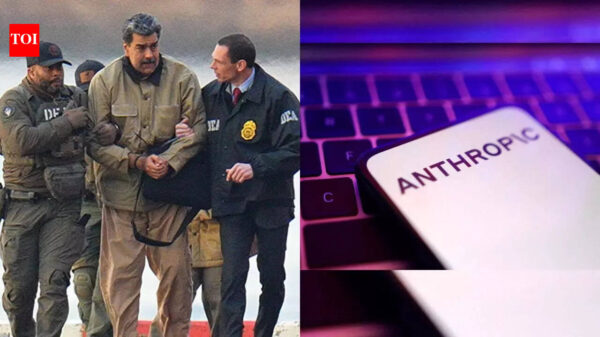

The U.S. Department of Justice recently filed a motion asserting that conversations between Bradley Heppner, a criminal defendant, and Anthropic’s Claude AI assistant do not qualify for attorney-client privilege. The motion, filed on February 6, 2026, in United States v. Heppner, asks the court to rule on whether privilege can attach to a user's exchanges with an AI tool. The answer could affect how professionals in many sectors, including technology and marketing, use such tools.

Heppner, who faces charges of securities fraud and related offenses in the Southern District of New York, created approximately 31 documents by querying Claude about his legal circumstances before his arrest on November 4, 2025. Federal prosecutors obtained these AI-generated documents during a search of Heppner’s Dallas home. The defense claimed that the materials were protected communications, prompting the government to seek a ruling that they can be used as evidence at the upcoming trial.

The prosecution presented three key arguments against the privilege claim. First, Claude is not a licensed attorney, and no lawyer was involved in creating the documents. Second, Anthropic’s terms of service expressly state that the service does not provide legal advice. Third, Heppner voluntarily used a third-party platform whose privacy policy permits data collection, human review, and potential disclosure to government authorities.

“The defendant appears to have directed legal and factual prompts at an AI tool, not his attorneys,” the government’s filing stated. The motion further noted that sharing unprivileged documents with legal counsel after their creation does not retroactively confer privilege protection.

This case raises essential questions about confidentiality expectations when utilizing commercial AI platforms. Heppner’s defense argued that he generated the AI documents expressly to seek legal advice and subsequently shared them with his attorneys. However, the defense confirmed that they did not instruct him to conduct searches using Claude, a distinction that is critical to the government’s argument regarding the work product doctrine.

Under this doctrine, materials prepared by or at the direction of legal counsel in anticipation of litigation are protected. Prosecutors maintain that this protection does not extend to independent research a layperson conducts with online tools, even if the findings are later discussed with an attorney. Because Heppner used Claude on his own, without guidance from counsel, the government argues the documents fall outside that protection.

Anthropic’s Constitution for Claude, published on May 9, 2023, instructs the AI to avoid giving specific legal advice and suggests that users consult a qualified attorney. Disclaimers such as these undermine arguments that Heppner sought legal counsel through the AI platform.

The government’s motion highlights the differences between AI interactions and communications that warrant privilege. Attorney-client privilege requires confidential communications between a client and an attorney made for the purpose of obtaining legal advice. Claude, which holds no law license and owes no professional duties to its users, does not meet these criteria.

Privacy expectations further complicate the privilege claim. The government argued that users of Claude and similar platforms implicitly accept reduced confidentiality when they use publicly accessible services, and it cited precedents holding that communications lose privilege when conducted through monitored channels that offer no assurance of privacy.

Prosecutors likened Heppner’s AI queries to conducting Google searches or researching at a library, asserting that neither activity creates privileged communications simply because the results are later shared with an attorney. “Only after this AI analysis was complete did the defendant share the AI output with his attorneys,” the filing noted, asserting that privilege should not apply in this context.

The trial is scheduled to begin on April 6, 2026, and prosecutors are seeking a ruling on the privilege question by March 9, when preliminary exhibit exchanges are due. The court’s decision will govern this case and may also have broader implications for how professionals across many fields use AI tools.

This case emerges as AI platforms increasingly permeate professional workflows. Marketing professionals routinely use Claude and similar tools for tasks like content creation and strategic development. Establishing a legal precedent in this instance could significantly influence organizational policies regarding AI tool selection and usage.

In addition to the immediate legal implications, the Heppner case reflects a larger regulatory landscape concerning data privacy and AI applications. As global regulators tighten scrutiny on personal data processing by AI systems, this case underscores the intersection of individual usage choices with platform data policies and legal protections.

The government’s motion is an early test of whether conversations with an AI system can be privileged, and the court’s ruling on it may set a precedent affecting many sectors. As AI capabilities evolve, established legal frameworks may need to be reevaluated, raising significant questions about how the technology fits within traditional definitions of professional confidentiality.
