The federal government has accepted business demands to pause “mandatory guardrails” over artificial intelligence (AI), as outlined in a long-awaited national plan released today. Originally intended to introduce stringent regulations amid widespread concern about the rapid evolution of AI technologies, the plan marks a significant pivot in governmental strategy.

The initiative, which began consultations in 2023, was initially spearheaded by former industry minister Ed Husic, who had flagged plans to develop ten “mandatory guardrails.” These proposed measures included requirements for high-risk AI developers to implement risk management plans, test systems both before and after deployment, create complaints mechanisms, share data following any adverse incidents, and allow third-party assessments of their records.

However, the government has decided against establishing a standalone AI act that would categorize technologies based on risk levels. Instead, the National AI Plan commits to using “strong existing, largely technology-neutral legal frameworks” alongside “regulators’ existing expertise” to manage AI in the short term. Beginning next year, the government will allocate $30 million to establish an AI safety institute tasked with monitoring AI development and advising industry and government on any responses that may be needed.

Business Concerns Over Regulatory Burdens

The Productivity Commission had previously recommended pausing the proposed AI guardrails until a thorough audit of existing legal frameworks could be conducted. The recommendation was intended to avoid stifling the projected $116 billion economic boost that AI technologies could provide. The rapid rise of generative AI technologies, such as OpenAI’s ChatGPT, has democratized access to AI capabilities, enabling everyday users and small businesses to use tools once reserved for large corporations.

Despite these advancements, increased accessibility raises significant concerns about the potential misuse of AI, including exploitation, fraud, and the dissemination of misleading information. In June, industry group DIGI, which represents major tech firms including Apple, Google, Meta, and Microsoft, urged that any new regulatory proposals begin with a comprehensive assessment of existing laws and seek ways to reduce regulatory complexity. DIGI emphasized that regulatory responses should build on current frameworks rather than introduce new legislation aimed solely at AI.

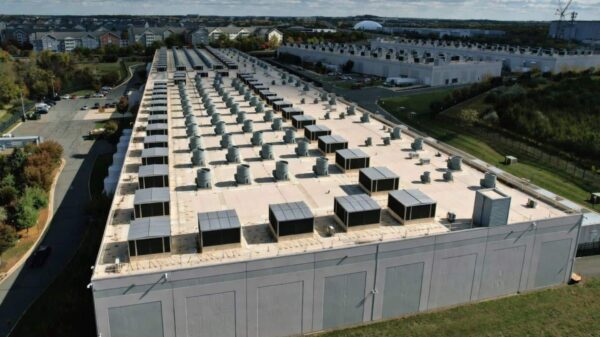

Treasurer Jim Chalmers indicated at a recent productivity roundtable that the government aims to regulate “as much as necessary but as little as possible.” The National AI Plan seeks to accelerate AI development and adoption through increased investments in data centers and training programs. Australia was already the second-largest recipient of investment in data centers last year, attracting $10 billion, with expectations that these facilities could account for 6 percent of total electricity demand by the end of the decade.

Alongside fostering investment, the plan highlights a tripling in demand for AI-skilled workers over the past decade. Industry Minister Tim Ayres stated that the plan is designed to ensure technology benefits Australians rather than the other way around. He declared, “This plan is focused on capturing the economic opportunities of AI, sharing the benefits broadly, and keeping Australians safe as technology evolves.”

However, the government faces criticism. Greens senator David Shoebridge accused it of prioritizing “corporate profits over community rights,” pointing to the contrast between the government’s move to limit children’s access to social media over mental health concerns and its simultaneous easing of regulations on AI technologies. He labeled the government’s approach “cooked.”

Instead of pursuing a standalone AI act, the government intends to collaborate with states and territories to clarify existing consumer protection laws and review how copyright laws apply to AI. The newly established AI safety institute will play a critical role in identifying gaps in the current management of AI technologies. The plan aims to apply fit-for-purpose legislation, enhance oversight, and address concerns regarding national security, privacy, and copyright, so that AI systems operate responsibly and accountably.

As the National AI Plan moves forward, it leaves open the possibility of more robust regulatory measures in the future. The plan states, “Where necessary, we will take decisive action to ensure safety and accountability as new technologies and frontier AI systems emerge.” Husic has previously cautioned that relying on a patchwork of existing laws could lead to a “whack-a-mole” regulatory approach, potentially disadvantaging businesses and deterring investment.

See also Trump Administration Considers Executive Order to Ban State AI Regulations Amid Bipartisan Backlash

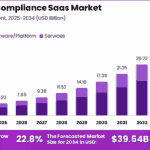

Trump Administration Considers Executive Order to Ban State AI Regulations Amid Bipartisan Backlash AI Compliance SaaS Market Reaches $5.07B, Projected to Grow 22.8% Annually Through 2034

AI Compliance SaaS Market Reaches $5.07B, Projected to Grow 22.8% Annually Through 2034 Houston County Adopts New Policy Manual, Signs $43K UKG HR Contract, and Backs Road Grants

Houston County Adopts New Policy Manual, Signs $43K UKG HR Contract, and Backs Road Grants Nkechi Ogbonna Urges African Governments to Regulate AI Amid Rising Misinformation Risks

Nkechi Ogbonna Urges African Governments to Regulate AI Amid Rising Misinformation Risks AI Regulation Mirrors Social Media’s Path, Tackles Bias and Transparency Issues Ahead

AI Regulation Mirrors Social Media’s Path, Tackles Bias and Transparency Issues Ahead