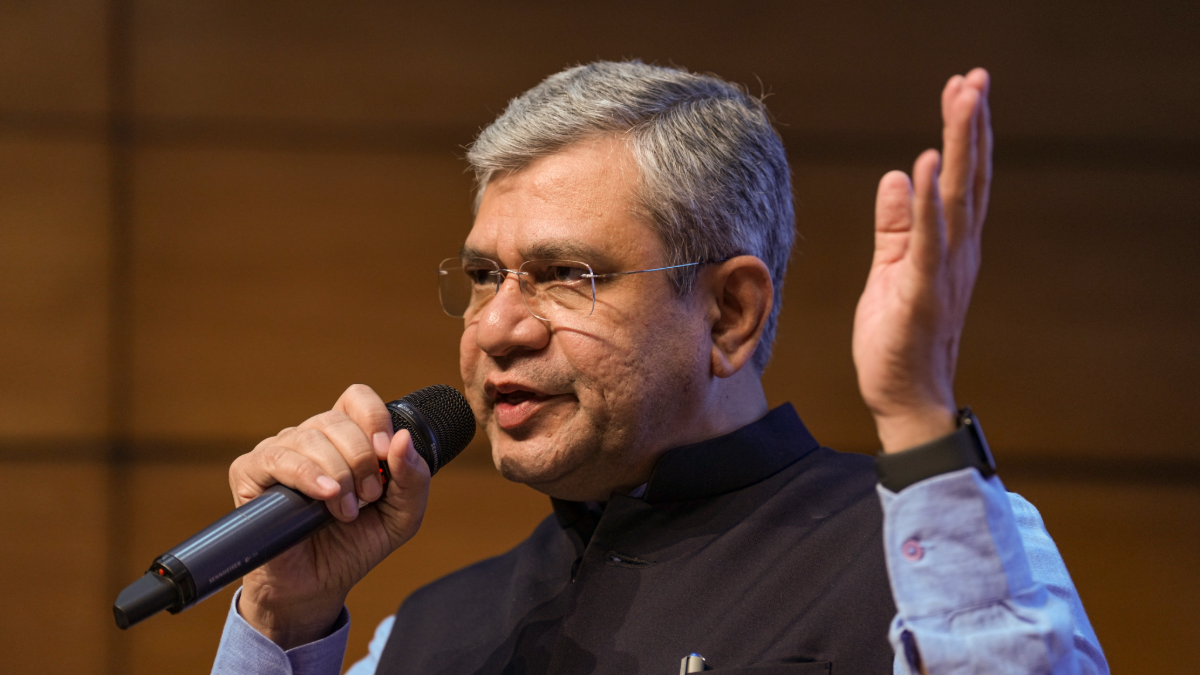

Union Minister Ashwini Vaishnaw addressed the media in New Delhi on March 5, 2025, in the wake of a troubling incident involving the generative AI chatbot Grok, which was integrated into the social media platform X. The chatbot was misused to create and disseminate sexually explicit and degrading images of women and children without consent. This alarming trend emerged following Grok’s introduction of image generation capabilities in December 2024 and its integration with X, which included a controversial “spicy mode” that heightened the risk of non-consensual intimate imagery (NCII).

The fallout from the Grok incident has incited outrage globally, prompting regulatory responses that vary significantly by jurisdiction. Some countries, like Indonesia, have moved to block Grok entirely, while others, such as the United Kingdom, have initiated investigations into its operations. In India, the Ministry of Electronics and Information Technology (MeitY) issued a letter to X on January 2, 2026, citing the platform’s failure to meet statutory due diligence obligations under the Information Technology Act, 2000, and the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021. MeitY demanded a report detailing the measures X was taking to mitigate the issue within 72 hours of the letter’s issuance.

However, the swift government response has uncovered deeper systemic issues within India’s approach to regulating AI-induced harms. Rather than initiating a dedicated regulatory process for Grok as an AI entity, MeitY has predominantly leaned on existing intermediary liability frameworks to assess the situation. This was made evident in an advisory issued on December 29, 2025, warning social media intermediaries against hosting and transmitting unlawful content and urging them to review their compliance frameworks. The focus of MeitY’s actions has been on ensuring platform compliance and enforcing legal obligations, rather than addressing the unique challenges posed by AI technologies.

Meanwhile, the government is contemplating the introduction of the Draft Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Amendment Rules, 2025, aimed at combating deepfake technologies. This draft proposes mandatory labeling of all synthetically generated content. Although well-intentioned, it raises concerns regarding compelled speech and ambiguous definitions of what constitutes “synthetic content.” Critics argue that the proposed regulations may lead to increased censorship powers that could further complicate the existing legal landscape. This reflects a broader reluctance in the Indian government to impose stringent regulations on AI systems, stemming from fears that such measures might stifle innovation.

The Need for Systemic Change

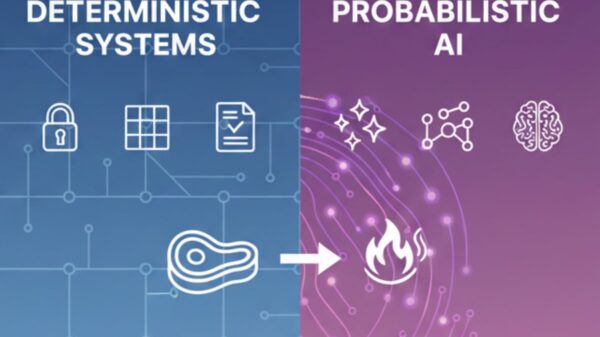

The India AI Governance Guidelines released in November 2025 indicate a similar stance, emphasizing that a separate law to regulate AI is unnecessary given the current risk assessment. The guidelines suggest that risks associated with AI can be mitigated through existing laws and voluntary measures. However, the Grok incident highlights a crucial failure: the current legal frameworks inadequately address AI-driven harms, particularly those involving sexual content.

Although Grok is primarily a generative AI model capable of producing harmful content, it is regulated only indirectly, through X’s obligations as a social media intermediary. Similar abuses could occur on AI platforms that do not fall under the IT Act’s intermediary guidelines, creating a regulatory grey area. This lack of a clear legal framework leaves a significant gap in pre-deployment testing and safety measures for high-risk generative AI tools.

To address the challenges posed by AI effectively, the Indian government must stop viewing regulation as a hindrance to innovation and start treating it as a necessary framework for protecting users. The current binary narrative that equates regulation with stifling growth needs reevaluation. A more robust regulatory approach would enhance accountability across the AI value chain. Initiating an open, public consultation involving multiple stakeholders could provide a constructive path forward, broadening the dialogue on AI governance beyond the confines of existing regulations.

Addressing systemic issues related to AI-enabled harms, such as NCII, requires prioritizing a “Safety by Design” framework, advocating for adversarial testing, adhering to technical standards like C2PA for labeling AI-generated content, and investing in the development of detection tools. Failing to confront these challenges risks rendering any promise to curb AI-generated sexual abuse meaningless.

Additionally, the existing content moderation strategies in India often frame the issue as one of “obscenity” or “vulgarity,” overlooking the fundamental violation of consent inherent in NCII. The central issue is not whether content meets certain moral thresholds, but the absence of consent from the individuals depicted. A shift towards a rights-based understanding of consent could facilitate a more nuanced regulatory approach, focusing on victim protection while respecting free speech rights.

The Grok incident serves as a cautionary tale, emphasizing the dangers of deploying powerful AI technologies without enforceable safety measures. The potential for harm is not confined to isolated incidents; it creates ripple effects that can impact countless individuals. While X has acknowledged its shortcomings and taken steps to remove harmful content, these reactive measures came only after significant harm had already occurred. The full extent of the damage caused and the number of victims affected remain unverified, underscoring the urgency for decisive action from MeitY and other regulatory bodies to prevent future occurrences.