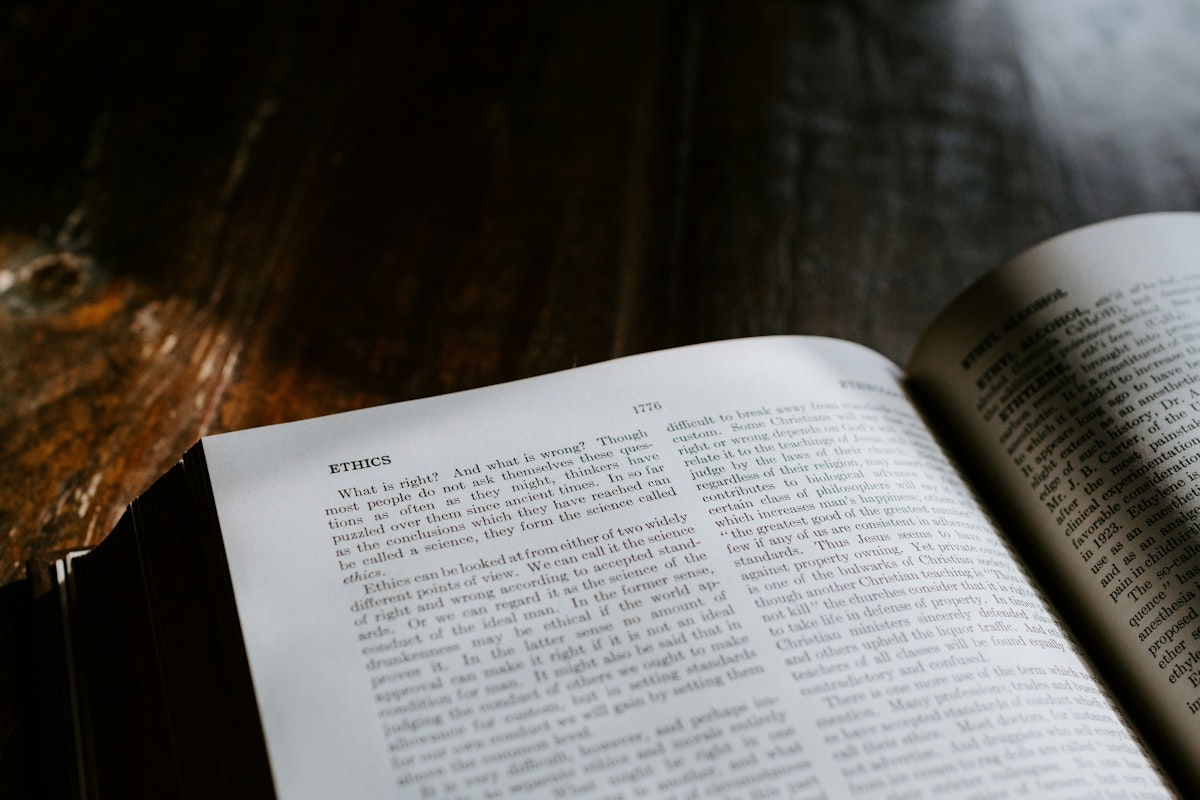

As global frameworks for artificial intelligence (AI) governance evolve, a common thread emerges: the imperative to protect individuals from harm while ensuring trust in communication. Governments, standards bodies, and international institutions are coalescing around principles that prioritize human dignity, autonomy, and the ethical deployment of technology. This shift from theoretical ideals to actionable guidelines becomes increasingly pressing in a landscape where synthetic and manipulated media challenge public perception of authenticity and intent.

Foremost among these frameworks is the UNESCO Recommendation on the Ethics of Artificial Intelligence, adopted by all UNESCO Member States in 2021. This framework emphasizes that AI systems should enhance, rather than undermine, individual agency, pointing out the risks of deception, coercion, and misuse of power. The OECD AI Principles reinforce this approach, defining trustworthy AI as accountable, fair, robust, and aligned with human rights. Significantly, they expand the definition of harm to include not just technical failures but also psychological stress and a loss of trust that can arise from AI-enabled manipulation.

Critical to these discussions is the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, which underscores the importance of human agency and responsibility as fundamental design requirements. It warns against creating systems that deceive users or obscure accountability, an issue that is particularly relevant in the context of deepfakes. Such synthetic media not only open new avenues for malicious use but also exploit trust and authority, often leading individuals to act on false premises.

The convergence of these frameworks has resulted in a clear consensus: AI systems must not enable deception that undermines human agency. Ethical guidelines increasingly categorize such deception as an unacceptable harm rather than an inevitable consequence of technological advancement. In this regard, AI ethics is framed not merely as a matter of policy but as a fundamental aspect of risk management.

The NIST AI Risk Management Framework exemplifies this trend, identifying misuse, deception, and unintended behaviors as foreseeable risks that must be managed throughout the AI lifecycle. It notably rejects the notion that responsibility for detecting AI-generated deception should fall solely on users, emphasizing the necessity of human-centered design.

International standards bodies like ISO and IEC extend this logic, focusing on robustness, reliability, and governance controls that organizations can audit and enforce. While these standards do not prescribe specific technologies, they make it clear that organizations are expected to design systems that preemptively mitigate harm. This shift means that ethical behavior must be demonstrated through concrete operational mechanisms rather than mere statements of intention.

Trust is a cornerstone across these AI ethics frameworks, especially where communication plays a critical role in decision-making. The Council of Europe’s Framework Convention on Artificial Intelligence links AI governance to the preservation of democracy and the rule of law, recognizing that AI can erode public trust when it facilitates the impersonation of trusted individuals. The implications of deepfakes extend beyond the mere spread of misinformation; they can undermine the authenticity and authority necessary for reliable communication.

The World Economic Forum advocates for the protection of authenticity and authority, stressing that systems must ensure that users can accurately assess legitimacy in a digital landscape fraught with deception. The OECD further highlights the need for AI systems to be robust and secure, as these characteristics significantly affect public trust.

As these ethics frameworks evolve, they impose increasingly enforceable obligations on organizations. For instance, the Council of Europe AI Convention establishes binding requirements for states to prevent AI use that jeopardizes human rights or democracy. Similarly, the EU Artificial Intelligence Act lays out disclosure requirements for deepfake content, signaling a regulatory shift away from tolerating unmanaged deception.

Even non-binding frameworks exert significant influence, guiding government procurement and regulatory practices while prompting enterprises to adopt them as benchmarks for due diligence. This confluence of frameworks creates a shared expectation: organizations must develop AI systems that foster public trust.

In today’s environment, AI ethics is no longer a passive inquiry into organizational values; it demands rigorous accountability for the systems deployed. For organizations involved in enterprise, public-sector, or mission-critical communications, tools that actively guard against deception are essential. Implementing solutions like deepfake detection not only enhances oversight but also mitigates foreseeable risks and preserves trust in the channels upon which these organizations rely.
