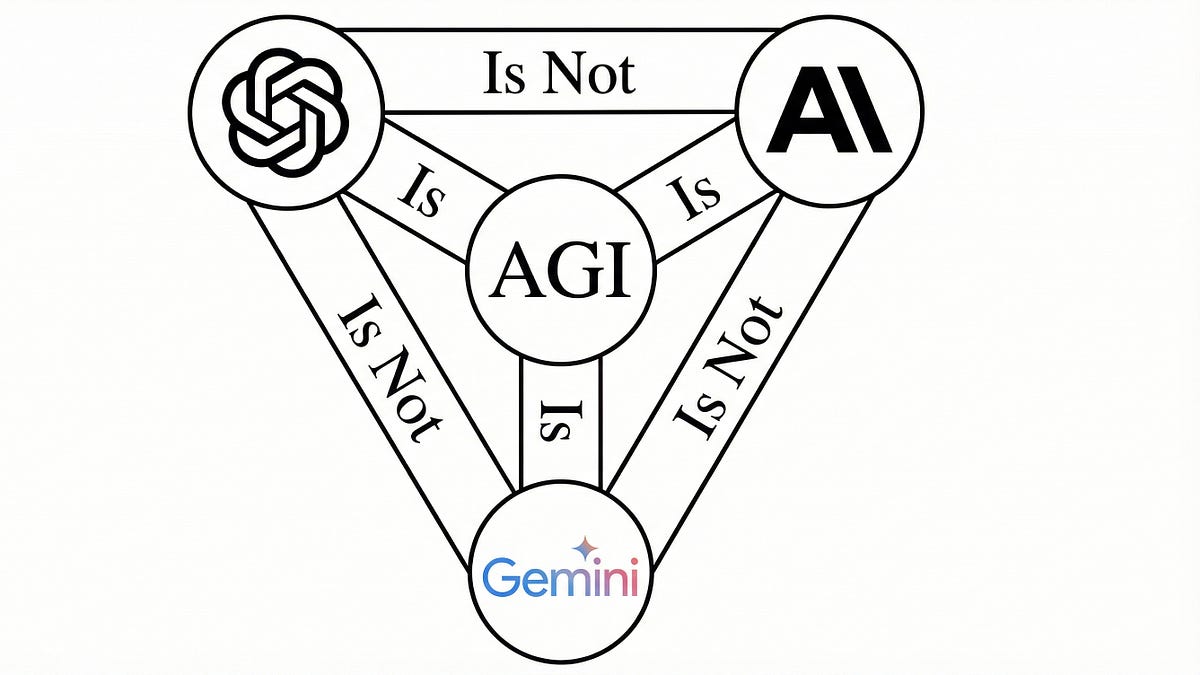

Nathan Lambert has shared insights into his AI stack as of early January 2026, detailing a mix of models that each show distinct strengths on specific tasks. He underscores the need to use multiple models to get the best results. The approach reflects a broader trend in the tech community: as the capabilities of AI systems diverge, relying on a single model is becoming less viable.

Leading Lambert’s toolkit is GPT 5.2 Thinking / Pro, which he uses primarily for research tasks. He treats it as a reliable way to retrieve information, whether he is verifying details for his upcoming book on Reinforcement Learning from Human Feedback (RLHF) or recalling intricate facts from academic papers. Lambert noted that he typically uses the Thinking variant for its accuracy, while the Pro version remains unparalleled for research. He likened it to what Simon Willison calls a “research goblin,” a term that captures its efficacy in academic work. Lambert expressed some frustration with the non-Thinking variants, which he finds less reliable than other options on the market.

Another key tool in Lambert’s repertoire is Claude Opus 4.5, which he uses for basic coding questions and data visualization. He appreciates the model’s refreshing tone and useful feedback, and notes that it is faster than other models, the GPT systems in particular. Its performance has earned his trust for day-to-day coding tasks, marking a shift from the earlier preference for GPT 4.5.

For broader inquiries and multimodal tasks, Lambert relies on Gemini 3 Pro, which he describes as useful for explaining well-documented concepts despite occasional issues with search accuracy. He finds the research functionalities of Gemini lacking compared to GPT, leading him to conclude that while it is a valuable tool, it may not instill the same confidence in retrieving recent or research-related information.

Lambert also utilizes Grok 4 roughly once a month to track specific AI news or trends he recalls from social media. Despite recognizing its intelligence, he admits it does not have uniquely compelling features that would integrate it more regularly into his workflow.

In the realm of coding, Lambert primarily engages with Claude Opus 4.5, benefiting from its capabilities in managing complex data processing and assisting with substantial edits for his RLHF book. His experience with Claude Opus has led him to advocate for its capabilities, particularly in the context of recent advancements that have made it a strong contender against established models like OpenAI’s Codex. He noted that the excitement surrounding Claude Code, powered by Opus 4.5, is reflective of a significant leap in performance that many in the tech community are echoing.

As Lambert navigates this multi-model environment, he emphasizes the value of marginal intelligence over speed, particularly for tasks that are only beginning to be handled effectively by AI. He anticipates that as these models continue to evolve, the emphasis on speed will grow as a competitive factor within the marketplace. Lambert’s commitment to utilizing multiple models illustrates a shift in the industry where capabilities are unevenly distributed, necessitating a more strategic approach to model selection.

The future of AI models appears promising yet complex. Lambert’s perspective suggests that as capabilities mature, users will need to stay flexible and adapt as the tools improve. With models as capable as GPT 5.2 Thinking and Claude Opus 4.5 available, users may soon find themselves not just optimizing for performance but also choosing among a diverse set of options to suit their needs. This evolution may challenge the notion of loyalty to any single provider, urging practitioners to experiment with a variety of emerging tools to maximize their productivity.
