Researchers have uncovered significant biases in artificial intelligence (AI) models used for cancer diagnosis, revealing that performance differs among patients based on self-reported gender, race, and age. The study, which highlights an emerging concern in medical AI, was led by Kun-Hsing Yu, an associate professor of biomedical informatics at the Blavatnik Institute at Harvard Medical School (HMS) and an assistant professor of pathology at Brigham and Women’s Hospital.

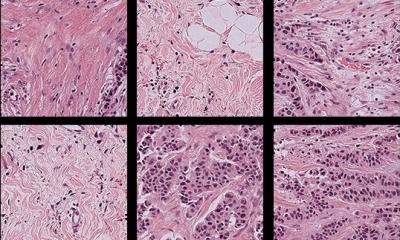

The team evaluated four AI pathology models designed for diagnosing various cancers, using a diverse repository of pathology slides encompassing 20 different cancer types. All four models showed biased performance, giving less accurate diagnoses for certain demographic groups. For instance, the models struggled to differentiate lung cancer subtypes in African American and male patients, and breast cancer subtypes in younger individuals. Such disparities appeared in approximately 29% of the diagnostic tasks the models performed.

“We found that because AI is so powerful, it can differentiate many obscure biological signals that cannot be detected by standard human evaluation,” Yu stated. Even so, the team was surprised to find bias in pathology AI, since reading a slide is typically regarded as an objective task. “Reading demographics from a pathology slide is thought of as a ‘mission impossible’ for a human pathologist,” Yu added.

The researchers theorized that unequal representation in training data might contribute to these biases. Specifically, the models were trained on samples in which certain demographic groups were overrepresented, so the AI struggled to make accurate diagnoses for samples from underrepresented groups defined by race, age, or gender. But that explanation was incomplete. “The problem turned out to be much deeper than that,” Yu noted.

Even when sample sizes were comparable, the models’ performance varied significantly across demographic groups. The researchers found that differential disease incidence could explain this phenomenon. Some cancers are more prevalent in specific populations, which lets the models become better at diagnosing them in those groups. The same dynamic makes it harder for the models to accurately diagnose cancers in populations where they are less common.
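To make the idea of a performance gap concrete, the sketch below tabulates accuracy separately for each demographic group and reports the spread between the best and worst group. It is an illustration only, not the study's evaluation method or the FAIR-Path framework; the group names, subtype labels, and records are made up, and the function names are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return accuracy per group.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def largest_gap(per_group):
    """Difference between the best and worst per-group accuracy."""
    return max(per_group.values()) - min(per_group.values())


# Made-up toy data: both groups have the same number of samples,
# yet the hypothetical model is less accurate on group_b.
records = [
    ("group_a", "subtype_1", "subtype_1"),
    ("group_a", "subtype_2", "subtype_2"),
    ("group_a", "subtype_1", "subtype_1"),
    ("group_a", "subtype_2", "subtype_1"),
    ("group_b", "subtype_1", "subtype_2"),
    ("group_b", "subtype_2", "subtype_2"),
    ("group_b", "subtype_1", "subtype_2"),
    ("group_b", "subtype_2", "subtype_2"),
]

per_group = accuracy_by_group(records)
gap = largest_gap(per_group)
print(per_group, gap)
```

In this toy example the two groups are the same size, so the gap cannot be blamed on sample counts alone, which mirrors the point above that balanced data does not by itself guarantee equal performance.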

In response to these biases, the team developed a framework known as FAIR-Path, designed to mitigate bias within AI pathology models. The framework aims to enhance fairness in AI applications in medicine with minimal effort. Yu emphasized the importance of counteracting AI bias in healthcare, given its potential to impact diagnostic accuracy and patient outcomes.

The implications of this research are significant, as AI continues to play an increasingly prominent role in healthcare decision-making. As healthcare providers and institutions integrate AI into diagnostic processes, understanding and addressing biases in these technologies will be crucial for ensuring equitable care. Yu’s findings may pave the way for improvements not only in cancer pathology but also in various other medical AI applications.

Looking ahead, the ongoing challenge will be to refine these AI models to ensure they perform equitably across diverse populations. As researchers continue to explore the complexities of AI in medicine, the development of frameworks like FAIR-Path could prove essential in creating more inclusive and accurate diagnostic tools, ultimately enhancing patient care and outcomes.
