Generative artificial intelligence (GenAI) is emerging as a transformative force in various fields, particularly in academia and research, though caution is urged amid its rapid evolution. Hongliang Xin, a professor of chemical engineering at Virginia Tech, presents a balanced view on the technology, emphasizing its potential while acknowledging the risks associated with its misuse. Xin argues that meaningful human oversight is essential to harness the capabilities of GenAI effectively, especially as it begins to play a critical role in complex decision-making processes.

Xin’s work in catalysis science illustrates the practical applications of AI in accelerating discoveries related to energy and environmental challenges. By leveraging AI’s ability to analyze extensive datasets and automate intricate tasks, researchers can significantly expedite scientific progress. This is particularly vital in fields like materials science, where AI has the potential to identify new compounds that could, for instance, capture carbon dioxide or purify water more efficiently.

The concept of autonomous agents in AI is not new; it has been explored since the 1960s. However, the current iteration of this technology is marked by advancements in large language models (LLMs), which allow these systems to understand human intent and break tasks into manageable components. This newfound autonomy enables AI to perform complex computational workflows that would take humans significantly longer to complete, presenting opportunities for innovation previously thought unattainable.

The capabilities of AI are exciting, and Xin warns against an exclusive focus on its risks. He stresses that collaboration between humans and machines can lead to breakthroughs that neither could achieve alone. For example, AI’s strength in data analysis complements human creativity and ethical judgment, creating a partnership that can propel scientific inquiry forward.

Harnessing AI responsibly in research

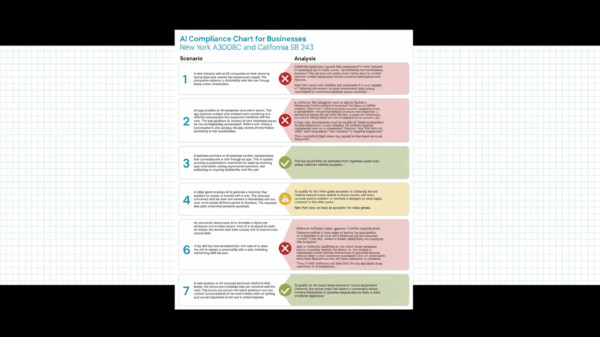

The responsible use of AI begins with clarity of purpose. Xin emphasizes the importance of defining specific goals and boundaries before integrating AI into research workflows. Critical questions must be addressed, such as: What problem are we trying to solve? What data will be utilized? Which decisions will remain under human control? By establishing these parameters, researchers can mitigate the potential for misuse.

Upholding ethical standards requires both internal safeguards, such as designing systems that respect operational limits, and external measures, such as institutional governance frameworks. Xin also anticipates a need for “anti-AI” systems, tools that detect harmful behavior in AI models, and compares them to the spam filters already built into email systems.

Amid this technological landscape, fears surrounding agentic AI often surface. Critics worry about the potential for machines to replace human researchers. However, Xin argues that such a scenario is unrealistic, as AI and humans learn in fundamentally different ways. While AI excels in pattern recognition, it often lacks the contextual understanding and common sense that characterize human thought processes. Thus, fostering a collaborative mindset is essential; AI should complement, not compete with, human skills.

As research becomes increasingly reliant on AI, collective action is necessary. No single laboratory, company, or university can navigate this transformation alone. Shared standards in ethics, reproducibility, and data literacy are imperative for responsible AI integration. Researchers must not only know how to prompt AI systems but also how to critically evaluate their outputs, ensuring that verification and transparency remain non-negotiable.

Looking ahead, Xin believes that the future of scientific research will hinge on the expansion of tools available for inquiry. Generative and agentic AI will enable exploration on a scale previously unimaginable, unlocking insights from data that would take humans a lifetime to analyze. However, the effectiveness of these tools ultimately relies on the wisdom of their users. By treating AI as a collaborator rather than a competitor, humanity has the potential to address pressing global challenges such as climate change and public health crises, provided that ethical governance keeps the technology from exacerbating existing societal problems.

In conclusion, as AI technology continues to evolve, it can amplify human purpose without replacing the human mind. The collaboration between human intelligence and machine learning is poised to redefine the landscape of research, making it not only automated but augmented, showcasing the remarkable potential of this partnership in advancing human knowledge and capability.
