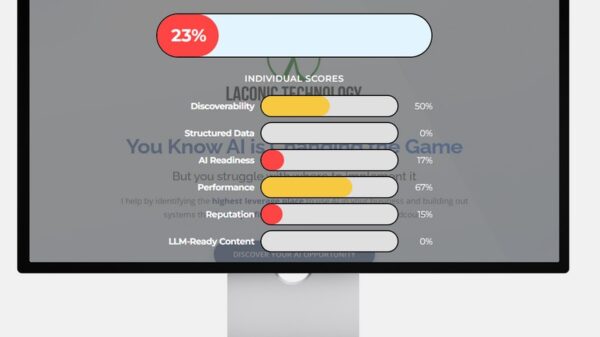

Researchers at the Georgia Institute of Technology have reported new results in **federated optimization** for **stochastic variational inequalities** (VIs), a problem class that has drawn growing attention in recent years. The convergence rates known for federated VIs still lag behind the best-known bounds for federated convex optimization. The team’s recent work aims to narrow this gap by establishing improved **convergence rates** for federated VIs.
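For readers who want the formal statement, the following is a standard textbook formulation of a federated stochastic VI. It is not quoted from the paper, and the symbols $F$, $F_m$, $\mathcal{Z}$, $\mathcal{D}_m$, $M$, and $L$ are notation assumed here for illustration.

$$
\text{find } z^\star \in \mathcal{Z} \ \text{ such that } \ \langle F(z^\star),\, z - z^\star \rangle \ge 0 \quad \text{for all } z \in \mathcal{Z},
\qquad
F(z) = \frac{1}{M} \sum_{m=1}^{M} F_m(z), \quad F_m(z) = \mathbb{E}_{\xi \sim \mathcal{D}_m}\big[ F_m(z; \xi) \big].
$$

Here each of the $M$ clients can only query noisy samples $F_m(z; \xi)$ of its own operator. The operator $F$ is called monotone and $L$-smooth when, for all $z, z' \in \mathcal{Z}$,

$$
\langle F(z) - F(z'),\, z - z' \rangle \ge 0
\qquad \text{and} \qquad
\| F(z) - F(z') \| \le L \, \| z - z' \|.
$$

Convex minimization is the special case $F = \nabla f$, and a convex-concave saddle-point problem $\min_x \max_y f(x, y)$ corresponds to $F(x, y) = (\nabla_x f(x, y),\, -\nabla_y f(x, y))$.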

The study revisits the classical **Local Extra SGD** algorithm, which is widely used for solving variational inequalities. Through a refined analysis, the researchers show that the algorithm admits tighter guarantees for general smooth and monotone variational inequalities. They also identify a significant limitation of Local Extra SGD: excessive client drift, in which each client's local iterates move toward its own solution between communication rounds, which can degrade the quality of the averaged model.
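To make the structure of Local Extra SGD concrete, here is a minimal sketch of the usual pattern: each client runs several local extragradient steps (an extrapolation step followed by an update step), and the server then averages the client iterates. This is an illustrative reading of the algorithm's name, not the paper's exact pseudocode; the function name `local_extra_sgd`, its parameters, and the toy bilinear problem are all assumptions made for this example.

```python
import numpy as np

def local_extra_sgd(operators, z0, step_size, local_steps, rounds,
                    noise_std=0.0, seed=0):
    """Illustrative Local Extra SGD sketch (hypothetical helper, not the paper's code).

    operators: list of callables, one per client, each mapping z -> F_m(z).
    noise_std: standard deviation of Gaussian noise added to every operator
               query, used here to mimic stochastic oracle access.
    """
    rng = np.random.default_rng(seed)
    z_server = np.asarray(z0, dtype=float)
    for _ in range(rounds):
        client_iterates = []
        for F in operators:
            z = z_server.copy()  # every client starts from the server iterate
            for _ in range(local_steps):
                # Extrapolation step: look ahead using a noisy operator value.
                g = F(z) + noise_std * rng.standard_normal(z.shape)
                z_half = z - step_size * g
                # Update step: move from z using the operator at the look-ahead point.
                g_half = F(z_half) + noise_std * rng.standard_normal(z.shape)
                z = z - step_size * g_half
            client_iterates.append(z)
        # Communication round: the server averages the client iterates.
        z_server = np.mean(client_iterates, axis=0)
    return z_server

# Toy problem (an assumption for this example): client m holds the bilinear
# saddle operator F_m(z) = J (z - c_m), so the averaged VI is solved by mean(c_m).
J = np.array([[0.0, 1.0], [-1.0, 0.0]])
centers = [np.array([1.0, 0.0]), np.array([0.0, 2.0]), np.array([-1.0, 1.0])]
operators = [lambda z, c=c: J @ (z - c) for c in centers]

z_out = local_extra_sgd(operators, z0=np.zeros(2), step_size=0.2,
                        local_steps=5, rounds=200)
print("distance to solution:", np.linalg.norm(z_out - np.mean(centers, axis=0)))
```

In this toy problem every client shares the same linear part `J`, so averaging introduces no client drift and the noiseless run converges to the mean of the centers. With operators that differ more substantially across clients, the averaged iterate is generally biased away from the true solution, which is the drift effect the researchers describe.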

In response, the researchers propose a new algorithm, the **Local Inexact Proximal Point Algorithm with Extra Step** (LIPPAX). According to the study, LIPPAX mitigates client drift while delivering improved guarantees in several regimes, including **bounded Hessian**, **bounded operator**, and **low-variance** settings. These results suggest that LIPPAX could make federated optimization for VIs more robust than approaches based on Local Extra SGD.

The implications of this research extend beyond theoretical improvements. As federated learning gains traction in privacy-sensitive applications such as healthcare and finance, where raw user data stays on client devices, the ability to mitigate client drift becomes increasingly important. Algorithms like LIPPAX could improve both the performance and the reliability of federated systems, allowing more efficient training without centralizing user data.

The researchers also extend their results to **federated composite variational inequalities**, establishing improved convergence guarantees in this broader setting and showing that their methods apply beyond the basic smooth and monotone case.
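As a point of reference, one common way to write a composite VI is shown below. This is a standard formulation rather than a quotation from the paper, whose exact definition may differ; the operator $F$ and the regularizer $g$ are notation assumed here.

$$
\text{find } z^\star \ \text{ such that } \ \langle F(z^\star),\, z - z^\star \rangle + g(z) - g(z^\star) \ge 0 \quad \text{for all } z,
$$

where $F$ is a monotone operator and $g$ is a convex, possibly nonsmooth function. Choosing $g$ as the indicator function of a convex set $\mathcal{Z}$ recovers the constrained VI over $\mathcal{Z}$, and choosing $g(z) = \lambda \| z \|_1$ gives a sparsity-regularized variant.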

Taken together, the results mark progress in federated optimization and set the stage for further work on federated VIs. As industries continue to explore decentralized learning systems, advances such as those presented by the Georgia Institute of Technology team could support more effective and secure machine learning applications.
