The increasing autonomy of artificial intelligence is raising concerns about decision-making transparency and accountability, especially as these systems begin to influence real-world outcomes. A research team from Old Dominion University and the University of Oulu, including Eranga Bandara, Tharaka Hewa, and Ross Gore, has introduced an architecture designed to improve both explainability and responsibility in AI systems. The architecture runs multiple AI models that independently process the same information and generate candidate solutions, which exposes areas of uncertainty and disagreement. A dedicated “reasoning agent” then consolidates these outputs, enforcing predefined policies and mitigating biases to produce well-supported, auditable decisions. The approach is a step toward AI systems that are not only powerful and scalable but also demonstrably responsible and understandable.

Research surrounding Large Language Models (LLMs) has been rapidly expanding, particularly in the realms of applications, improvements, and explainability. A notable focus is on AI’s role in healthcare, tackling diagnoses for conditions such as Amyotrophic Lateral Sclerosis (ALS), PTSD, and dental diseases, alongside advancements in digital mental health. Additional investigations are exploring how AI can enhance security and efficiency in 5G and emerging 6G networks, with some efforts integrating blockchain and NFTs. The theme of multi-agent systems, powered by LLMs, continues to emerge, indicating a growing interest in constructing more complex and autonomous AI solutions. The inclusion of Explainable AI (XAI) research highlights the increasing necessity of understanding how AI models arrive at decisions, particularly in sensitive domains like text generation and healthcare.

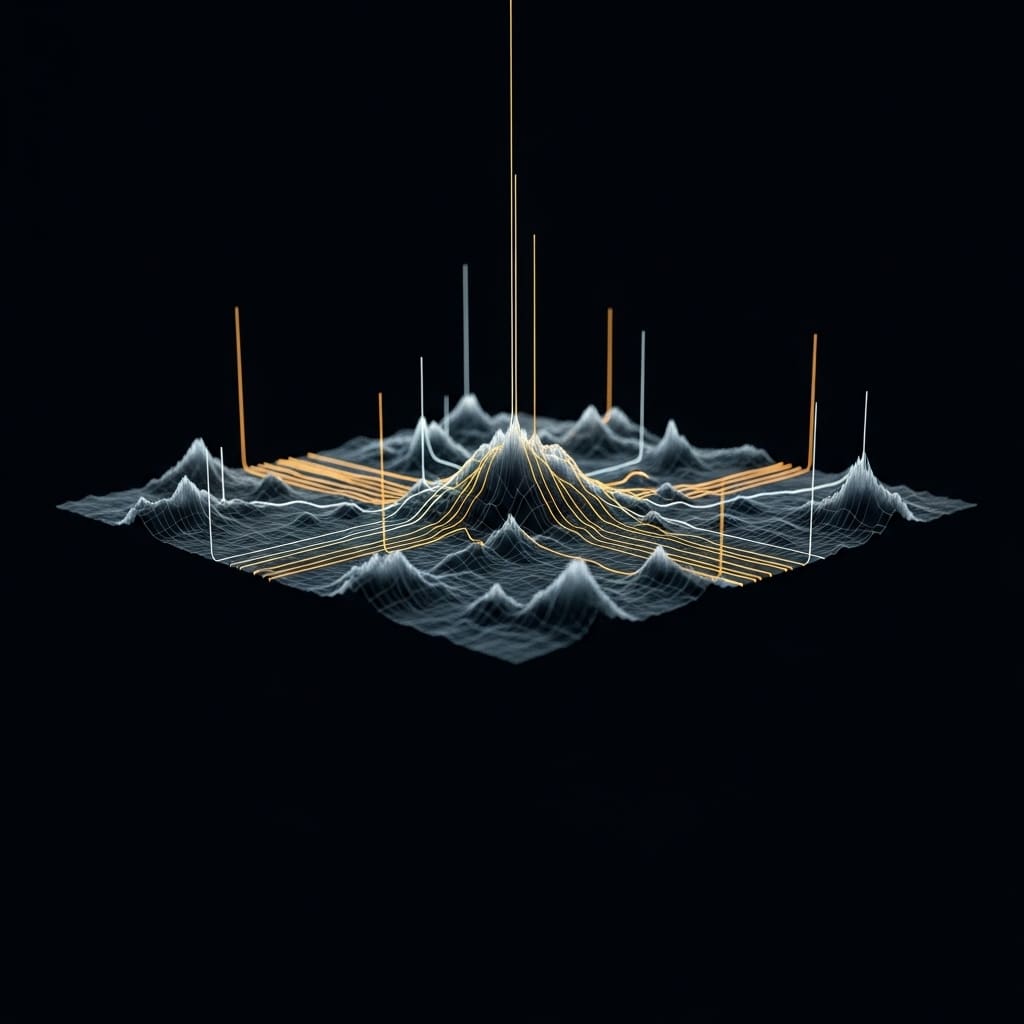

The research team has engineered an agentic architecture that rethinks how autonomous systems are built. The framework brings together multiple LLMs and Vision Language Models (VLMs) that operate as a consortium, each independently generating outputs from the same input. Because the models work separately, the system explicitly reveals uncertainties and disagreements among them. Rather than relying on basic statistical aggregation, the architecture uses a dedicated reasoning agent to perform structured consolidation of the outputs. This agent enforces predefined policy constraints and addresses potential hallucinations or biases, so that the final decision is both auditable and grounded in evidence from the model consortium. Intermediate outputs are preserved, allowing the reasoning process to be inspected and the models to be compared against one another.
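The flow described above (fan out one input to several models, keep every intermediate output, filter by policy, then hand the survivors to a reasoning step) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the model functions, the `policy` check, and the `reasoner` callable are all hypothetical stand-ins, and in a real system the reasoner would presumably be an LLM call rather than the trivial stub shown here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

# Hypothetical stand-in for a real LLM/VLM call: prompt in, answer out.
ModelFn = Callable[[str], str]
# Hypothetical policy check: returns a rejection reason, or None if allowed.
PolicyFn = Callable[[str], Optional[str]]
# Hypothetical reasoning step: consolidates the surviving candidates.
ReasonerFn = Callable[[str, Dict[str, str]], str]


@dataclass
class AuditRecord:
    """Everything needed to inspect a decision after the fact."""
    prompt: str
    candidates: Dict[str, str] = field(default_factory=dict)  # all raw outputs
    rejected: Dict[str, str] = field(default_factory=dict)    # model -> reason
    decision: str = ""


def run_consortium(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Query every model independently with the same input."""
    return {name: fn(prompt) for name, fn in models.items()}


def consolidate(prompt: str, candidates: Dict[str, str],
                policy: PolicyFn, reasoner: ReasonerFn) -> AuditRecord:
    """Apply the policy to each candidate, then let the reasoner decide."""
    record = AuditRecord(prompt=prompt, candidates=dict(candidates))
    allowed: Dict[str, str] = {}
    for name, answer in candidates.items():
        reason = policy(answer)
        if reason is None:
            allowed[name] = answer
        else:
            record.rejected[name] = reason
    record.decision = reasoner(prompt, allowed) if allowed else "NO_DECISION"
    return record


def demo_policy(answer: str) -> Optional[str]:
    # Assumed example constraint: the system may describe, not prescribe.
    return "contains treatment advice" if "prescribe" in answer.lower() else None


def demo_reasoner(prompt: str, allowed: Dict[str, str]) -> str:
    # Stub only: report agreement, or surface the disagreement explicitly.
    distinct = sorted({a.strip().lower() for a in allowed.values()})
    return distinct[0] if len(distinct) == 1 else "UNCERTAIN: " + " | ".join(distinct)


demo_models: Dict[str, ModelFn] = {
    "model_a": lambda p: "benign",
    "model_b": lambda p: "Benign",
    "model_c": lambda p: "malignant; prescribe drug X",
}

demo_prompt = "classify lesion"
demo_record = consolidate(demo_prompt, run_consortium(demo_prompt, demo_models),
                          demo_policy, demo_reasoner)
print(demo_record.decision, demo_record.rejected)
```

The record keeps the rejected candidate and the reason for its rejection alongside the final decision, which is the property the article calls auditability.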

The architecture changes how autonomous systems reason, plan, and execute complex tasks, aiming for scalability while adhering to the principles of responsible AI. It coordinates LLMs, VLMs, and external services within a framework geared toward accountability and explainability. By generating diverse candidate outputs from a common input, the system exposes uncertainty and disagreement between models, which contributes directly to the explainability of the decision-making process. The central reasoning agent synthesizes these outputs, discarding any unsafe or unverifiable content to yield a single, consolidated result. This makes decisions traceable back to their original input sources and makes the system more resilient to single-model failures.
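To make "exposing disagreement" and "resilience to single-model failures" concrete, the sketch below records a failed model instead of aborting the run and computes a simple disagreement score over the models that did answer. The article does not say which metric, if any, the authors use, so the score here (one minus the share of the most common answer) is an assumption chosen for illustration, as are all function and model names.

```python
from collections import Counter
from typing import Callable, Dict, Tuple

ModelFn = Callable[[str], str]  # hypothetical stand-in for an LLM/VLM call


def query_all(prompt: str, models: Dict[str, ModelFn]
              ) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Query each model; a failure is logged and does not stop the others."""
    outputs: Dict[str, str] = {}
    failures: Dict[str, str] = {}
    for name, fn in models.items():
        try:
            outputs[name] = fn(prompt)
        except Exception as exc:  # broad on purpose: any model error is recorded
            failures[name] = repr(exc)
    return outputs, failures


def disagreement(outputs: Dict[str, str]) -> float:
    """Assumed metric: 0.0 when all answers match, approaching 1.0 as they diverge."""
    if not outputs:
        return 1.0  # no evidence at all is treated as maximal uncertainty
    counts = Counter(answer.strip().lower() for answer in outputs.values())
    top_count = counts.most_common(1)[0][1]
    return 1.0 - top_count / len(outputs)


def failing_model(prompt: str) -> str:
    raise TimeoutError("model endpoint did not respond")


signal_models: Dict[str, ModelFn] = {
    "model_a": lambda p: "WiFi",
    "model_b": lambda p: "wifi",
    "model_c": lambda p: "Bluetooth",
    "model_d": failing_model,
}

signal_outputs, signal_failures = query_all("classify RF signal", signal_models)
print(signal_outputs, signal_failures, disagreement(signal_outputs))
```

A high score could be used to route a case to human review or to ask the reasoning agent for a more detailed justification; whether the published system does either is not stated in the article.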

Ultimately, this research presents a promising architecture for autonomous systems, known as the Responsible and Explainable Agent Architecture. By coordinating multiple LLMs and VLMs, alongside dedicated tools and services, the team demonstrates that independent outputs can be reliably consolidated through a central reasoning agent. The method improves the reliability and trustworthiness of complex, multi-step tasks by highlighting areas of uncertainty and disagreement between models, yielding a more transparent decision-making process than traditional autonomous systems. Evaluations across several applications, including news podcast generation, medical image analysis, and RF signal classification, support the architecture’s robustness and its effectiveness in improving accountability and explainability.
