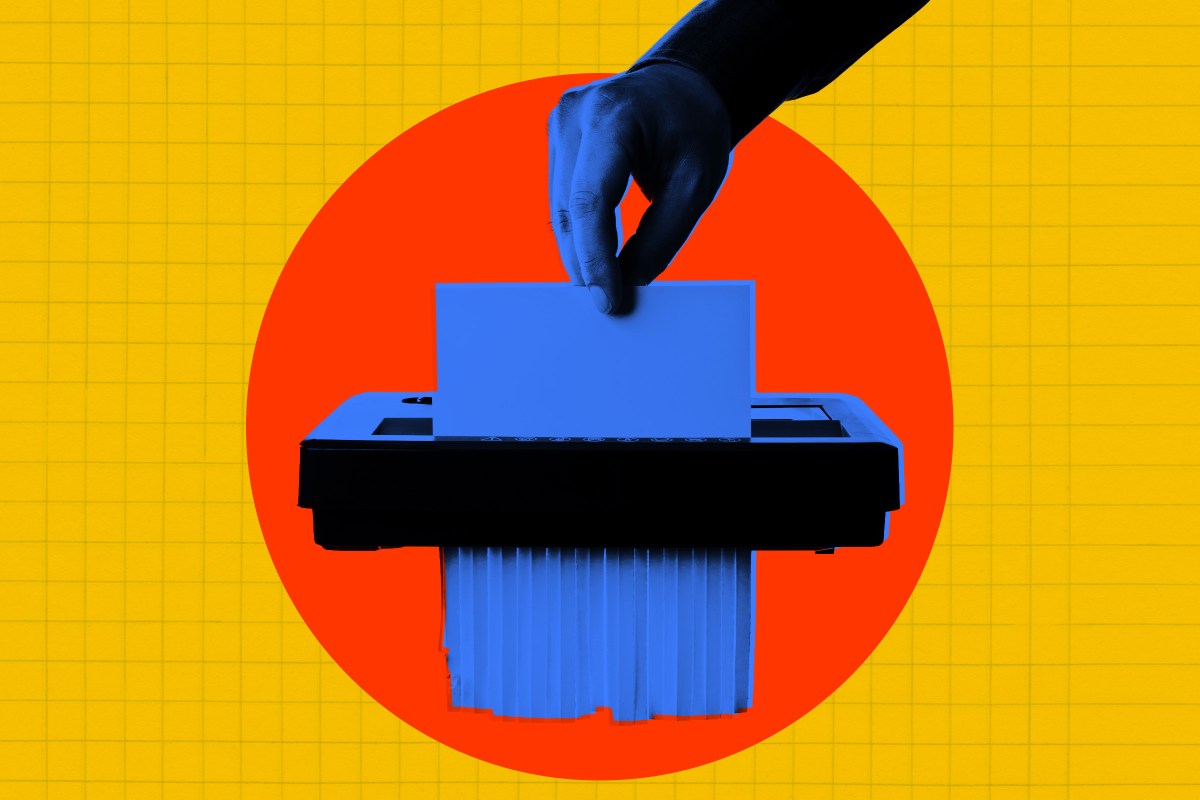

AI researchers are sounding alarms over the proliferation of low-quality academic papers produced with the help of large language models, warning that the integrity of the field is at risk. They argue that as more people enter AI research, many prioritize quantity over quality, producing a flood of subpar studies that buries high-caliber work.

Hany Farid, a professor of computer science at UC Berkeley, described the current state of AI research as a “frenzy.” In an interview with The Guardian, he expressed concern that the overwhelming volume of publications has made it increasingly difficult for meaningful contributions to be recognized. “So many young people want to get into AI,” Farid said. “It’s just a mess. You can’t keep up, you can’t publish, you can’t do good work, you can’t be thoughtful.”

Farid’s critique was prompted by the case of Kevin Zhu, an AI researcher who claims to have published 113 papers in a single year. Farid questioned how one person could engage meaningfully with that many technical papers. “I can’t carefully read 100 technical papers a year,” he wrote in a LinkedIn post, underscoring how implausible it is to contribute substantively to more than 100 papers in the same span.

Zhu, who recently completed his bachelor’s degree at UC Berkeley, founded a program called Algoverse for high school and college students. Participants pay $3,325 for a 12-week online course during which they are expected to submit work to academic conferences. Many of these students appear as coauthors on Zhu’s papers, which accounts for much of his publication count.

NeurIPS, one of the major events in the AI research calendar, has seen submissions soar from fewer than 10,000 papers in 2020 to more than 21,500 this year. Prolific authors such as Zhu, who is presenting 89 papers at the conference this week alone, contribute to that growth. The influx of submissions has led NeurIPS to enlist PhD students to help with the review process.

Farid called Zhu’s output a “disaster,” arguing that Zhu could not have made meaningful contributions to so many papers. He likened the trend to “vibe coding,” a term for using AI tools to generate software without carefully reviewing or testing the result.

When approached by The Guardian, Zhu did not say whether AI tools were used to write his papers, but he stated that his teams used “standard productivity tools such as reference managers, spellcheck, and sometimes language models for copy-editing or improving clarity.” His answer leaves open how much of the research itself was shaped by AI and what relying heavily on such tools means for the quality of the work.

The introduction of AI tools into academic research has sparked ongoing debate, because the same tools that speed up writing can also introduce serious errors. Fabricated citations and nonsensical figures have already surfaced in papers, raising doubts about whether peer review can catch them. Some authors have even embedded hidden text in their papers in an attempt to manipulate AI-assisted reviewers.

The growing reliance on AI for research output has created a troubling paradox: as AI tools improve, so does their potential to help the field undermine itself. This raises critical questions about the future of AI research, particularly for early-career scientists whose work may be buried under a deluge of hastily produced studies that lack rigor and authenticity.

Farid, a seasoned expert in the field, highlighted the challenges faced by both students and established researchers. “You have no chance, no chance as an average reader to try to understand what is going on in the scientific literature,” he said. “Your signal-to-noise ratio is basically one. I can barely go to these conferences and figure out what the hell is going on.”

As the field grapples with the consequences of its own advances, the future of AI research remains uncertain. The struggle to maintain quality amid a flood of mediocre output may ultimately determine the discipline’s credibility in the years to come.
