China’s GPU cloud market is rapidly consolidating around a few domestic leaders, primarily Baidu and Huawei, as access to advanced Nvidia accelerators remains restricted due to ongoing U.S. export controls. A report from Frost & Sullivan indicates that these two companies together command more than 70% of the market for cloud services built on domestically designed AI chips, a significant shift aimed at vertically integrating AI hardware, software, and cloud services. Meanwhile, a surge of AI chip start-ups is racing to public markets to secure funding for the next phase of domestic silicon development.

This consolidation follows U.S. export restrictions that have forced Chinese firms to rework their AI infrastructure strategies. Since late 2022, companies have had to rely on lower-grade GPUs or entirely domestic alternatives, producing a GPU cloud market that diverges significantly from its Western counterparts. Architectural choices and performance trade-offs in this environment are shaped as much by geopolitics as by engineering.

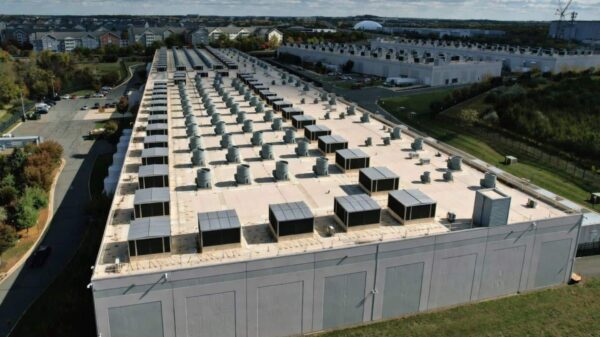

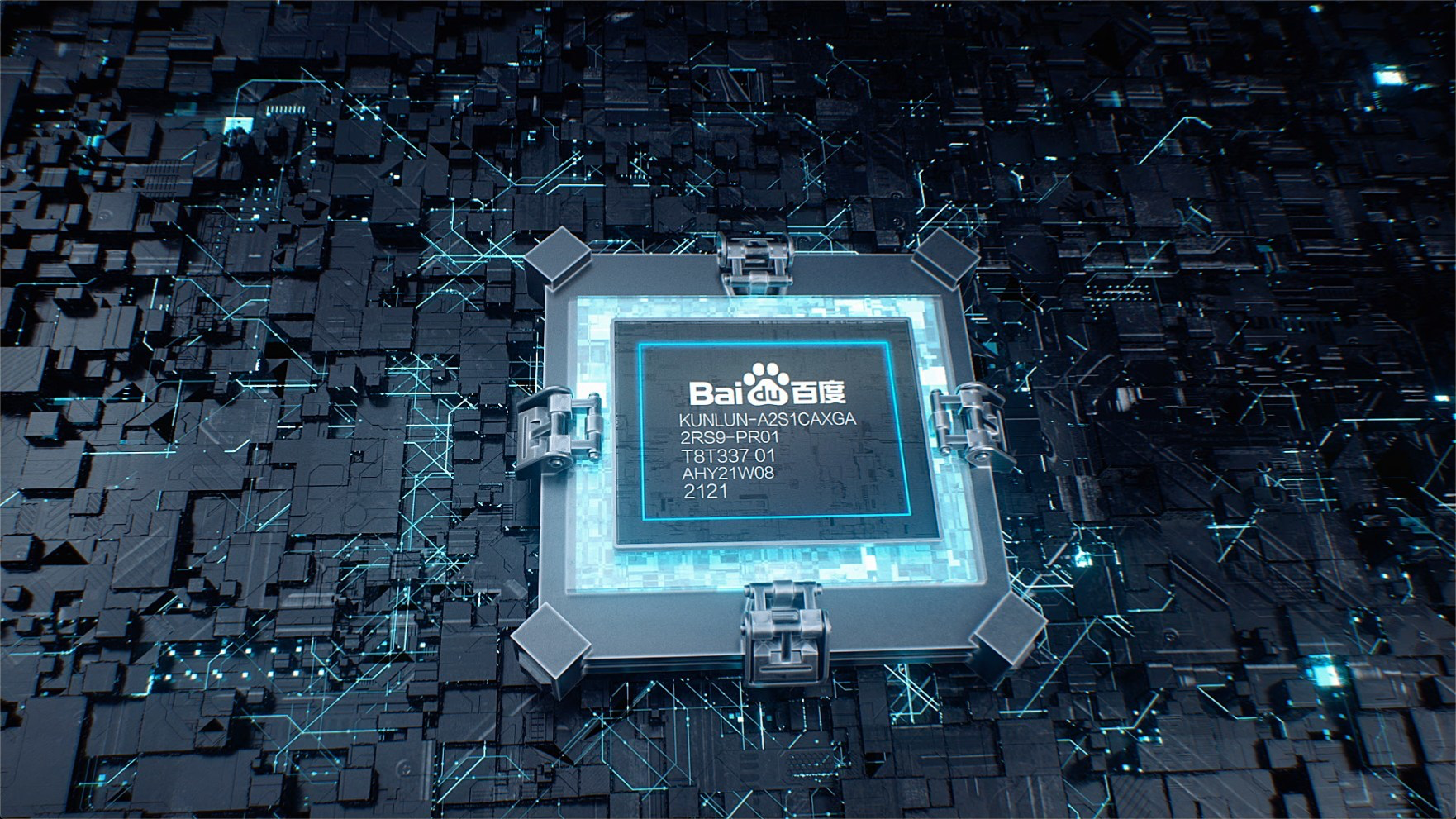

The strategy behind the success of Baidu and Huawei is comprehensive control over the stack. Rather than acting as neutral cloud providers, both companies design their own AI accelerators and optimize their own software frameworks for them. Baidu’s AI cloud is centered on its Kunlun accelerator line, now in its third generation. In April 2025, Baidu announced that it had launched a 30,000-chip training cluster powered entirely by Kunlun processors, capable of training foundation models with hundreds of billions of parameters while simultaneously supporting numerous enterprise workloads. Tight integration of Kunlun hardware with Baidu’s PaddlePaddle framework allows the company to compensate for the lack of Nvidia’s CUDA ecosystem through vertical optimization.

Huawei takes a similar approach, albeit on a larger scale. Its Ascend accelerator family is increasingly used by state-owned enterprises and government-backed cloud initiatives. Huawei’s latest configuration, based on Ascend 910 series chips, emphasizes dense clustering and high-speed interconnects to offset the per-chip performance gap with Nvidia’s H100-class GPUs. The company has been candid about the trade-offs involved, treating cluster-level scaling as its path forward while advanced manufacturing processes remain out of reach.

Both companies are also exploring heterogeneous cluster designs that mix accelerators from different generations and vendors within a single training or inference pool. This strategy reduces reliance on any single chip supplier but raises software complexity, requiring custom orchestration layers to manage varying performance characteristics. Baidu and Huawei are among the few firms in China with the engineering resources such systems demand, which further solidifies their market position.
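To make the orchestration problem concrete, here is a minimal sketch of one thing such a layer might do: divide a training batch across mixed accelerator pools in proportion to their throughput, so that slower chips do not stall a synchronous step. It does not describe Baidu’s or Huawei’s actual software. The pool names, the `AcceleratorPool` type, and the throughput figures are hypothetical placeholders, not benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceleratorPool:
    name: str               # hypothetical label, not a real product SKU
    devices: int            # number of chips in this pool
    samples_per_sec: float  # assumed per-device throughput, not a benchmark


def split_batch(pools, global_batch):
    """Split global_batch across pools in proportion to aggregate throughput."""
    capacities = [p.devices * p.samples_per_sec for p in pools]
    total = sum(capacities)
    if total <= 0:
        raise ValueError("pools must have positive total throughput")
    # Floor each proportional share, then hand the leftover samples
    # to the pools with the largest fractional remainders.
    exact = [global_batch * c / total for c in capacities]
    shares = [int(x) for x in exact]
    leftover = global_batch - sum(shares)
    order = sorted(range(len(pools)),
                   key=lambda i: exact[i] - shares[i], reverse=True)
    for i in order[:leftover]:
        shares[i] += 1
    return {p.name: s for p, s in zip(pools, shares)}


def step_time(pools, shares):
    """Estimated step time in seconds: the slowest pool gates a synchronous step."""
    return max(shares[p.name] / (p.devices * p.samples_per_sec) for p in pools)


# Illustrative mix of two chip generations plus a second vendor.
pools = [
    AcceleratorPool("new_gen", devices=8, samples_per_sec=100.0),
    AcceleratorPool("old_gen", devices=8, samples_per_sec=50.0),
    AcceleratorPool("other_vendor", devices=4, samples_per_sec=50.0),
]
shares = split_batch(pools, 1400)
print(shares, step_time(pools, shares))
```

A naive even split would leave the faster pool idle while the slower pools finish. Weighting by throughput keeps the estimated per-step time roughly equal across pools, which is the kind of bookkeeping that makes heterogeneous clusters more complex to run.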

Domestic accelerators such as Huawei’s Ascend 910B and Baidu’s Kunlun II have narrowed the performance gap with Nvidia’s A100 and H100, although challenges remain in efficiency and memory subsystems. Huawei claims competitive compute density for the Ascend 910B, yet production has been limited by yield constraints. The upcoming Ascend 910C, designed with a multi-die architecture, improves performance but increases power consumption, complicating operations in large deployments.

Meanwhile, Kunlun II is comparable to Nvidia’s A100 in specific workloads, while the newer P800 emphasizes scalability and cloud integration over peak performance metrics. Baidu has been cautious in its performance claims, highlighting instead system-level throughput and service availability for enterprise clients.

As Baidu and Huawei fortify their positions in the cloud sector, a growing ecosystem of AI chip designers is turning to public markets to fund new silicon. Over the last year, regulators in China have expedited approvals for tech IPOs, with companies like Moore Threads and MetaX achieving high valuations despite limited market share. This investor enthusiasm is fueled by Beijing’s prioritization of semiconductors and AI infrastructure, which is channeling capital into these areas.

While several new chipmakers are tailoring their architectures for cloud deployment, the lack of a dominant software platform akin to CUDA may hinder broader adoption. Many of these start-ups depend on established cloud providers like Baidu and Huawei for market access, inadvertently reinforcing the dominance of these GPU cloud leaders.

China’s GPU cloud market is evolving into a fundamentally different landscape from those in the U.S. and Europe. Instead of vying primarily for access to the latest Nvidia hardware, Chinese providers are competing on integration depth, local supply security, and alignment with government policy goals. Tightening control over GPU cloud infrastructure enhances Baidu’s and Huawei’s bargaining power throughout the AI value chain, positioning them not just as service providers but as gatekeepers for domestic AI compute.

In the short term, this model is unlikely to displace Nvidia’s global dominance. Chinese firms still favor Nvidia accelerators when available, as the performance-per-watt advantage of U.S.-designed GPUs remains significant. Within China, however, the growth of GPU clouds will increasingly be driven by domestic solutions, even where they are less efficient, because their supply can be controlled locally.
