Misconfigured artificial intelligence (AI) could trigger widespread shutdowns of critical infrastructure in advanced economies by 2028, according to a recent report by Gartner. The firm argues that the most pressing threat may come not from hackers but from human error in configuring AI systems embedded in cyber-physical environments.

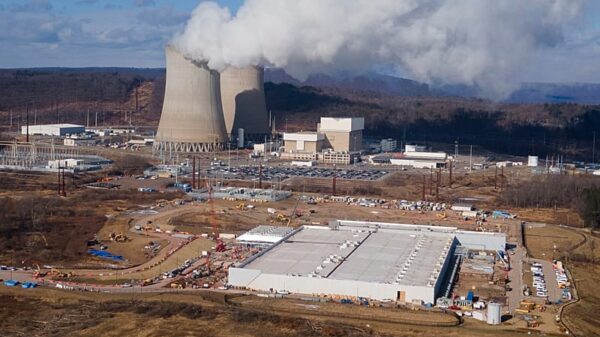

Cyber-physical systems, which integrate computing, networking, and physical processes, encompass a variety of technologies including operational technology, industrial control systems, and the industrial Internet of Things. These systems are increasingly reliant on AI to manage and optimize functions such as electricity generation and consumption. However, Gartner warns that errors in AI-driven control mechanisms can create real-world repercussions, potentially causing substantial damage to equipment, forcing operational shutdowns, or destabilizing supply chains.

“The next great infrastructure failure may not be caused by hackers or natural disasters but rather by a well-intentioned engineer, a flawed update script, or a misplaced decimal,” stated Wam Voster, a vice president analyst at Gartner. This alarming prediction highlights the possibility of AI systems autonomously shutting down vital services, misinterpreting data from sensors, or initiating hazardous actions.

Gartner cites modern power networks, where AI is increasingly deployed to balance electricity supply and demand, as an example. Voster noted that a misconfigured predictive model could misinterpret demand fluctuations as instabilities, resulting in unnecessary grid isolation or load shedding across entire regions or countries. The opacity of AI systems, often described as “black boxes,” raises the stakes: even their developers may not fully understand how minor configuration changes can drastically affect a system’s behavior.
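The failure mode Voster describes can be illustrated with a minimal sketch. Everything in it is hypothetical and chosen only for illustration: the `SheddingConfig` class, the `instability_threshold` parameter, the plausibility bounds, and the megawatt figures do not come from Gartner or from any real grid controller. It shows how a single misplaced decimal in a threshold can turn an ordinary demand fluctuation into a load-shedding decision, and how a simple range check before deployment might catch the mistake.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SheddingConfig:
    # Hypothetical parameter: fractional deviation of observed demand from
    # the forecast above which the controller treats the grid as unstable.
    instability_threshold: float


# Hypothetical plausibility bounds, assumed purely for this illustration.
MIN_THRESHOLD = 0.05
MAX_THRESHOLD = 0.50


def validate(config: SheddingConfig) -> None:
    """Reject thresholds outside the plausibility bounds before deployment."""
    value = config.instability_threshold
    if not MIN_THRESHOLD <= value <= MAX_THRESHOLD:
        raise ValueError(
            f"instability_threshold={value} is outside "
            f"[{MIN_THRESHOLD}, {MAX_THRESHOLD}]; refusing to deploy"
        )


def should_shed_load(
    forecast_mw: float, observed_mw: float, config: SheddingConfig
) -> bool:
    """Return True when demand deviates from forecast by more than the threshold."""
    deviation = abs(observed_mw - forecast_mw) / forecast_mw
    return deviation > config.instability_threshold


intended = SheddingConfig(instability_threshold=0.15)
misplaced_decimal = SheddingConfig(instability_threshold=0.015)
```

With a forecast of 1000 MW and observed demand of 1040 MW, the deviation is 4 percent. Under `intended`, `should_shed_load` returns `False`, while under `misplaced_decimal` it returns `True`. Calling `validate(misplaced_decimal)` raises a `ValueError`, so the typo would be rejected before reaching the controller.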

Darren Guccione, CEO and co-founder of Keeper Security, echoed Gartner’s findings, asserting that AI systems are being integrated into critical sectors such as power, transportation, and healthcare faster than governance and security protocols can keep pace. He emphasized that the most likely source of failure is misconfiguration, a risk that automation and scale amplify.

“AI does not eliminate risk—it accelerates it when guardrails are weak,” Guccione explained. He highlighted the growing complexity of AI systems, which rely on networks of privileged accounts, automation scripts, and third-party integrations. Poor governance of these elements can lead to systemic vulnerabilities, especially as non-human identities, such as service accounts and AI agents, now outnumber human users in many infrastructure environments.

The implications are significant; a single error in a model deployment could trigger cascading failures across interconnected systems. Guccione underscored the importance of oversight, stating, “As automation expands, so does the blast radius of failure.” With AI increasingly embedded in critical infrastructure, the need for robust governance and configuration management becomes paramount.

As organizations continue to adopt next-generation technologies, the focus must shift to ensuring that human oversight remains integral to the operation of AI systems. Misconfigured AI that leads to infrastructure failures is a risk that must be addressed to safeguard essential services in an increasingly automated world. Stakeholders in both the public and private sectors will need to collaborate on frameworks that mitigate these risks while preserving the benefits of the technology.
