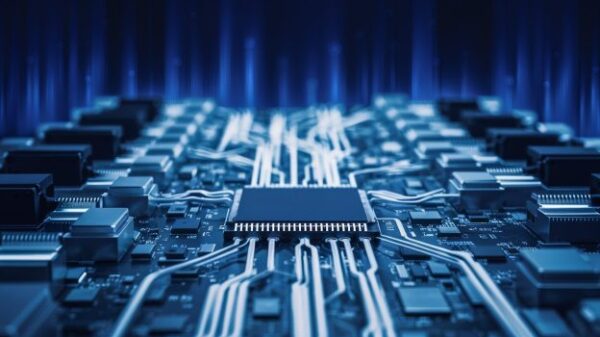

The graphics processing unit (GPU), once a humble graphics chip, has evolved into a versatile workhorse of modern technology, with a reach that extends from gaming to data centers. Tracing its roots to the 1987 VGA controller, it grew alongside gamers’ demand for greater visual fidelity and ultimately assimilated capabilities from digital signal processors (DSPs), Google’s tensor processing units (TPUs), and smartphone AI accelerators. The largest of today’s designs pack up to 4 trillion transistors, and the family powers everything from mobile devices to massive data-center infrastructures.

The GPU now plays an integral role in daily life: it helps drive cars, enhances mobile connectivity, and enables immersive gaming. That makes it worth reflecting on how quickly it has been integrated into other technologies. The concept of manipulating individual pixels emerged with the VGA controller in 1987, which catalyzed the adoption of advanced graphics in gaming. Demand for higher quality then led to more sophisticated graphics processors with multiple parallel pipelines, which would later be called “shaders.”

Dedicated graphics processors soon spread beyond gaming and appeared in mobile phones as standalone graphics co-processors. That development fed into system-on-chip (SoC) designs, made possible by the relentless progression described by Moore’s Law. Programmable shaders arrived in the early 2000s, and as single instruction, multiple data (SIMD) architectures evolved into single instruction, multiple threads (SIMT), unified programmable shaders followed in the mid-2000s.

Simultaneously, DSPs transitioned from specialized music and image engines to hybrid processors with added vector capabilities, ultimately merging into what are now recognized as neural processing units (NPUs). Google pioneered a dedicated matrix engine for artificial intelligence (AI) with its TPU in the mid-2010s, and comparable matrix engines were integrated into GPUs by 2017.

By the late 2010s, dedicated AI engines began to emerge as vision processors (VPUs) and were subsequently integrated into smartphone SoCs, marking the start of dedicated AI processing in consumer devices. The evolution of the GPU has been akin to a vast river, continuously absorbing innovations and technologies over its 27-year history, from early VGA sequencers to the Graphics Output Protocol (GOP) under UEFI, established in 2011.

Today, powerful x86 and Arm CPUs with integrated GPUs are entering the AI market, signaling a significant shift in computational architecture. As these technologies converge, history suggests it is only a matter of time before Arm or RISC-V CPUs are further assimilated into the architecture of powerful GPUs. The industry stands at a crossroads, weighing monolithic giants such as Cerebras’ wafer-scale designs against AMD’s chiplet constellations.

Industry leaders are already testing hybrid approaches, adding area-intensive static random-access memory (SRAM) to overcome memory bandwidth limitations. This ongoing evolution reflects four decades of assimilation and integration, which inform predictions about the future of computing hardware. Significant milestones along the way include the integration of the floating-point unit (FPU) into the 80486 in 1989 and the incorporation of integrated graphics into the processor package with Intel’s Westmere architecture in 2010.

As the era of AI processors emerges, the GPU’s role appears poised for further expansion. A comprehensive analysis of the AI processor market, including key players, financial metrics, and growth forecasts, is available in the 2026 AI processor report. The trajectory of GPU technology shows no signs of slowing, indicating that the industry will likely continue to embrace a philosophy of assimilation, blending capabilities to optimize performance.

In conclusion, as GPUs evolve in response to the insatiable demand for processing power, their continued integration with other technologies suggests a future where resistance is indeed futile; the appetite for innovation will ensure that all elements of computing will eventually be assimilated.
