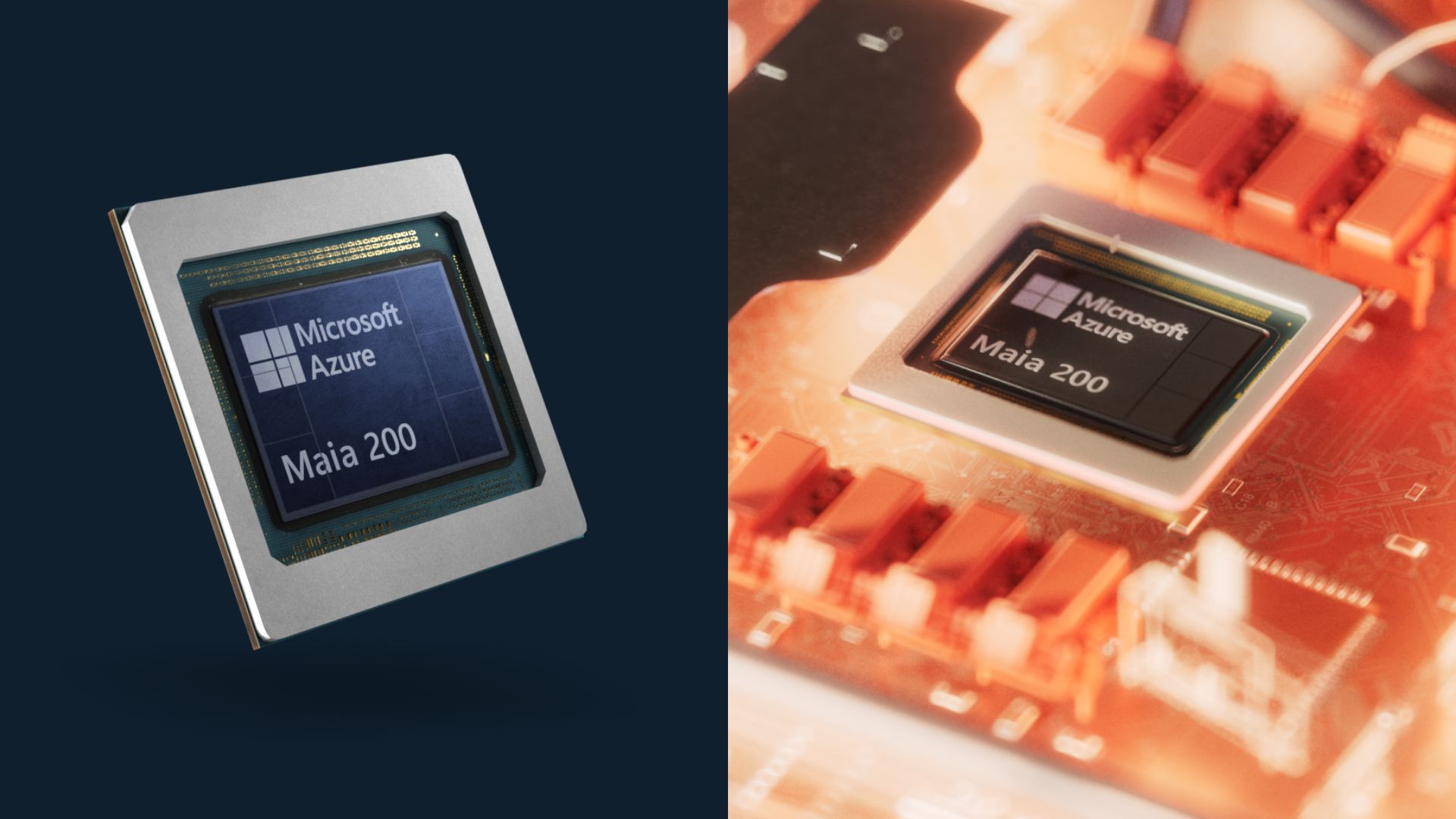

Microsoft has unveiled the Maia 200, its second-generation in-house AI chip, as competition intensifies over the cost of running large AI models. The new chip, which goes live this week at a Microsoft data center in Iowa, is designed specifically for inference, the ongoing process of serving AI responses to users. That focus marks a shift from earlier AI hardware, which concentrated on training models.

As AI chatbots and digital assistants scale to millions of users, inference costs have surged. Microsoft says the Maia 200 is built to meet this growing demand by improving inference performance so AI services can be delivered reliably at scale. A second deployment of the chip is planned for Arizona.

The Maia 200 succeeds the Maia 100, launched in 2023, and delivers a substantial performance increase. According to Microsoft, the new chip packs more than 100 billion transistors and delivers more than 10 petaflops of compute at 4-bit precision (FP4) and roughly 5 petaflops at 8-bit precision (FP8). Microsoft frames these figures around real-world inference workloads rather than training benchmarks, because inference prioritizes speed, stability, and energy efficiency. The company also claims that a single Maia 200 node can run today’s largest AI models while leaving room for larger models in the future.

The design of the Maia 200 reflects the demands of modern AI services, where fast responses are essential, especially during spikes in user traffic. To meet this requirement, the chip includes a large amount of SRAM, a fast memory that reduces latency when handling repeated queries. This approach mirrors a trend among newer AI hardware developers, who increasingly favor memory-heavy architectures to improve responsiveness at scale.

Beyond raw performance, the Maia 200 serves a strategic purpose: reducing Microsoft’s reliance on NVIDIA, whose GPUs have long dominated AI infrastructure. NVIDIA still leads in performance, and its combined hardware and software ecosystem gives it considerable influence over industry pricing and availability. Rival cloud providers have already introduced their own AI chips: Google offers its tensor processing units (TPUs), and Amazon Web Services promotes its Trainium and Inferentia products. With the Maia 200, Microsoft joins these major players in the competitive arena.

Microsoft has also made direct performance comparisons, stating that the Maia 200 delivers three times the FP4 (4-bit floating-point) performance of Amazon’s third-generation Trainium chip and higher FP8 performance than Google’s latest TPU. The chip is manufactured by Taiwan Semiconductor Manufacturing Co. (TSMC) on its 3-nanometer process and uses high-bandwidth memory (HBM), albeit an older generation than the memory in NVIDIA’s upcoming offerings.

Software Closes the Gap

Alongside the hardware, Microsoft has introduced new developer tools aimed at narrowing NVIDIA’s longstanding software advantage. They include Triton, an open-source framework for writing efficient AI code, to which OpenAI has made significant contributions. Microsoft is positioning Triton as a viable alternative to CUDA, NVIDIA’s dominant programming platform.

The Maia 200 is already running within Microsoft’s AI services, supporting models developed by the company’s Superintelligence team and powering applications such as Copilot. Microsoft has also invited developers, academics, and frontier AI labs to experiment with the Maia 200 software development kit (SDK), with the aim of fostering innovation within its ecosystem.

With the launch of the Maia 200, Microsoft signals a significant shift in the AI infrastructure landscape. While advancements in chip performance remain critical, control over software and deployment processes is becoming equally vital to success in the fast-evolving AI sector. This development may reshape the competitive dynamics of the industry as companies seek to balance cost, performance, and efficiency in their AI operations.