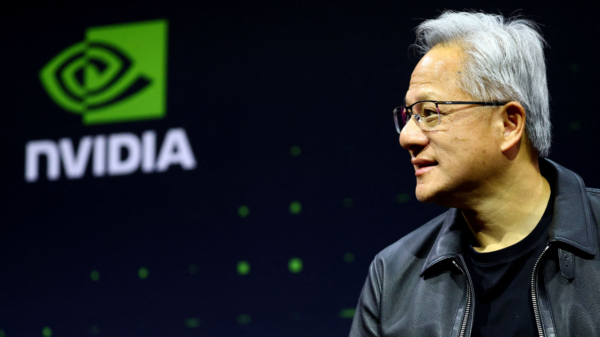

Microsoft has launched its second-generation artificial intelligence chip, the Maia 200, aiming to offer a competitive alternative to industry leader Nvidia and to the in-house chips of cloud rivals Amazon and Google. Announced in January 2026, the Maia 200 is designed to power AI workloads in Microsoft’s cloud services. It follows the first-generation Maia 100, introduced two years earlier, which was never offered to cloud customers.

The Maia 200 is built on the 3-nanometer process of Taiwan Semiconductor Manufacturing Co. (TSMC), with four chips connected within each server. The chips are linked with Ethernet cables rather than InfiniBand, the interconnect standard that Nvidia sells following its 2020 acquisition of Mellanox. In a blog post, Scott Guthrie, Microsoft’s executive vice president for cloud and AI, said the chip will have “wider customer availability in the future.”

In a social media post, Microsoft CEO Satya Nadella highlighted that the Maia 200 is now operational on Azure and boasts “30% better performance per dollar than current systems.” With over 10 petaflops (PFLOPS) of FP4 throughput and approximately 5 PFLOPS of FP8, along with 216GB of HBM3e memory and 7 terabytes per second (TB/s) memory bandwidth, the chip is optimized for large-scale AI workloads, adding to Microsoft’s portfolio of CPUs, GPUs, and custom accelerators.
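The quoted specifications support some quick arithmetic. Dividing peak compute by memory bandwidth gives the roofline “ridge point”: the number of operations a workload must perform per byte read from HBM before compute, rather than bandwidth, becomes the bottleneck. Dividing memory capacity by bytes per weight bounds how large a model fits. The sketch below is illustrative only and is not from Microsoft; it assumes peak (not sustained) throughput, decimal units, “over 10 PFLOPS” taken as exactly 10, weights-only memory use (no KV cache or activations), and, for the per-server figure, that weights can be sharded evenly across the four chips.

```python
# Back-of-the-envelope arithmetic on the Maia 200 figures quoted in this article.
# Assumptions (not from Microsoft): peak rather than sustained throughput, decimal
# units, "over 10 PFLOPS" taken as exactly 10, and weights-only memory use.

PEAK_FLOPS = {"fp4": 10e15, "fp8": 5e15}    # FLOP/s per chip
BYTES_PER_PARAM = {"fp4": 0.5, "fp8": 1.0}  # storage cost of one weight
HBM_BYTES = 216e9                           # 216 GB of HBM3e per chip
HBM_BANDWIDTH = 7e12                        # 7 TB/s per chip
CHIPS_PER_SERVER = 4                        # four chips per server


def ridge_point(fmt: str) -> float:
    """Roofline ridge point: FLOPs a kernel must do per byte read from HBM
    before peak compute, rather than memory bandwidth, becomes the limit."""
    return PEAK_FLOPS[fmt] / HBM_BANDWIDTH


def max_params(fmt: str, chips: int = 1) -> float:
    """Largest parameter count whose weights alone fit in HBM (no KV cache,
    activations, or runtime overhead), assuming even sharding over `chips`."""
    return chips * HBM_BYTES / BYTES_PER_PARAM[fmt]


if __name__ == "__main__":
    for fmt in ("fp4", "fp8"):
        print(f"{fmt}: ridge point ~{ridge_point(fmt):.0f} FLOP/byte, "
              f"weights for ~{max_params(fmt) / 1e9:.0f}B params per chip, "
              f"~{max_params(fmt, CHIPS_PER_SERVER) / 1e9:.0f}B per server")
    print(f"per server: {CHIPS_PER_SERVER * HBM_BYTES / 1e9:.0f} GB HBM, "
          f"{CHIPS_PER_SERVER * PEAK_FLOPS['fp4'] / 1e15:.0f} PFLOPS FP4")
```

Under these assumptions the FP4 ridge point is roughly 1,429 FLOP per byte, which suggests that low-batch inference (where each weight is read once per token) is far more likely to be limited by the 7 TB/s of bandwidth than by peak FP4 compute.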

Guthrie described the Maia 200 as “the most efficient inference system Microsoft has ever deployed.” As developers, researchers, and AI labs look for powerful, cost-effective compute, Microsoft is inviting them to apply for a preview of a software development kit (SDK) for the chip.

Some of the initial Maia 200 units will be allocated to Microsoft’s Superintelligence team, led by Mustafa Suleyman, who noted the chip’s importance for developing frontier AI models. The new chip will also support Microsoft’s Copilot assistant and the latest AI models from OpenAI, which are available to cloud customers.

Microsoft is also positioning the Maia 200 directly against its cloud rivals’ in-house silicon, asserting that it outperforms Amazon’s third-generation Trainium AI chip and Google’s seventh-generation tensor processing unit (TPU). According to Guthrie, the chip delivers three times the FP4 performance of the third-generation Trainium and higher FP8 performance than Google’s seventh-generation TPU. He called the Maia 200 “the most performant, first-party silicon from any hyperscaler” and reiterated that it offers 30% better performance per dollar than the latest generation of hardware in Microsoft’s fleet.
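A 30% gain in performance per dollar is not the same as a 30% cost cut. If performance per dollar means work done per unit of spend, the same workload costs 1/1.3 of what it did before, a saving of about 23%. The sketch below shows that conversion; it assumes this interpretation of “performance per dollar,” and the article does not name the baseline hardware or its prices.

```python
# Turning a "performance per dollar" gain into a cost saving for a fixed workload.
# Assumption: "30% better performance per dollar" means 1.3x the work per dollar
# spent; the baseline hardware and its prices are not specified in the article.


def relative_cost(perf_per_dollar_gain: float) -> float:
    """Cost of the same workload on the new system, with the old system = 1.0."""
    return 1.0 / (1.0 + perf_per_dollar_gain)


def cost_saving(perf_per_dollar_gain: float) -> float:
    """Fraction of spend saved for the same amount of work."""
    return 1.0 - relative_cost(perf_per_dollar_gain)


if __name__ == "__main__":
    gain = 0.30  # the 30% figure quoted by Nadella and Guthrie
    print(f"relative cost: {relative_cost(gain):.3f}")  # 1 / 1.3
    print(f"saving: {cost_saving(gain):.1%}")           # about 23%, not 30%
```

The same functions apply to any “X% better performance per dollar” claim: the saving for fixed work is always X / (1 + X).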

This initiative marks a significant escalation in Microsoft’s competitive strategy in the cloud computing space, as it seeks to catch up to Amazon and Google, both of which have long been designing their own chips. A recent report from Bloomberg indicates that the three tech giants share a common goal: to create efficient and cost-effective computing power that can be seamlessly integrated into data centers, providing substantial savings and enhanced efficiencies for cloud customers.

Demand for alternatives to Nvidia’s costly, heavily sought-after chips has accelerated this push, as companies look for ways to sustain growth and meet the rising compute needs of AI applications. Microsoft’s move into chip design reflects a broader industry trend in which cloud service providers invest in proprietary hardware to strengthen their service offerings.

The launch of the Maia 200 chip not only positions Microsoft as a serious contender in the AI chip market but also signals a new chapter in the ongoing race between the major players in cloud computing. As AI continues to reshape industries and drive technological advancements, the implications of this launch extend beyond hardware, potentially influencing the future landscape of cloud services and AI innovation.
