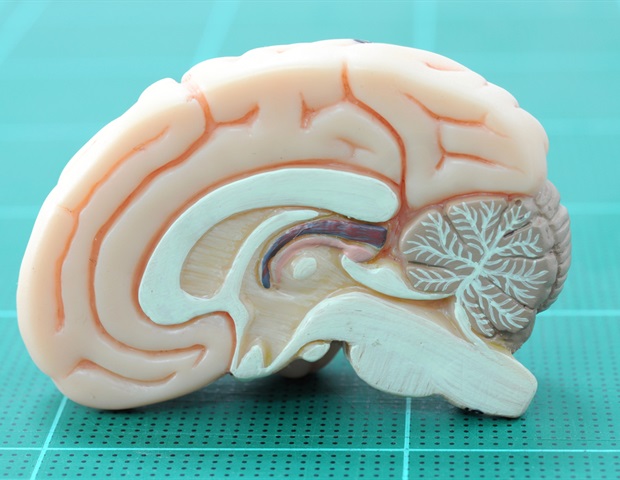

Researchers have proposed a framework that bridges large language models (LLMs) and neuroscience, aiming to improve both the computational efficiency and the interpretability of AI systems. The study stresses the need for progress on both fronts as LLMs become central to the pursuit of artificial general intelligence (AGI). Current models carry high computational and memory costs, which limits their viability as foundational tools in sectors such as healthcare and finance. The human brain, by contrast, runs on less than 20 watts while remaining remarkably transparent in its cognitive processes, which illustrates the gap to be bridged.

The proposed framework, termed NSLLM, converts conventional LLMs into spiking form by combining integer spike counting with binary spike conversion and by using a spike-based linear attention mechanism. This makes neuroscience tools applicable to LLMs and supports a closer look at how these models process information. By converting standard LLM outputs into spike representations, the researchers aim to analyze the information-processing capabilities of these large-scale systems.
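The two conversion steps can be pictured with a small sketch. The article does not give the paper's neuron model, so everything here is an assumption for illustration: the names `integer_spike_count` and `to_binary_spikes`, the firing `threshold`, the `max_count` cap, and the choice to place spikes at the start of the time window are all hypothetical.

```python
import math


def integer_spike_count(potential, threshold=1.0, max_count=4):
    """Map a real-valued membrane potential to an integer spike count.

    Assumed scheme: round to the nearest multiple of `threshold`,
    then clip to the range [0, max_count].
    """
    count = math.floor(potential / threshold + 0.5)
    return max(0, min(max_count, count))


def to_binary_spikes(count, timesteps):
    """Expand an integer spike count into a 0/1 spike train.

    Assumed layout: the spikes occupy the first `count` timesteps.
    """
    if not 0 <= count <= timesteps:
        raise ValueError("count must fit within the number of timesteps")
    return [1] * count + [0] * (timesteps - count)


# Hypothetical potentials for five neurons.
potentials = [-0.3, 0.4, 1.2, 2.6, 9.0]
counts = [integer_spike_count(p) for p in potentials]
trains = [to_binary_spikes(c, timesteps=4) for c in counts]

for p, c, t in zip(potentials, counts, trains):
    print(f"potential={p:5.1f}  count={c}  spikes={t}")
```

In this sketch the integer count is a compact form of the same information as the binary train, since the sum of each train equals its count. Binary events are what allow multiply operations to be replaced by additions in event-driven hardware.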

To validate the approach's energy efficiency, the study implemented a custom MatMul-free computing architecture for a billion-parameter model on a field-programmable gate array (FPGA) platform. Using a layer-wise quantization strategy, the team assessed each layer's contribution to quantization loss, which led to a mixed-timestep spike model that remains competitive under low-bit quantization. In addition, a quantization-assisted sparsification strategy reshapes the membrane potential distribution so that the quantization mapping is more likely to land on lower integer values, which significantly reduces the spike firing rate and improves model efficiency.
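A toy example shows why moving the membrane potential distribution toward lower integers reduces firing. It is not the paper's sparsification procedure: the constant `shift`, the rounding quantizer, and the sample potentials are assumptions chosen only to show the direction of the effect.

```python
import math


def quantize(potential, threshold=1.0, max_count=4):
    """Assumed quantizer: nearest integer spike count, clipped to [0, max_count]."""
    count = math.floor(potential / threshold + 0.5)
    return max(0, min(max_count, count))


def firing_rate(potentials, timesteps=4, shift=0.0):
    """Average spikes per neuron per timestep after subtracting `shift`.

    `shift` is a hypothetical stand-in for whatever mechanism moves the
    potential distribution toward lower quantization levels.
    """
    counts = [quantize(p - shift) for p in potentials]
    return sum(counts) / (len(potentials) * timesteps)


# Hypothetical membrane potentials for one layer.
layer_potentials = [0.2, 0.7, 1.1, 1.6, 2.4, 3.3]

rate_base = firing_rate(layer_potentials)
rate_shifted = firing_rate(layer_potentials, shift=0.5)

print(f"baseline firing rate: {rate_base:.3f}")
print(f"shifted firing rate:  {rate_shifted:.3f}")
```

Fewer spikes mean fewer events for the hardware to process. In practice any such shift would have to be balanced against accuracy, which the layer-wise analysis described above is meant to assess.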

On the VCK190 FPGA, the MatMul-free hardware core eliminated matrix multiplication operations within the NSLLM, drawing a dynamic power of 13.849 watts while reaching a throughput of 161.8 tokens per second. Compared with an A800 GPU, this corresponds to 19.8 times higher energy efficiency, 21.3 times memory savings, and 2.2 times higher inference throughput.
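The reported figures can be turned into energy per token with simple arithmetic. This is a back-of-the-envelope sketch rather than the paper's own accounting. It uses dynamic power only, and the GPU values are implied from the stated ratios on the assumption that "energy efficiency" means tokens per joule.

```python
# Figures quoted in the article.
fpga_power_w = 13.849          # dynamic power, watts
fpga_tokens_per_s = 161.8      # throughput
efficiency_ratio = 19.8        # FPGA vs. A800 GPU, energy efficiency
throughput_ratio = 2.2         # FPGA vs. A800 GPU, throughput

# Energy per token = power / throughput (joules per token).
fpga_joules_per_token = fpga_power_w / fpga_tokens_per_s
fpga_tokens_per_joule = 1.0 / fpga_joules_per_token

# Implied GPU baseline, ASSUMING the ratios are defined as
# tokens-per-joule and tokens-per-second respectively.
gpu_tokens_per_s = fpga_tokens_per_s / throughput_ratio
gpu_joules_per_token = fpga_joules_per_token * efficiency_ratio

print(f"FPGA energy per token: {fpga_joules_per_token:.4f} J")
print(f"FPGA tokens per joule: {fpga_tokens_per_joule:.2f}")
print(f"Implied GPU throughput: {gpu_tokens_per_s:.1f} tokens/s")
print(f"Implied GPU energy per token: {gpu_joules_per_token:.3f} J")
```

The implied GPU numbers are only as reliable as the assumed definitions. The article does not state how the paper measured GPU power or which workload was used.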

Beyond energy efficiency, the NSLLM framework improves the interpretability of LLMs by converting their behavior into neural dynamical representations such as spike trains. This lets researchers analyze dynamic properties of the neurons, including randomness measured by Kolmogorov–Sinai entropy, and information-processing characteristics measured by Shannon entropy and mutual information. The findings suggest that the model encodes information more effectively when dealing with unambiguous text. For example, middle layers showed higher normalized mutual information for ambiguous sentences, while the AS layer exhibited distinct dynamical signatures indicative of its role in sparse information processing. The FS layer, which displayed elevated Shannon entropy, pointed to a stronger capacity for information transmission.
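As a reference for the information-theoretic measures named above, here is a minimal plug-in estimator for discrete sequences such as binary spike trains. It is a sketch, not the paper's analysis pipeline. The function names are hypothetical, and the geometric-mean normalization is an assumption, since the article does not say which normalization was used.

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Plug-in Shannon entropy H(X) in bits of a discrete sequence."""
    n = len(symbols)
    return -sum((c / n) * math.log2(c / n) for c in Counter(symbols).values())


def mutual_information(xs, ys):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for two aligned sequences."""
    joint = list(zip(xs, ys))
    return shannon_entropy(xs) + shannon_entropy(ys) - shannon_entropy(joint)


def normalized_mutual_information(xs, ys):
    """MI divided by sqrt(H(X) * H(Y)); this normalization is an assumption."""
    denom = math.sqrt(shannon_entropy(xs) * shannon_entropy(ys))
    return mutual_information(xs, ys) / denom if denom > 0 else 0.0


# Hypothetical spike trains: an input, an exact copy, and an unrelated train.
input_train = [0, 0, 1, 1]
copy_train = [0, 0, 1, 1]
unrelated_train = [0, 1, 0, 1]

print("H(input)          =", shannon_entropy(input_train))
print("NMI(input, copy)  =", normalized_mutual_information(input_train, copy_train))
print("NMI(input, other) =", normalized_mutual_information(input_train, unrelated_train))
```

A train that copies its input shares all of the input's information, while a statistically independent train shares none. Real spike data would need far longer sequences, and plug-in estimates are biased for small samples.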

Crucially, the positive correlation between mutual information and Shannon entropy indicates that layers with higher information capacity are more adept at preserving key input features. Integrating neural dynamics with information-theoretic measures provides a biologically inspired approach to understanding LLM mechanisms, significantly reducing data requirements in the process.

Building on insights from neuroscience, which emphasizes energy-efficient information processing through sparse, event-driven computation, the research team developed a neuromorphic alternative to conventional LLMs. The approach matches the performance of mainstream models on tasks such as reading comprehension, world knowledge question answering, and mathematical reasoning, and it points toward more energy-efficient artificial intelligence.

As the field of AI continues to evolve, this interdisciplinary framework offers fresh perspectives on the interpretability of large language models and insights for the design of future neuromorphic chips. The implications of this research extend beyond academic interest, potentially reshaping how AI systems are developed and integrated into society, fostering a more sustainable and transparent future for artificial intelligence.

Source: Xu, Y., et al. (2025). Neuromorphic spike-based large language model. National Science Review. doi: 10.1093/nsr/nwaf551. https://academic.oup.com/nsr/advance-article/doi/10.1093/nsr/nwaf551/8365570
