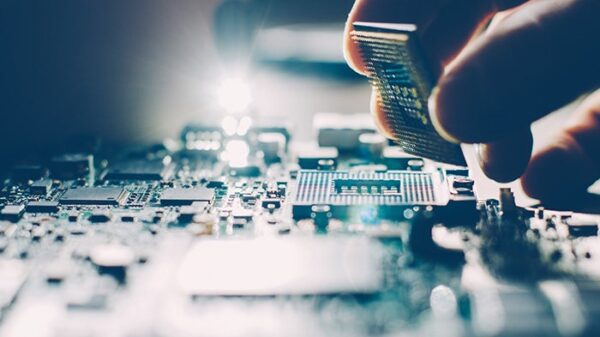

OpenAI is re-evaluating its hardware strategy as the focus of artificial intelligence shifts from training models to running them efficiently in real time, a stage known as inference. While Nvidia continues to dominate the market for chips used to train large AI models, sources indicate that OpenAI is exploring alternatives from Advanced Micro Devices (AMD), Cerebras, and Groq. The goal is to improve speed and efficiency for inference-heavy workloads. The move reflects a broader industry trend toward specialized hardware as demand for consumer-facing AI grows.

In San Francisco, OpenAI has been in discussions with various hardware suppliers since last year. Nvidia's GPUs remain central to OpenAI's infrastructure. However, the growing emphasis on real-time inference tasks, such as coding tools, has prompted OpenAI to seek hardware that delivers lower latency and faster memory access. The distinction matters because inference demands different capabilities from training, which relies on massive parallel processing power.

Nvidia's chips have long been the standard for AI training. Inference, in which trained models generate responses to queries, depends instead on rapid memory access and low latency. OpenAI is therefore evaluating alternative architectures that embed SRAM directly on the chip, which could offer speed advantages for real-time applications. This reassessment may mark a significant shift in the competitive landscape of AI hardware.

Among the companies OpenAI has engaged with, AMD has been assessed as a GPU supplier that could broaden its hardware base. Cerebras, known for its wafer-scale chips with extensive on-chip memory, has formed a partnership with OpenAI focused on improving inference performance. OpenAI also held discussions with Groq about compute capacity. However, Groq's recent licensing agreement with Nvidia, valued at roughly $20 billion, has shifted Groq's focus toward software and cloud services.

Despite these exploratory efforts, both OpenAI and Nvidia maintain that Nvidia's technology still underpins the majority of OpenAI's operations and offers strong performance for its cost. Nvidia CEO Jensen Huang called any notion of discord with OpenAI "nonsense." OpenAI CEO Sam Altman said Nvidia makes "the best AI chips in the world" and indicated that OpenAI intends to remain a major customer.

OpenAI's reassessment mirrors a larger trend as AI moves from research into mass-market consumer and enterprise applications. In that setting, the cost and performance of inference matter more and more. Google, for example, is also investing in custom TPUs designed for real-time AI workloads. Rather than seeking to replace Nvidia entirely, OpenAI appears to be pursuing diversification. This approach reduces its reliance on a single supplier while keeping Nvidia as a key partner in its infrastructure.

The implications of OpenAI's hardware diversification are significant. As AI services such as ChatGPT expand globally, inference is on track to become the main performance battleground. Exploring suppliers beyond Nvidia can give OpenAI leverage on pricing, capacity, and performance. Increased competition could also spur innovation in memory-centric AI accelerators from AMD, Cerebras, and others.

Reports of OpenAI's move toward hardware diversification, along with the postponement of Nvidia investment discussions, have weighed on Nvidia's stock in some markets. This reaction highlights how sensitive investors are to changes in the AI supply chain. As inference gains prominence relative to training, the hardware market may become more competitive and gradually reduce Nvidia's centrality. That shift could redefine the relationships and balance of power among major tech players in AI.

Looking ahead, OpenAI’s efforts at diversifying its hardware partnerships could significantly shape the future of real-time AI applications and their underlying infrastructure. As companies adapt to the escalating demands of consumer-facing AI, the potential for accelerated innovation in specialized chips presents both opportunities and challenges for established leaders in the market.
