In a recent podcast, Lukasz Kaiser, a chief research scientist at OpenAI and co-author of the influential Transformer paper, offered a counter-narrative to the growing belief that artificial intelligence (AI) has reached a plateau. Despite widespread claims of a slowdown, Kaiser asserted that AI continues to advance along a stable, exponential growth curve. The perceived stagnation, he argued, comes from a change in the type of breakthrough: the field has moved from building ever-larger models to building smarter, more capable systems.

Kaiser emphasized that while pre-training remains vital, it is no longer the sole engine driving AI progress. Inference models act as a “second brain,” enabling these systems to learn, derive, verify, and self-correct rather than merely predict the next word in a sequence. This capability improves both the models’ performance and the reliability of their outputs, allowing significant leaps in capability for the same resources.

However, Kaiser acknowledged the uneven landscape of AI intelligence. He noted that while some models excel at solving complex mathematical problems, they may falter in simpler tasks, such as counting objects in a children’s puzzle. This inconsistency highlights the ongoing challenges in achieving a balanced intelligence across diverse tasks. At the same time, the need for cost-effective solutions has become paramount, as the industry grapples with the realities of serving millions of users without excessive expenditure on computing power. Model distillation has transitioned from an optional strategy to a crucial necessity, determining whether AI can achieve widespread adoption.
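The article does not say how distillation is carried out. As a rough illustration only, one widely used formulation trains a small "student" model to match the temperature-softened output distribution of a larger "teacher". The sketch below computes that loss in plain Python; the function names, the example logits, and the temperature value are all assumptions made for illustration, not details from the podcast.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # nearly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # disagrees with the teacher

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

A student that already agrees with the teacher incurs a loss near zero, while one that disagrees is penalized more heavily. Minimizing this quantity over many examples is what lets a cheaper model inherit much of a larger model's behavior.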

During the podcast, Kaiser reiterated that AI technology has consistently advanced in a stable exponential manner. He pointed to a series of recent high-profile model releases—such as GPT-5.1, Codex Max, and Gemini Nano Pro—as evidence contradicting the theory of stagnation. These developments are rooted in new discoveries, enhanced computing power, and refined engineering practices, which collectively contribute to ongoing progress.

He elaborated on the evolution from earlier language models, stating that the transition from ChatGPT 3.5 to its latest iterations marks a pivotal change in capabilities. The latest models can search the web and perform reasoning and analysis, providing more accurate and contextually relevant answers. For instance, when asked about current events, an earlier version might have fabricated an answer or relied on outdated information, while the newer iterations can check real-time data before responding.

Kaiser acknowledged that while progress is evident, numerous engineering challenges remain. Better data quality and better-optimized algorithms are essential, as many models still struggle with logical consistency and can lose track of information from earlier in a long conversation. The move towards multi-modal capabilities, where models process several types of data such as images and sounds, presents additional hurdles that require substantial investment.

Turning to inference models, Kaiser highlighted their transformative potential. Unlike basic models, inference systems can engage in a process of “thinking” that includes forming a chain of thought and utilizing external tools to enhance their reasoning. This shift focuses on teaching models “how to think” rather than simply responding based on memory, a change that promises to refine their reasoning abilities.
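The loop of proposing an answer, checking it with an external tool, and retrying on failure can be sketched in a few lines. This is a toy illustration, not a description of how any production system works: `propose_answers` is a hypothetical stand-in for a model's successive attempts (the first is deliberately wrong), and `calculator_tool` is a made-up tool that only handles multiplication.

```python
def propose_answers(question):
    """Hypothetical stand-in for a model's successive attempts at a question."""
    yield "17 * 24 = 398"  # first attempt: a deliberate arithmetic slip
    yield "17 * 24 = 408"  # second attempt: corrected

def calculator_tool(expression):
    """Toy external tool: evaluates 'a * b' for integer a and b."""
    left, right = expression.split("*")
    return int(left) * int(right)

def verify(candidate):
    """Check the claimed result against the tool instead of trusting it."""
    expression, claimed = candidate.split("=")
    return calculator_tool(expression) == int(claimed)

def solve(question, max_attempts=3):
    """Propose, verify, and retry until an answer passes or attempts run out."""
    trace = []
    for attempt, candidate in enumerate(propose_answers(question), start=1):
        if attempt > max_attempts:
            break
        ok = verify(candidate)
        trace.append((candidate, ok))
        if ok:
            return candidate, trace
    return None, trace

answer, trace = solve("What is 17 * 24?")
print(answer)
for step, ok in trace:
    print(step, "->", "verified" if ok else "rejected")
```

The point of the sketch is the control flow: the first candidate is rejected by the check and the second is accepted, which is the self-correction behavior the article attributes to these systems.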

The podcast also delved into the significance of reinforcement learning in AI training. Kaiser explained that while traditional pre-training methods relied on vast datasets with less stringent quality control, reinforcement learning introduced a new paradigm focused on developing the model’s ability to evaluate its own reasoning. This approach has already shown promise in fields like mathematics and programming, leading to advancements in self-correction capabilities.

Looking ahead, Kaiser stressed the need to balance model capability against the demand for efficiency. The move from large-scale pre-training towards more economical approaches reflects the industry’s shift to cost-effective AI deployment, particularly as user bases expand.

In closing, Kaiser reaffirmed the enduring value of pre-training, suggesting that while current trends may prioritize efficiency and cost, the foundation of AI advancements remains rooted in robust pre-training processes. This dual focus on innovation and practicality will be crucial as the industry continues to evolve, ensuring that AI can not only meet current demands but also adapt to future challenges.

See also Alphabet’s AI Infrastructure Expansion Positions It as Top Stock for Future Growth

Alphabet’s AI Infrastructure Expansion Positions It as Top Stock for Future Growth Ukraine Develops National AI Using Google’s Gemma Framework for Military and Civilian Use

Ukraine Develops National AI Using Google’s Gemma Framework for Military and Civilian Use AI Researchers Unveil Expert System for Hydroponic Strawberry Cultivation, Enhancing Sustainability and Yield

AI Researchers Unveil Expert System for Hydroponic Strawberry Cultivation, Enhancing Sustainability and Yield Nvidia Invests $2B in Synopsys to Revolutionize AI Chip Design and Engineering Workflows

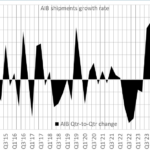

Nvidia Invests $2B in Synopsys to Revolutionize AI Chip Design and Engineering Workflows Jon Peddie Research Reveals Q3’25 AIB Market at $8.8B with -0.7% CAGR Forecast

Jon Peddie Research Reveals Q3’25 AIB Market at $8.8B with -0.7% CAGR Forecast