Lawrence Livermore National Laboratory (LLNL) celebrated a series of achievements at the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC25), reaffirming its leadership in supercomputing. The event, held in St. Louis, featured over 16,000 attendees and nearly 560 exhibitors, showcasing the growing intersection of high-performance computing (HPC) and artificial intelligence (AI). LLNL’s contributions, led by Principal Deputy Associate Director for Computing Lori Diachin, highlighted its significant role in driving HPC innovation.

During a press conference on November 17, Diachin welcomed attendees and noted the record turnout and robust technical program, which included over 600 paper submissions. At this event, the latest Top500 list was unveiled, with LLNL’s flagship supercomputer, El Capitan, achieving the top ranking for the second consecutive time. With a verified speed of 1.809 exaFLOPs, El Capitan demonstrated exceptional performance on various benchmarks, including High Performance Linpack (HPL), High Performance Conjugate Gradients, and HPL-MxP mixed-precision benchmarks. This performance underscores its versatility in handling traditional simulations, memory-intensive workloads, and AI-driven tasks.

Funded by the National Nuclear Security Administration’s Advanced Simulation and Computing program, El Capitan is the result of a collaboration among LLNL, HPE, and AMD, and is supported by a comprehensive suite of software developed by LLNL for system management and large-scale AI workflows.

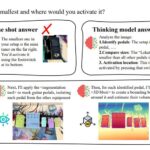

LLNL also won the prestigious Gordon Bell Prize for its advancements in real-time tsunami forecasting, in collaboration with the University of Texas at Austin’s Oden Institute and the Scripps Institution of Oceanography. The team used the Lab-developed MFEM finite element library to convert deep-ocean pressure data into localized tsunami predictions in under 0.2 seconds, a process approximately 10 billion times faster than traditional methods. This breakthrough not only enhances rapid warning systems but also significantly reduces false alarms.

The Gordon Bell Prize is widely regarded as the highest honor in supercomputing, recognizing significant achievements in scientific computing. Tzanio Kolev, a computational mathematician at LLNL and co-author of the study, expressed excitement about the award, emphasizing the project’s collaborative nature and its potential applications across various fields.

The collaborative efforts on El Capitan were sparked by a chance conversation at the previous SC conference. The tsunami simulation visuals were also featured in SC25’s “Art of HPC” exhibition, which showcased artistic interpretations of scientific computing and simulation. The initiative resonated with Diachin, who has a personal interest in art.

In addition to the Gordon Bell Prize-winning work, El Capitan was also represented among the finalists by a record-scale rocket-exhaust simulation, which pushed the boundaries of fully coupled fluid-chemistry models on exascale hardware. This effort highlights the ability of next-generation architectures to accurately capture complex physical phenomena.

LLNL was recognized in multiple categories at the annual HPCwire Readers’ and Editors’ Choice Awards and received a 2025 Hyperion Research HPC Innovation Excellence Award for its leadership in exascale computing and its significant scientific contributions.

During the conference, LLNL’s Harshitha Menon was a finalist for the SC25 Best Poster Award for her research on the interpretability of large language models (LLMs) in HPC code generation. Her work aims to understand how these models optimize parallel scientific software, focusing on aspects like trust and reliability, which are vital in high-stakes computing environments.

AI’s role in enhancing scientific computing was a recurring theme throughout SC25, with LLNL researchers demonstrating next-generation AI models tailored for frontier HPC systems. Nikoli Dryden, a research scientist at LLNL, presented the ElMerFold model, which set records in protein folding workflows on El Capitan, achieving a remarkable reduction in computation time.

Department of Energy Undersecretary for Science Dario Gil emphasized the importance of a coordinated national effort in AI, HPC, and quantum computing during his address at the DOE booth. He reiterated the necessity for collaboration among the DOE, industry, and academia to meet national goals.

Despite challenges, including a federal government shutdown, LLNL maintained a robust presence throughout the SC25 program, featuring tutorials on large-scale workflows and AI-accelerated science, among other topics. Diachin’s leadership was instrumental in shaping the event, which she noted is not just about research but also about fostering connections that can lead to scientific breakthroughs.

Looking ahead, LLNL continues to play a pivotal role in the HPC landscape, with ongoing innovations poised to reshape the future of computational science and AI applications across various domains.
