In a recent interview, Ruth Porat, the Chief Financial Officer of Alphabet Inc., discussed the implications of a newly discovered vulnerability in the Git MCP Server, a Model Context Protocol (MCP) server that lets AI agents read and act on Git repositories. Porat emphasized the challenges that information security leaders and developers face in mitigating the flaw, which enabled prompt injection attacks even in the server's most secure configurations. The vulnerability has raised concerns about what large language models (LLMs) can do once they are connected to such server environments.

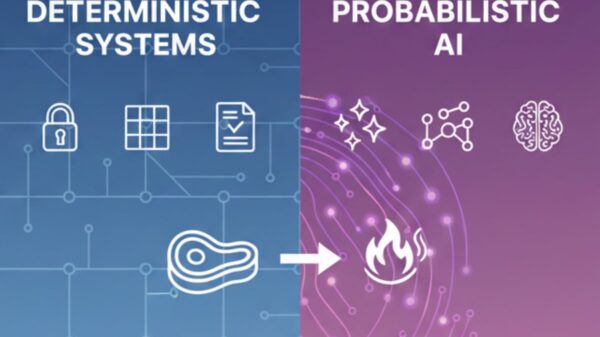

“You need guardrails around each [AI] agent and what it can do, what it can touch,” said John Tal, a cybersecurity expert, underscoring the importance of strict control measures. Tal added that organizations must be able to audit the actions AI agents have taken in the event of an incident. This kind of oversight is crucial for maintaining security in an ecosystem that relies increasingly on AI.

The Git MCP Server's architecture, which gives LLMs access to sensitive functions, has drawn the attention of experts such as Johannes Ullrich, dean of research at the SANS Institute. Ullrich said the severity of the issue depends on which features the LLM can reach: “How much of a problem this is depends on the particular features they have access to.” Once connected to the server, an LLM can be steered by the content it receives into executing code, raising the risk of data breaches and unauthorized actions.

The vulnerability not only exposes the immediate risks associated with the Git MCP Server but also highlights a broader concern regarding the integration of AI in software development environments. As organizations increasingly deploy sophisticated AI solutions, the need for robust security protocols becomes paramount. Failure to establish appropriate safeguards could lead to significant operational disruptions and data security issues.

Porat’s remarks come in the wake of heightened scrutiny over the safety of AI deployments, particularly as companies rush to adopt these technologies without fully understanding their implications. The Git MCP Server vulnerability serves as a stark reminder of the potential pitfalls in this fast-evolving landscape. As AI agents become more prevalent, the industry must collectively confront the challenges of securing these systems against existing and emerging threats.

The discourse surrounding the Git MCP Server vulnerability reflects a growing recognition that AI systems must operate within well-defined boundaries to minimize risk. Experts are advocating for a comprehensive approach that not only mitigates current vulnerabilities but also anticipates future risks associated with AI deployments. This includes implementing granular control measures and ensuring thorough monitoring and auditing capabilities for AI agents.
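The guardrail-plus-audit approach the experts describe can be sketched in a few lines. The following is a minimal, hypothetical Python illustration, not code from the Git MCP Server or any vendor: it assumes an agent's tool calls pass through a gate that checks an allowlist of tool names and a single permitted repository directory, and that records every request, allowed or denied. The class names, tool names, and the `/srv/repos/demo` path are illustrative assumptions.

```python
import time
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class ToolPolicy:
    """Hypothetical guardrail: which tools an agent may call and which directory it may touch."""
    allowed_tools: set[str]
    allowed_root: Path

    def permits(self, tool: str, path: str) -> bool:
        # Reject any tool that is not explicitly allowlisted.
        if tool not in self.allowed_tools:
            return False
        # Resolve ".." and any existing symlinks so the agent cannot escape the permitted root.
        target = (self.allowed_root / path).resolve()
        return target.is_relative_to(self.allowed_root.resolve())


@dataclass
class AuditedGate:
    """Hypothetical wrapper that checks every request and records it for later audit."""
    policy: ToolPolicy
    log: list[dict] = field(default_factory=list)

    def request(self, tool: str, path: str) -> bool:
        allowed = self.policy.permits(tool, path)
        # Record denied requests too, so attempted misuse is visible after an incident.
        self.log.append({"ts": time.time(), "tool": tool, "path": path, "allowed": allowed})
        return allowed


if __name__ == "__main__":
    policy = ToolPolicy(
        allowed_tools={"git_status", "git_diff"},  # illustrative tool names
        allowed_root=Path("/srv/repos/demo"),     # hypothetical repository path
    )
    gate = AuditedGate(policy)
    print(gate.request("git_status", "."))        # True
    print(gate.request("git_init", "."))          # False: tool not allowlisted
    print(gate.request("git_diff", "../../etc"))  # False: path escapes the allowed root
    for entry in gate.log:
        print(entry["tool"], entry["path"], entry["allowed"])
```

Logging denied requests as well as allowed ones is the design choice that matters for incident response: an audit trail that records only successful actions would hide attempted misuse, such as an injected instruction asking the agent to touch files outside the repository.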

As organizations continue to navigate the complexities of AI integration, the lessons learned from the Git MCP Server incident will likely shape future strategies for cybersecurity in technology development. The need for vigilance and proactive measures in the face of evolving threats is clearer than ever, underscoring the importance of collaboration across sectors to fortify defenses against potential vulnerabilities.
