Actors Abhishek Bachchan and Aishwarya Rai Bachchan have filed a lawsuit against Google and YouTube in the Delhi High Court, alleging that AI-generated videos depicting them in fictitious and explicit scenarios violate their personality rights. The couple says these depictions have caused them reputational and financial harm, and they are seeking compensation along with measures to prevent such content from being used to train future AI models.

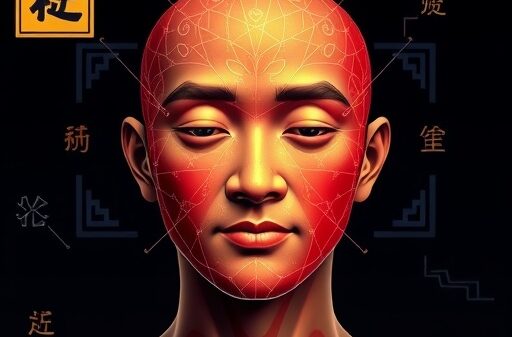

This legal battle underscores the complex intersection of artificial intelligence and personal rights, and it forces a reassessment of the frameworks that govern personality rights. These rights, which include control over one’s name, image, likeness, and voice, have historically protected individuals against unauthorized exploitation. Rooted in privacy, dignity, and economic autonomy, they developed through the common law to address commercial misuse. Generative technologies, however, now pose unprecedented challenges to them. Deepfakes, which manipulate audio and visual content to misrepresent individuals, raise concerns about misinformation, extortion, and an erosion of trust in media. While AI can drive innovation, its misuse risks commodifying human identity, which makes legal safeguards urgent.

Globally, the legal landscape for personality rights is fragmented: Europe follows a dignity-based model, the United States a property-oriented one, and India a hybrid of the two. In India, personality rights are not codified; they derive from the right to life and personal liberty under Article 21 of the Constitution, within which Justice K.S. Puttaswamy v. Union of India (2017) recognized a fundamental right to privacy. Courts have begun treating AI-driven infringements as breaches of privacy or of intellectual property. In Amitabh Bachchan v. Rajat Nagi (2022), the Delhi High Court recognized the actor’s personality rights, and in Anil Kapoor v. Simply Life India (2023) it restrained AI reproductions of Kapoor’s identity, finding that they diluted his brand value. The Bombay High Court’s ruling in Arijit Singh v. Codible Ventures LLP (2024) further protected Singh’s voice from unauthorized AI replication. Together, these cases point to a judicial shift towards a hybrid privacy-property framework. Nevertheless, India’s legal system remains reactive, and enforcement is hampered by anonymous infringers and cross-border data flows.

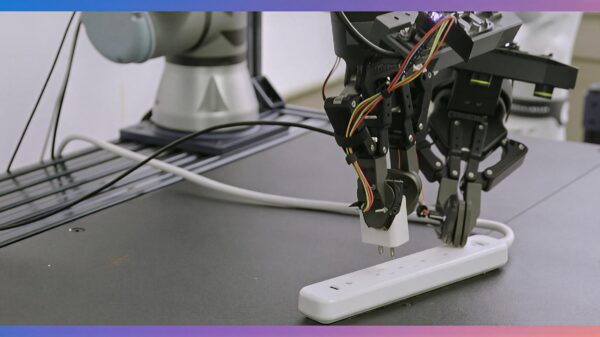

In the United States, personality rights, usually called the “right of publicity,” vary by state and are treated as a transferable property interest. The landmark case Haelan Laboratories v. Topps Chewing Gum (1953) established this right as distinct from privacy, allowing celebrities to monetize their identities. More recent reforms, such as Tennessee’s ELVIS Act (2024), aim to curb unauthorized AI exploitation of voices and likenesses in response to the rise of deepfakes. Separately, lawsuits against Character.AI have raised alarms, alleging that its chatbots contributed to self-harm among users, including teen suicides. In one such case, filed in Florida in 2024, a chatbot was accused of posing as a therapist, and a federal judge later rejected Character.AI’s bid to dismiss the case on First Amendment grounds.

In the European Union, personality rights follow a dignity-based model, reinforced by the General Data Protection Regulation (GDPR) of 2016, which requires a lawful basis, such as consent, for processing personal data and sets stricter conditions for biometric data. The EU AI Act (2024) imposes transparency obligations on deepfakes, requiring that AI-generated or manipulated content be disclosed as such. In China, a 2024 ruling by the Beijing Internet Court declared that synthetic voices must not mislead consumers, signaling a trend towards stricter regulation of AI content. Additionally, a voice actor obtained damages after an AI replica of their voice was sold without consent, reinforcing the view that voice is integral to personality rights.

This fragmentation shows that AI, which operates across borders, frequently outruns the reach of national laws. In a recent paper titled “AI Ethics and Creators’ Feelings: Extended Personality Rights as (Property) Rights to Object,” Guido Westkamp and colleagues argue for broader rights that cover appropriation of style and persona, to protect creators from exploitative uses of their data by AI systems.

The debate over personality rights in the age of AI also touches on ethics, dignity, and autonomy. UNESCO’s 2021 Recommendation on the Ethics of Artificial Intelligence sets out a rights-based framework that stresses preventing AI from being used to exploit individuals. Critics of India’s current legal framework, such as S.T. Aldrich and K.R. Smith in their 2024 survey of anonymization strategies, argue for comprehensive statutory definitions and a high-risk classification for deepfakes used to spread misinformation. Ethical dilemmas persist, particularly around AI recreations of deceased artists: because Indian courts often treat personality rights as non-heritable, the estates of such artists may have little recourse. Broader scholarship cautions against granting legal personhood to AI, warning that such a move could undermine human rights. AI’s dual nature is evident in tools like ChatGPT, which can enhance creativity while also posing risks of harm. At the same time, excessive regulation could stifle innovation.

These controversies expose significant gaps in the regulatory landscape. India should consider legislation that clearly defines personality rights and provides for AI watermarking, platform liability, and international cooperation. The government’s 2024 advisory on deepfakes is a first step, but stronger measures are needed to address these challenges effectively. International collaboration grounded in UNESCO’s principles could help prevent ethical erosion and strike a balance between AI innovation and personal rights.