DeepSeek, a Chinese AI startup, has kicked off the year with a new approach to training large language models that analysts say could significantly influence the AI landscape. On Wednesday, the company published a research paper describing the method, called “Manifold-Constrained Hyper-Connections,” or mHC, which is designed to let language models scale up without sacrificing training stability.

The paper, co-authored by DeepSeek founder Liang Wenfeng, tackles a common challenge in the field: as language models grow, efforts to improve communication between their internal components often make training unstable. mHC allows richer information sharing while constraining how that information is exchanged, which preserves both training stability and computational efficiency.
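The article describes mHC only at a high level. As a toy illustration of how "richer sharing, but constrained" can keep training stable, the sketch assumes that a model carries several parallel residual streams mixed by a small learned matrix, and that the constraint keeps that matrix doubly stochastic (every row and column sums to 1) via Sinkhorn-Knopp normalization. Those details, and the function names `sinkhorn` and `mix_streams`, are our assumptions for illustration; this is not DeepSeek's implementation.

```python
import math
import random

# Toy illustration only: ASSUMES the constraint keeps the stream-mixing
# matrix doubly stochastic. Names here are hypothetical, not DeepSeek's code.

def sinkhorn(logits, iters=100):
    """Map a square matrix of real-valued logits to an (approximately)
    doubly stochastic matrix by alternately normalizing rows and columns."""
    n = len(logits)
    m = [[math.exp(x) for x in row] for row in logits]  # make every entry positive
    for _ in range(iters):
        # Scale each row so it sums to 1.
        row_sums = [sum(row) for row in m]
        m = [[x / s for x in row] for row, s in zip(m, row_sums)]
        # Scale each column so it sums to 1.
        col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
        m = [[m[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
    return m

def mix_streams(streams, mixing):
    """Mix n parallel residual streams (each a list of floats) with an n x n matrix:
    output stream i is sum_k mixing[i][k] * streams[k]."""
    n, dim = len(streams), len(streams[0])
    return [
        [sum(mixing[i][k] * streams[k][d] for k in range(n)) for d in range(dim)]
        for i in range(n)
    ]

if __name__ == "__main__":
    random.seed(0)
    n, dim, depth = 4, 3, 20
    streams = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(n)]
    before = max(abs(x) for s in streams for x in s)
    # Simulate many layers, each with its own randomly initialized mixing matrix.
    for _ in range(depth):
        logits = [[random.gauss(0, 1) for _ in range(n)] for _ in range(n)]
        streams = mix_streams(streams, sinkhorn(logits))
    after = max(abs(x) for s in streams for x in s)
    print(f"max |x| before: {before:.3f}, after {depth} layers: {after:.3f}")
```

Because each output stream is a nonnegative weighted average of the inputs (rows sum to 1) and each input's contributions sum to 1 across outputs (columns sum to 1), stacking many such mixing steps neither amplifies the signal nor loses its total. That is the intuition, under the stated assumption, for why constraining the connections can keep deep training stable.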

The research has drawn significant attention. Wei Sun, principal analyst for AI at Counterpoint Research, called the method a “striking breakthrough.” Sun said DeepSeek’s approach combines various techniques to minimize training costs while potentially boosting performance, and that the research showcases the company’s ability to integrate “rapid experimentation with highly unconventional research ideas.”

Sun also referenced DeepSeek’s previous success with its R1 reasoning model, which, upon its launch in January 2025, was able to compete with leading products such as ChatGPT at a lower cost, marking a pivotal moment in the tech industry. The research paper signals DeepSeek’s continued capacity to “bypass compute bottlenecks and unlock leaps in intelligence,” she added.

Lian Jye Su, chief analyst at Omdia, likewise emphasized the potential ripple effect across the AI sector, noting that other labs may develop their own versions of the approach. He said DeepSeek’s willingness to share critical findings signals growing confidence in the Chinese AI industry, with openness positioned as both a strategic advantage and a key differentiator.

Against this backdrop, speculation is mounting about DeepSeek’s next flagship model, R2, whose release has been delayed; the delays have been attributed to Liang’s dissatisfaction with its initial performance and to shortages of advanced AI chips. The paper does not mention R2, but its timing has raised questions, since DeepSeek has historically published foundational training research ahead of major model launches.

Su said DeepSeek’s track record suggests the new architecture will likely be built into its forthcoming model. Sun was more cautious, suggesting that R2 may not arrive as a standalone release: because DeepSeek has already folded updates from the R1 model into its V3 model, mHC could instead serve as a foundation for the anticipated V4.

Previous updates to the R1 model, however, failed to gain much traction in the tech community, and as Business Insider’s Alistair Barr has pointed out, distribution remains a critical issue. DeepSeek continues to struggle for visibility and reach, particularly in Western markets, where competitors such as OpenAI and Google dominate.

As the AI sector evolves, DeepSeek’s latest research reflects a broader industry focus on scalability, performance, and stability. The company’s willingness to share its findings, together with its ongoing model development, positions it as a significant player in the competitive AI landscape.

See also AI Chatbots’ Dark Side: 33% of Teens Use Them for Emotional Support, Raising Concerns

AI Chatbots’ Dark Side: 33% of Teens Use Them for Emotional Support, Raising Concerns AI in Healthcare: Payment Battles Intensify as 20+ Devices Await CPT Codes by 2026

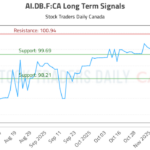

AI in Healthcare: Payment Battles Intensify as 20+ Devices Await CPT Codes by 2026 AI.DB.F:CA Trading Strategy Revealed—Buy at 99.69, Target 100.94 with Neutral Outlook

AI.DB.F:CA Trading Strategy Revealed—Buy at 99.69, Target 100.94 with Neutral Outlook Musk’s xAI Acquires Third Building, Boosting AI Compute Capacity to Nearly 2GW

Musk’s xAI Acquires Third Building, Boosting AI Compute Capacity to Nearly 2GW SoundHound AI Stock Plummets 56%: Assessing Buy Opportunities Amid Growth Potential

SoundHound AI Stock Plummets 56%: Assessing Buy Opportunities Amid Growth Potential