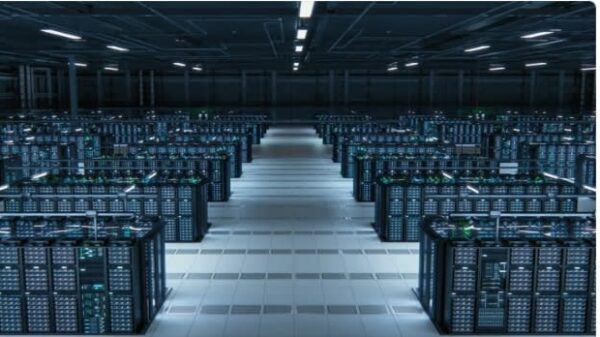

DeepSeek has unveiled Engram, a technique that lets artificial intelligence models query a store of information held in system memory. The paper, released on the company’s GitHub page, describes how Engram improves performance on long-context queries by letting models commit data sequences to static memory. Offloading these lookups frees graphics processing units (GPUs) for more complex work and reduces reliance on high-bandwidth memory (HBM), which is under growing supply pressure.

The research describes how N-grams, short contiguous sequences of N words or tokens, are integrated into the models’ neural networks to form a queryable memory bank. Engram lets a model retrieve facts directly instead of reasoning them out, which is computationally expensive. By relieving GPUs of basic memory tasks, DeepSeek aims to ease demand for HBM while supply remains constrained.
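The lookup idea can be illustrated with a toy example. This is a minimal sketch and not DeepSeek's implementation: the `NGramMemory` class, its methods, and the stored fact are all hypothetical names chosen for illustration, and a plain dictionary stands in for whatever memory structure the paper actually uses.

```python
from typing import Dict, List, Optional, Tuple


class NGramMemory:
    """Toy memory bank keyed by the last n tokens (hypothetical, for illustration)."""

    def __init__(self, n: int = 2) -> None:
        self.n = n
        self.table: Dict[Tuple[str, ...], str] = {}

    def store(self, tokens: List[str], value: str) -> None:
        # Key the entry on the trailing n-gram of the token sequence.
        self.table[tuple(tokens[-self.n:])] = value

    def lookup(self, tokens: List[str]) -> Optional[str]:
        # A single dictionary read; returns None when nothing is stored.
        return self.table.get(tuple(tokens[-self.n:]))


memory = NGramMemory(n=2)
memory.store(["capital", "of", "France"], "Paris")

hit = memory.lookup(["the", "capital", "of", "France"])  # same trailing bigram
miss = memory.lookup(["capital", "of", "Spain"])         # never stored
print(hit, miss)
```

The point of the sketch is only that a stored answer comes back from one table read, while a miss signals that the model would have to fall back on computation.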

According to the paper, an Engram-based model scaled to nearly 27 billion parameters outperformed standard Mixture of Experts (MoE) architectures in long-context training. Traditional MoE models often have to reconstruct the same data through computation every time a query references it, which wastes compute. Engram’s architecture stores such facts externally, which improves efficiency.

The Engram model allows AI systems to simply check, “Do I already have this data?” instead of engaging in extensive reasoning processes for each query. The paper emphasizes that this method minimizes unnecessary computations, freeing up resources for higher-level reasoning tasks.

In a comparative analysis, DeepSeek found that reallocating roughly 20% to 25% of the sparse parameter budget to Engram gave the best balance, with results comparable to pure MoE models. This suggests that how a model splits its budget between memory and computation could be key to designing efficient AI systems.
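To make the 20% to 25% range concrete, the split can be worked through as simple arithmetic. This is a sketch only: the `split_sparse_budget` helper and the 20-billion-parameter sparse budget are assumptions made up for the example, not figures from the paper.

```python
from typing import Tuple


def split_sparse_budget(total_sparse_params: int, engram_fraction: float) -> Tuple[int, int]:
    """Return (engram_params, moe_params) for a given split (hypothetical helper)."""
    if not 0.0 <= engram_fraction <= 1.0:
        raise ValueError("engram_fraction must be between 0 and 1")
    engram_params = round(total_sparse_params * engram_fraction)
    return engram_params, total_sparse_params - engram_params


# Assumed sparse budget, chosen only to keep the numbers round.
TOTAL_SPARSE = 20_000_000_000

low_split = split_sparse_budget(TOTAL_SPARSE, 0.20)
high_split = split_sparse_budget(TOTAL_SPARSE, 0.25)
print(low_split, high_split)
```

Under that assumed budget, the reported range corresponds to moving between 4 billion and 5 billion parameters from the MoE experts into the memory table.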

DeepSeek’s exploration extended to what they termed the “Infinite Memory Regime,” where they maintained a fixed computational budget while attaching a near-infinite number of conditional memory parameters. This led to a linear performance increase with memory size, indicating that as memory expands, performance can improve without necessitating higher computational expenses.
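One way to see why memory can grow while compute stays fixed is that a hashed table read costs the same regardless of table size. The sketch below assumes a hash-indexed table of fixed-width vectors; `slot_index`, `build_table`, `read_memory`, and the choice of CRC32 are illustrative assumptions, not details taken from the paper.

```python
import zlib
from typing import List, Sequence


def slot_index(ngram: Sequence[str], num_slots: int) -> int:
    """Map an n-gram to a slot with a deterministic hash (CRC32 is an assumption)."""
    key = "\x1f".join(ngram).encode("utf-8")
    return zlib.crc32(key) % num_slots


def build_table(num_slots: int, dim: int) -> List[List[float]]:
    """Allocate a table of zero vectors; real entries would be learned."""
    return [[0.0] * dim for _ in range(num_slots)]


def read_memory(table: List[List[float]], ngram: Sequence[str]) -> List[float]:
    # One hash and one index operation, however many slots the table has.
    return table[slot_index(ngram, len(table))]


small = build_table(1_000, 4)
large = build_table(100_000, 4)
ngram = ("of", "France")

vec_small = read_memory(small, ngram)
vec_large = read_memory(large, ngram)
print(len(vec_small), len(vec_large))
```

The larger table holds 100 times as many slots, yet each read still does one hash and one index, which is the property that lets parameter count rise without a matching rise in per-token computation.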

These findings could have substantial implications for the AI industry: reliance on HBM may lessen if models can efficiently use system memory through methods like Engram. A 27B-parameter Engram model outperformed a standard 27B MoE model by 3.4 to 4 points on knowledge-intensive tasks and by 3.7 to 5 points on reasoning tasks. On long-context benchmarks, the Engram model’s accuracy reached 97%, up from the MoE model’s 84.2%.

As DeepSeek prepares to announce a new AI model in the coming weeks, the implementation of Engram may redefine efficiency standards in AI applications. However, the broader market implications could also raise concerns about the existing DRAM supply crisis, as the shift toward system DRAM might exacerbate ongoing shortages. With DeepSeek suggesting that conditional memory functions will be essential for next-generation models, the future direction of AI development could hinge on the successful deployment of these methodologies.

In summary, if Engram delivers as intended in real-world applications, it could signify a pivotal moment for AI technology, moving away from traditional memory constraints and paving the way for more robust and efficient models.
