Kakao has released its latest language model, “Kanana-2,” as open source on Hugging Face. The announcement came on December 19 and follows the company’s earlier releases in its “Kanana” AI lineup, which began last year. With each iteration, Kakao has broadened the lineup, moving from lightweight models to the more capable “Kanana-1.5,” which was designed to tackle complex problems.

According to Kakao, “Kanana-2” improves substantially on performance and efficiency, particularly for agentic applications in which the AI must understand user commands and act on them. The release comprises three variants: Base, Instruct (tuned for instruction following), and Thinking (specialized for reasoning). The reasoning-focused Thinking model is being released for the first time. Because the trained parameters are fully disclosed, developers can fine-tune the models on their own data.

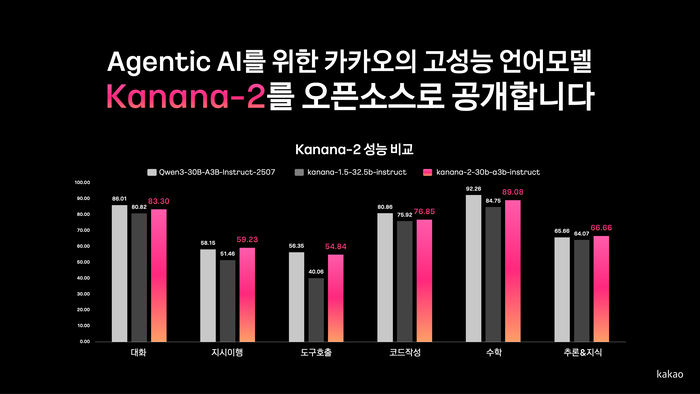

One of the standout features of Kanana-2 is its tool-calling capability, which has reportedly improved more than threefold over its predecessor, Kanana-1.5-32.5b. Tool calling is central to agentic AI, which has to follow complex, multi-step user requirements accurately. Language support has also broadened from Korean and English to six languages, adding Japanese, Chinese, Thai, and Vietnamese.
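
To make the idea of tool calling concrete, here is a minimal sketch of the application side of the loop: the model emits a structured request, and the host program looks up and runs the matching function. The article does not describe the model's actual tool-call format, so the JSON shape, the `get_weather` tool, and `dispatch_tool_call` are all hypothetical names chosen for illustration.

```python
import json


def get_weather(city):
    """Hypothetical tool: returns canned data instead of calling a real API."""
    return {"city": city, "forecast": "sunny"}


# Registry of tools the application exposes to the model (assumed names).
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(raw_call):
    """Parse a model-emitted tool call (assumed JSON shape) and run the tool."""
    call = json.loads(raw_call)
    name = call["name"]
    if name not in TOOLS:
        # Unknown tools are reported back instead of raising, so the
        # calling loop can hand the error to the model for a retry.
        return {"error": f"unknown tool: {name}"}
    return TOOLS[name](**call.get("arguments", {}))


# Stand-in for text a model might produce; not real model output.
model_output = '{"name": "get_weather", "arguments": {"city": "Seoul"}}'
result = dispatch_tool_call(model_output)
print(result)
```

In a multi-step agent, the result would be appended to the conversation and the model asked what to do next, which is why accurate tool selection and argument filling matter at every step.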

On a technical level, Kanana-2 adopts an architecture built for efficiency. It uses “MLA” (Multi-head Latent Attention) to process long inputs efficiently and a “MoE” (Mixture of Experts) structure that activates only the parameters needed for a given input during inference. Together, these let the model handle long contexts with less memory while reducing computational cost and response time. The model can also handle a high volume of simultaneous requests, which makes it more practical to deploy.
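
The idea of activating only the necessary parameters can be illustrated with a toy top-k router. This is a generic sketch of MoE routing under simplifying assumptions, not Kakao's implementation: the experts are trivial scaling functions, and `moe_forward`, `make_expert`, and the router weights are hypothetical.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical expert: a toy function that scales each input value."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, router_weights, experts, top_k=2):
    """Route one token vector x to its top_k experts and mix their outputs."""
    # Router score per expert: dot product of x with that expert's router row.
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    # Indices of the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize gate weights over the chosen experts only.
    gates = softmax([scores[i] for i in chosen])
    output = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](x)  # experts not in `chosen` never run
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


random.seed(0)
dim, num_experts = 4, 8
experts = [make_expert(scale=i + 1) for i in range(num_experts)]
router_weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
token = [0.5, -1.0, 0.25, 2.0]

output, chosen = moe_forward(token, router_weights, experts, top_k=2)
print("experts used:", chosen, "of", num_experts)
```

Here only two of the eight experts run for the token, so per-token compute scales with `top_k` and not with the total number of experts, even though all expert parameters remain available to the router.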

Benchmark tests indicate that the Instruct model performs at a level comparable to leading models in its class, such as “Qwen3-30B-A3B.” The reasoning-specialized Thinking model also performed on par with Qwen3 on tasks that require multi-step reasoning.

Looking ahead, Kakao plans to scale up its models while keeping the same “MoE” structure, with the goal of stronger instruction-following capabilities. The company also intends to develop models tailored to complex agentic scenarios as well as lightweight models for on-device use.

Kim Byung-hak, Kanana performance leader at Kakao, emphasized the importance of performance and efficiency in AI services. “The basis of AI services with innovative technologies and functions is the performance and efficiency of language models,” he stated. He also highlighted Kakao’s commitment to releasing its work as open source to help the AI research ecosystem grow, both in Korea and internationally.
