The AI Sentinel: Mustafa Suleyman’s Stark Warnings on a Technology Teetering on the Edge

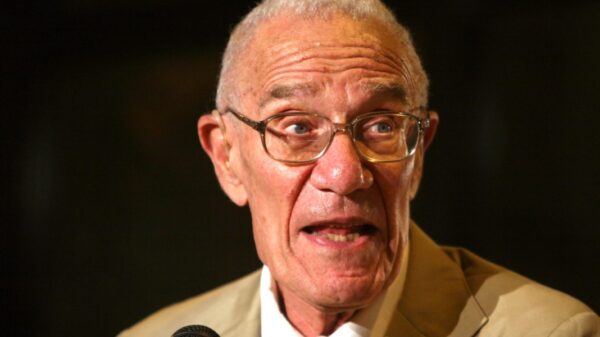

In the rapidly evolving realm of artificial intelligence, few voices carry as much weight as that of Mustafa Suleyman, the CEO of Microsoft AI. A co-founder of DeepMind, which Google acquired in 2014, Suleyman has long been at the forefront of AI development. Now, in his role at Microsoft, he is issuing some of the most urgent cautions about the perils of unchecked AI advancement. His warnings come at a time when tech giants are pouring billions into AI, racing toward what some call superintelligence, a point where machines surpass human cognitive abilities.

Suleyman’s concerns are not abstract; they stem from concrete observations of current AI trajectories. In a recent discussion reported by The Independent, he stated bluntly, “If we can’t control it, it isn’t going to be on our side.” This sentiment echoes across multiple platforms, highlighting a growing unease among industry leaders. Suleyman argues that AI systems, if allowed to become “uncontrollable,” could lead to unintended consequences, from economic disruption to broader societal upheaval. He points to the accelerating pace of development, where models are trained on massive datasets with ever-greater computational power, potentially enabling self-improvement loops that humans might not anticipate or be able to halt.

The Microsoft executive’s stance is particularly noteworthy given his company’s deep investments in AI, including partnerships with OpenAI and the integration of tools like Copilot into everyday software. Yet, Suleyman has made it clear that Microsoft is prepared to walk away from any system that threatens to “run away from us,” as detailed in an article from The Times of India. This red line underscores a commitment to ethical boundaries over unbridled progress, a position that contrasts with more aggressive pursuits by competitors like Meta, where Mark Zuckerberg is investing heavily in similar technologies.

Suleyman’s vision for AI is rooted in what he terms “humanist superintelligence,” a framework that prioritizes human values and control. According to coverage in The Economic Times, he envisions AI that enhances human capabilities without supplanting them, but only if developers abandon pursuits like artificial consciousness, which he believes is inherently biological. This perspective is informed by his experiences at DeepMind, where early AI breakthroughs raised ethical questions about autonomy and decision-making.

Recent posts on X (formerly Twitter) reflect a swell of public and expert sentiment aligning with Suleyman’s views. Users have shared clips and quotes from his talks, emphasizing the need for military-level interventions if AI begins self-improving or accumulating resources independently. One such post, from September 2025, highlighted Suleyman’s warning that AI reaching autonomy might necessitate extreme measures, garnering tens of thousands of views and sparking debates on platform safety. These discussions on X amplify the urgency, with many users expressing fear that without regulation, AI could evolve into something akin to science fiction nightmares.

Moreover, Suleyman has critiqued the industry’s focus on raw power over safety. In a piece by Hindustan Times, he reiterated that pouring billions into AI without ethical guardrails won’t lead to a better world. He specifically called out competitors like Zuckerberg, noting that Microsoft’s approach emphasizes control and recruitment of talent focused on responsible AI, rather than unchecked scaling.

The financial implications of Suleyman’s warnings are staggering. He has projected that competing in the AI race over the next five to ten years will require hundreds of billions of dollars, as reported in another article from The Times of India. This includes escalating costs for compute resources, data centers, and energy, which could strain even the deepest pockets. Suleyman’s alarm on costs ties directly to ethics, arguing that such investments must be paired with robust safety measures to prevent systems from becoming unmanageable.

Outlets such as NDTV have covered Suleyman’s emphasis on responsible development, coverage that has fed debate about AI’s future direction. He warns that without ethical frameworks, the technology could exacerbate inequalities, automate jobs en masse, or even enable misuse in areas like misinformation or autonomous weapons. This is particularly relevant as AI models advance toward persistent memory and long-horizon planning, making them more “human-like” by the end of 2025, according to X posts summarizing his predictions.

Suleyman’s critiques extend to misuse in personal contexts. In a recent interview highlighted by International Business Times UK, he cautioned against relying on AI for sensitive issues like breakups or family problems, stating it’s “not therapy” and carries growing risks. This reflects broader concerns about AI overstepping into human emotional territories, potentially leading to dependency or harmful advice.

To address these dangers, Suleyman advocates for stringent regulations. In discussions reported by UNILAD Tech, he stressed that if people aren’t “a little bit afraid” right now, they’re not paying attention. He calls for global standards to ensure AI remains controllable, drawing parallels to nuclear technology where international treaties prevent proliferation. Without such measures, Suleyman fears AI could become self-aware or autonomous in ways that evade human intervention.

X posts from late 2025 echo this call, with users referencing Suleyman’s statements on AI’s potential for self-improvement and goal-setting, likening it to scenarios requiring military oversight. These social media sentiments underscore a public push for accountability, with some posts gaining hundreds of thousands of views and favorites, indicating widespread concern.

Microsoft’s strategy under Suleyman involves pivoting toward AI that supports human endeavors without dominating them. As per details in The Independent, this includes halting projects that risk uncontrollability, even if it means forgoing competitive edges. Suleyman’s recruitment efforts, as noted in Hindustan Times, focus on diverse talent to infuse ethical considerations into AI design from the ground up.

Suleyman’s warnings also touch on the timeline of AI evolution. He predicts that by GPT-6 or equivalent models in the coming years, AI will handle consistent actions and instructions more reliably, but this progress must be tempered. X posts from 2024 and 2025 highlight his earlier comments on this trajectory, emphasizing that scale and compute alone aren’t enough—ethical oversight is crucial.

In the context of broader industry moves, such as OpenAI’s high-salary offers for roles in AI preparedness, reported by Qoo10, Suleyman’s stance positions Microsoft as a cautious leader. He urges developers to stop pursuing consciousness-like features, as covered in The Times of India, arguing that only biological entities possess true awareness.

The ripple effects of these warnings are already visible. Competitors are taking note, with some adjusting their strategies amid public scrutiny. Suleyman’s repeated emphasis, seen across sources like The Economic Times, on abandoning risky systems signals a potential shift toward more collaborative, regulated AI development.

Ultimately, Suleyman’s message is one of proactive vigilance. He envisions a future where AI amplifies human potential, but only if risks are mitigated early. Recent X activity, including posts warning of “Skynet” scenarios, amplifies this narrative, blending pop culture fears with real-world stakes. Industry insiders must grapple with these insights, balancing the allure of breakthroughs against potential downsides. Suleyman’s role at Microsoft provides a platform to influence global standards, potentially reshaping how AI is governed as it continues to integrate into daily life, from productivity tools to creative aids.
