Recent advances in artificial intelligence (AI) have been highlighted by models built on a mixture-of-experts (MoE) architecture, notably Kimi K2 Thinking from Moonshot AI, DeepSeek-R1 from DeepSeek AI, and Mistral Large 3 from Mistral AI. These models rank among the top 10 most intelligent open-source models available and achieve a 10x performance increase when deployed on NVIDIA’s GB200 NVL72 rack-scale systems. The MoE approach improves efficiency by engaging only the relevant “experts” for each token, which allows faster token generation without a significant increase in computational demands.

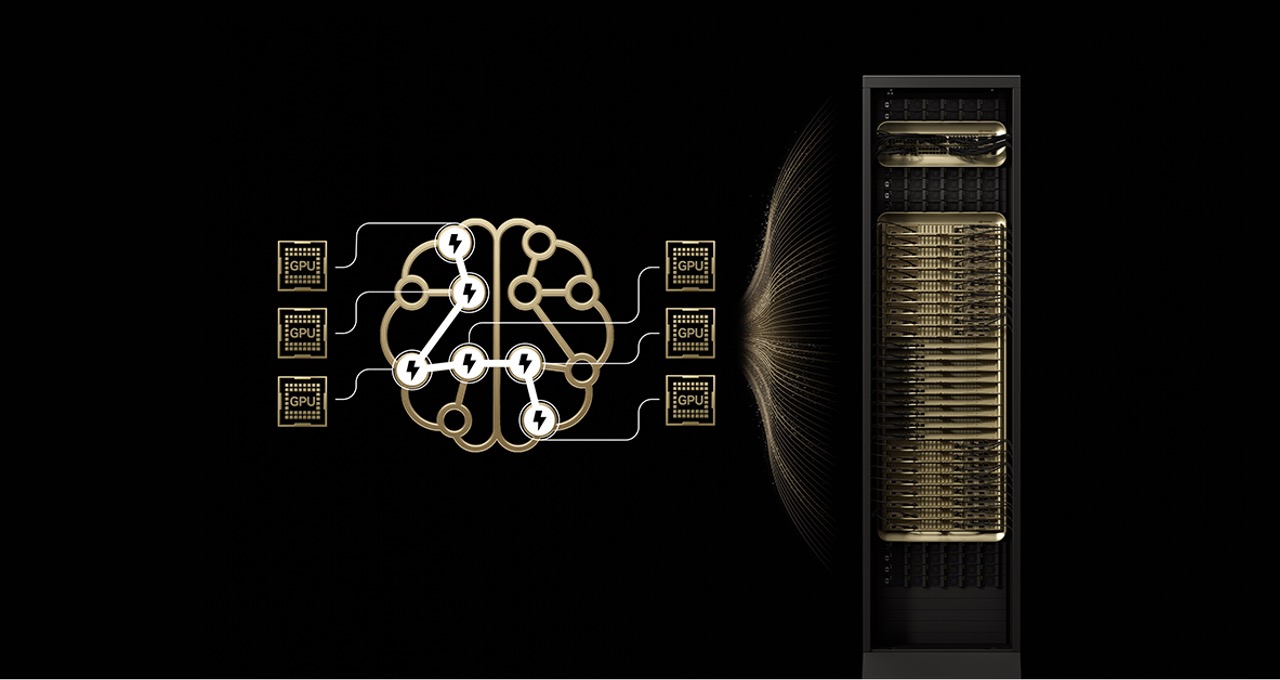

The MoE architecture, which mirrors the brain’s functionality by dividing tasks among specialized “experts,” represents a paradigm shift in AI design. Traditional models typically activate all parameters for every token, whereas MoE models engage only a fraction of their large parameter sets, often tens of billions of parameters, for each token. This strategy has contributed to a nearly 70x increase in model intelligence since early 2023, and over 60% of open-source AI models released this year have adopted the MoE framework. Selective activation not only boosts intelligence but also improves adaptability, yielding a greater return on investment in energy and capital.
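To make the idea of selective activation concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration under stated assumptions, not the routing code of any model named in this article: the toy experts, the router scores, and the function names `route_token` and `moe_layer` are all hypothetical.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits, top_k=2):
    """Return (expert_index, weight) pairs for the top_k experts of one token."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in chosen]

def moe_layer(token, experts, router_logits, top_k=2):
    """Weighted sum of the outputs of only the selected experts."""
    return sum(w * experts[i](token) for i, w in route_token(router_logits, top_k))

# Hypothetical toy setup: 8 "experts" that each just scale their input.
NUM_EXPERTS = 8
experts = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]
# Assumed router scores for a single token (made up for illustration).
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

selected = route_token(logits, top_k=2)
output = moe_layer(1.0, experts, logits, top_k=2)
active_fraction = len(selected) / NUM_EXPERTS

print("selected experts:", [i for i, _ in selected])
print("fraction of experts active:", active_fraction)
```

In this toy setup only two of the eight experts run for the token, so most of the layer's parameters stay idle while the router still chooses which specialists to consult.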

However, scaling MoE models has historically encountered challenges, particularly concerning memory limitations and latency in expert communication. The NVIDIA GB200 NVL72 system addresses these issues through its design, which integrates up to 72 interconnected Blackwell GPUs via NVLink, thereby creating a high-performance interconnect fabric that facilitates rapid data exchange. This setup minimizes the parameter-loading pressure on individual GPUs and allows for enhanced expert parallelism, significantly improving inference times for demanding AI applications.

With a performance capability of 1.4 exaflops and 30TB of shared memory, the GB200 NVL72 is engineered for high efficiency. A crucial feature of this system is the NVLink Switch, which provides 130 TB/s of connectivity, allowing for near-instantaneous information exchange between GPUs. This architecture enables organizations to handle more concurrent users and longer input lengths, thereby enhancing overall performance. Companies like Amazon Web Services, Google Cloud, and Microsoft Azure are already deploying the GB200 NVL72, enabling their clients to leverage these advancements in operational settings.

As noted by Guillaume Lample, cofounder and chief scientist at Mistral AI, “Our pioneering work with OSS mixture-of-experts architecture, starting with Mixtral 8x7B two years ago, ensures advanced intelligence is both accessible and sustainable for a broad range of applications.” This sentiment reflects the growing recognition of MoE models as a viable solution for enhancing AI capabilities while maintaining cost efficiency.

Despite the significant advancements presented by the GB200 NVL72, scaling MoE models remains a complex endeavor. Prior to this system, efforts to distribute experts beyond eight GPUs often faced limitations due to slower networking communication, impeding the advantages of expert parallelism. The latest NVIDIA design, however, alleviates these bottlenecks by decreasing the number of experts each GPU manages, thereby reducing memory load and accelerating communication.
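The effect of spreading experts over more GPUs can be illustrated with simple arithmetic. The sketch below assumes a hypothetical MoE layer with 256 experts divided evenly across the GPUs in an expert-parallel group; the expert count and the helper name `experts_per_gpu` are assumptions chosen for illustration and do not describe a specific model or NVIDIA's scheduling logic.

```python
import math

def experts_per_gpu(num_experts: int, num_gpus: int) -> int:
    """Largest number of experts any one GPU holds under an even split."""
    return math.ceil(num_experts / num_gpus)

# Assumed, illustrative expert count for one MoE layer.
NUM_EXPERTS = 256

for gpus in (8, 72):
    print(f"{gpus} GPUs -> at most {experts_per_gpu(NUM_EXPERTS, gpus)} experts per GPU")
```

Under these assumptions, moving from 8 to 72 GPUs cuts the experts each GPU must hold from 32 to at most 4, which is the reduction in per-GPU memory load the paragraph above describes. The benefit depends on the interconnect being fast enough that routing tokens between GPUs does not cancel the gain.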

The integration of software optimizations, including the NVIDIA Dynamo framework and NVFP4 format, further enhances the performance of MoE models. Open-source inference frameworks such as TensorRT-LLM, SGLang, and vLLM support these optimizations, promoting the adoption and effective deployment of large-scale MoE architectures. As Vipul Ved Prakash, cofounder and CEO of Together AI, stated, “With GB200 NVL72 and Together AI’s custom optimizations, we are exceeding customer expectations for large-scale inference workloads for MoE models like DeepSeek-V3.”

In conclusion, the deployment of the GB200 NVL72 marks a significant milestone in the evolution of AI infrastructure, particularly for models leveraging the MoE architecture. The ongoing advancements in this area not only promise to enhance AI intelligence but also improve efficiency in handling increasingly complex workloads. As the adoption of MoE models continues to rise, the industry may witness a substantial transformation in how AI applications are developed and scaled, paving the way for future innovations.

For further details on these advancements, visit NVIDIA, Amazon Web Services, and Microsoft.
