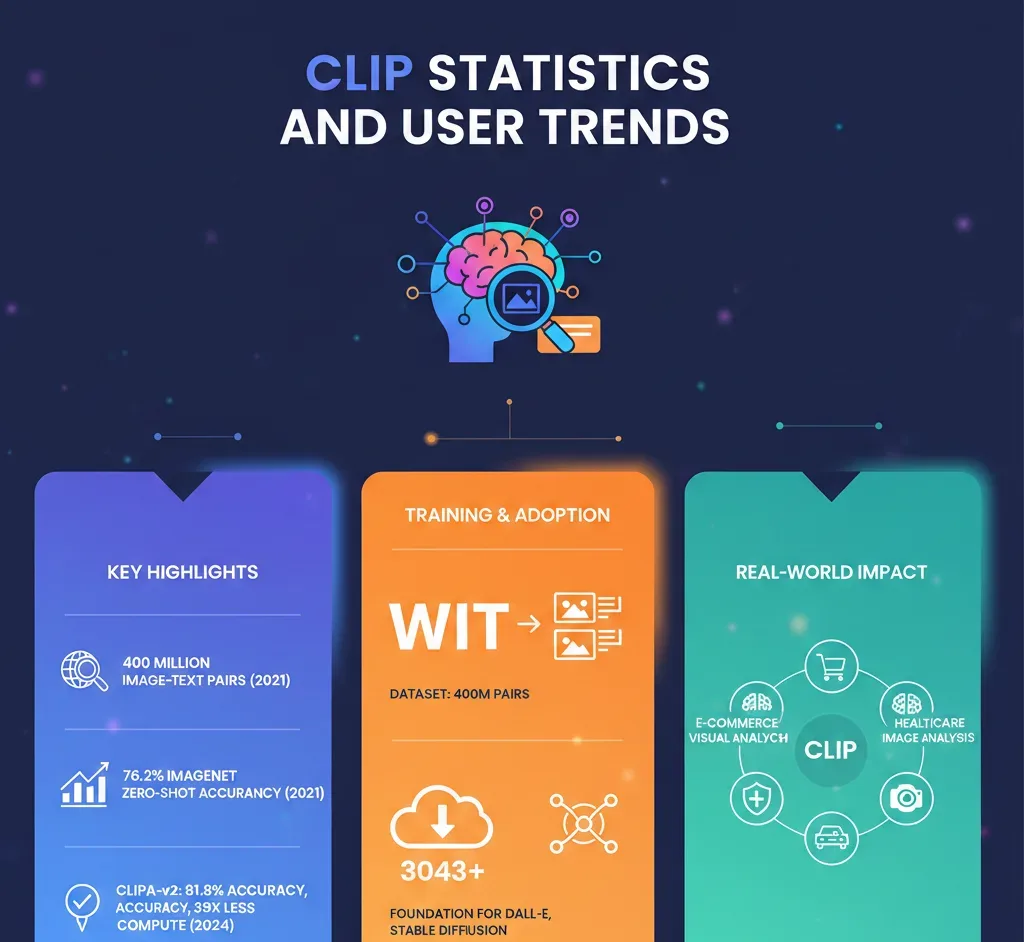

OpenAI’s CLIP model has revolutionized the field of image recognition since its launch in January 2021, achieving a remarkable 76.2% zero-shot accuracy on the ImageNet dataset. This performance is comparable to traditional supervised models that require extensive labeled training data, specifically those trained on over 1.28 million labeled examples. Leveraging a vast dataset of 400 million image-text pairs from the WIT dataset, CLIP significantly reduces the costs associated with manual annotation, paving the way for a new era in machine learning.

As of October 2024, the CLIP ecosystem has expanded dramatically, with over 3,043 CLIP-based models available on the Hugging Face platform, making it the most downloaded category of vision model. This proliferation underscores the adaptability of CLIP across various applications, from healthcare to e-commerce.

The model’s training involved 400 million image-text pairs sourced from publicly available internet content, utilizing a vocabulary of 500,000 unique queries. Unlike traditional datasets like ImageNet, which required manual labeling by a workforce of over 25,000 people, CLIP harnesses naturally occurring image-text relationships, facilitating more efficient training processes.

CLIP’s architecture features a text encoder built on a 12-layer Transformer with 512-dimensional embeddings and eight attention heads. This foundational structure is consistent across the various CLIP model variants. OpenAI has released seven such variants, each offering unique trade-offs between computational efficiency and accuracy. For instance, the CLIP ViT-L/14@336 variant achieved the top score of 76.2% accuracy in zero-shot classification, matching the performance of the ResNet-50 model while requiring less extensive training.

In a significant advancement, the CLIPA-v2 model variant reached an even higher zero-shot accuracy of 81.8% on ImageNet while concurrently reducing computational costs by approximately 39 times. This progress exemplifies the continuous evolution of CLIP’s capabilities and its relevance in contemporary AI applications.

CLIP has demonstrated its versatility through impressive performance across multiple benchmarks and datasets. In specific evaluations, it achieved 94.8% accuracy in CIFAR-10, 77.5% in CIFAR-100, and over 99% accuracy in the Imagenette classification task. Such results highlight its effectiveness in diverse visual recognition tasks, reinforcing its standing as a state-of-the-art model.

The impact of CLIP extends beyond academic research into practical applications across numerous industries. With enterprise AI spending projected to reach $37 billion in 2025—up from $11.5 billion in 2024—the demand for advanced AI solutions is surging. Industries are integrating CLIP technology for various use cases, including visual product searches in e-commerce, medical image analysis in healthcare, and zero-shot detection in content moderation.

The model also plays a crucial role in generative AI systems. Notably, CLIP is foundational to OpenAI’s DALL-E, where it assists in image-text alignment scoring, and it serves as a text encoder for Stability AI’s Stable Diffusion. This versatility showcases CLIP’s broad applicability in driving innovations in AI, particularly in image captioning and visual question answering.

Looking ahead, the future of CLIP appears robust as it continues to evolve. The open-source community has further expanded its capabilities through initiatives like OpenCLIP, which enable the training of larger models on extensive datasets. These developments suggest that CLIP will play an increasingly significant role in the next generation of AI technologies.

See also Survey Reveals AI Knowledge Gaps Among Medical Students at Top Tertiary Care Center

Survey Reveals AI Knowledge Gaps Among Medical Students at Top Tertiary Care Center DeepSeek Unveils mHC Architecture for Enhanced Large-Model Training Efficiency

DeepSeek Unveils mHC Architecture for Enhanced Large-Model Training Efficiency Wall Street Faces Earnings, Fed Cuts, and AI Spending Tests After 2025 Rally; Strategists Warn of Risks

Wall Street Faces Earnings, Fed Cuts, and AI Spending Tests After 2025 Rally; Strategists Warn of Risks Invest in Tomorrow: 5 Must-Buy AI Stocks Set to Soar in 2026

Invest in Tomorrow: 5 Must-Buy AI Stocks Set to Soar in 2026