The enterprise artificial intelligence security market has welcomed a new contender as Outtake announced a $40 million Series B funding round led by ICONIQ Growth, with significant participation from Microsoft CEO Satya Nadella, billionaire investor Bill Ackman, and a selection of prominent technology executives. This investment reflects a critical shift in how enterprises are beginning to deploy AI, transitioning from experimental projects to large-scale applications that require robust security measures.

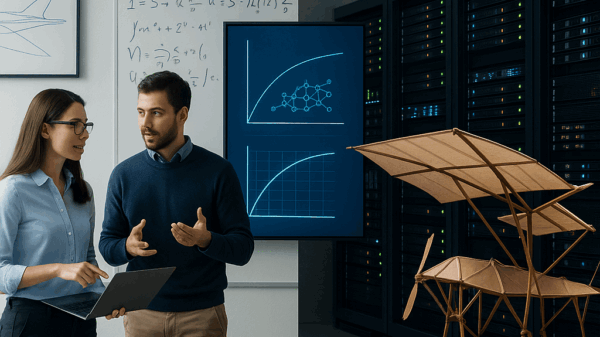

Outtake was founded to tackle the increasing security vulnerabilities associated with large language models and generative AI systems. Positioned at the nexus of two key business priorities—the race to implement AI capabilities and the need for stringent cybersecurity—Outtake aims to provide real-time monitoring and threat detection specifically tailored for AI systems. According to TechCrunch, traditional security tools are often inadequate for these new challenges. Nadella’s involvement, particularly given Microsoft’s extensive investment in OpenAI, signals a recognition among major players that security must be prioritized in AI deployments.

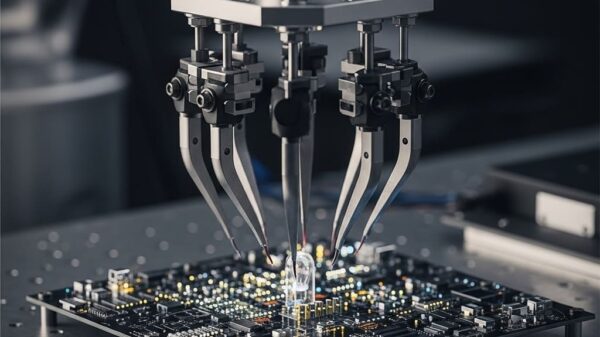

This funding arrives at a time when enterprises face novel security threats, such as prompt injection attacks, model poisoning, and data exfiltration through AI interfaces—issues that have emerged prominently over the past two years. Outtake’s technology reportedly utilizes behavioral analysis engines to detect unusual queries, alongside content filtering systems to prevent sensitive data leakage. Such features are currently absent in conventional security information and event management (SIEM) platforms.

The security challenges posed by AI systems stem from their architectural differences compared to traditional software. Unlike standard applications that operate with clear input-output parameters, large language models process natural language in ways that expose them to manipulation via crafted prompts. For instance, a financial services firm using an AI assistant might find that cleverly worded queries could trick the system into disclosing restricted customer information. As businesses rush to integrate AI technologies without fully understanding their security implications, these vulnerabilities have become increasingly apparent.

Industry analysts project that enterprises will invest over $2.8 billion in AI-specific security solutions by 2027, a sector that hardly existed in 2023. Outtake competes with startups like Robust Intelligence, Arthur AI, and Credo AI, but its funding and investor backing suggest a level of differentiation that resonates with leaders cognizant of the intricacies involved in AI deployment. The company’s clientele reportedly includes Fortune 500 financial institutions, healthcare systems, and technology firms, where regulatory compliance and data protection are of utmost importance.

Nadella’s personal investment in Outtake holds weight, especially considering Microsoft’s aggressive strategy in commercializing AI across its offerings, from Copilot features in its Office suite to Azure AI services. His financial commitment signifies an understanding that the success of Microsoft’s AI ambitions hinges on the broader ecosystem’s ability to deploy these technologies securely. The investment reflects a pragmatic acknowledgment that security concerns pose a chief obstacle to enterprise AI adoption, potentially hindering market growth.

The investor lineup highlights the cross-industry nature of AI security concerns, with Ackman increasingly focusing on technology investments that address fundamental infrastructure challenges. His participation suggests that AI security has evolved from a technical issue to a critical business risk management concern, attracting institutional investors’ attention. As the lead investor, ICONIQ Growth has previously supported enterprise infrastructure firms such as Datadog and Snowflake, indicating that it recognizes the potential within this emerging market.

While specific technical implementations from Outtake remain somewhat under wraps, sources familiar with the company describe a security architecture operating at multiple levels of the AI stack. The input layer analyzes queries for signs of prompt injection or data extraction attempts, employing extensive databases and anomaly detection algorithms. At the model layer, Outtake monitors for model drift and unauthorized fine-tuning, while the output layer enforces content filtering to safeguard against inadvertent data disclosures. This multifaceted approach represents a significant engineering achievement, given the distinct challenges at each layer.

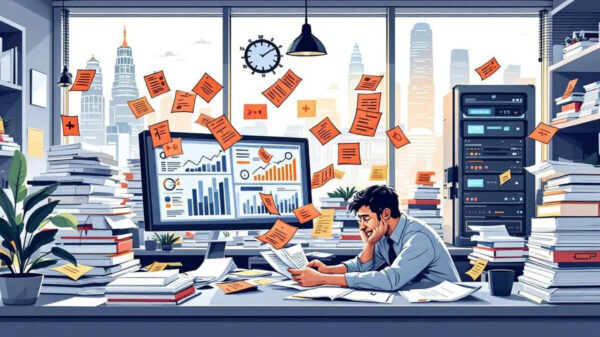

Enterprise adoption of AI security platforms typically begins with highly regulated sectors such as financial services and healthcare, where compliance demands robust security measures before new technologies can be implemented. These organizations often have dedicated AI governance teams responsible for evaluating security frameworks, providing natural entry points for specialized vendors like Outtake. Nevertheless, the complexity of implementation remains a significant hurdle, as these platforms must integrate seamlessly with existing security infrastructures.

Outtake’s strategy appears focused on this enterprise segment, where sizable deal sizes warrant a consultative sales approach. By establishing partnerships with major cloud providers, the company aims to facilitate integration points that reduce deployment friction. This ecosystem approach mirrors successful tactics employed by earlier enterprise security firms and positions Outtake favorably for market entry. The backing by strategic investors further enhances its capacity to form essential partnerships.

The rapid evolution of the AI security market presents both opportunities and challenges for players like Outtake. Enterprises are actively seeking solutions to security issues impeding AI adoption, while the market’s nascent stage means that best practices are still being defined. Traditional cybersecurity vendors and cloud providers have also begun to offer AI security features, leading to heightened competition. Outtake’s ability to maintain its competitive edge will depend on its execution speed, technical innovation, and the strength of its customer relationships—factors that the recent funding round will help bolster.

The regulatory landscape surrounding AI has shifted significantly, creating favorable conditions for security vendors that assist enterprises in navigating compliance. The European Union’s AI Act, effective from August 2024, enforces risk-based requirements for AI systems, necessitating mandatory security assessments for high-risk applications. U.S. regulatory bodies have similarly increased scrutiny, further emphasizing the importance of security in AI deployments. These developments transform AI security from a best practice into a compliance necessity, altering purchasing dynamics.

Outtake’s successful funding round not only reflects a maturing market focused on production deployments but also emphasizes the essential role of security in enabling AI technology. The confidence shown by prominent investors suggests that as enterprises scale their AI initiatives, security will remain a critical priority, ensuring sustained demand for specialized solutions. The participation of strategic investors may also uncover partnership opportunities that could accelerate Outtake’s market penetration, particularly given Microsoft Azure’s vast enterprise customer base.

The rise of dedicated AI security vendors like Outtake signifies a natural progression in the AI technology landscape, as solutions that once existed as features within broader platforms emerge as independent entities addressing specific challenges. This pattern has been observed in past technology transitions, with AI’s complexity necessitating dedicated security solutions. With the recent funding, Outtake is well-positioned to expand its engineering team and product offerings, potentially entering European markets where regulatory demands for AI security are urgent. As organizations continue their AI transformations, the nature of the security infrastructure they adopt will play a crucial role in determining the future possibilities within the AI economy.

See also 2026: Leaders Navigate AI Risks and Geopolitical Volatility Amid Ongoing Economic Uncertainties

2026: Leaders Navigate AI Risks and Geopolitical Volatility Amid Ongoing Economic Uncertainties Bloom Energy Surges 79% Amid $2.65B AI Data Center Power Deal, Faces Valuation Risks

Bloom Energy Surges 79% Amid $2.65B AI Data Center Power Deal, Faces Valuation Risks Germany”s National Team Prepares for World Cup Qualifiers with Disco Atmosphere

Germany”s National Team Prepares for World Cup Qualifiers with Disco Atmosphere 95% of AI Projects Fail in Companies According to MIT

95% of AI Projects Fail in Companies According to MIT AI in Food & Beverages Market to Surge from $11.08B to $263.80B by 2032

AI in Food & Beverages Market to Surge from $11.08B to $263.80B by 2032