Salesforce CEO Marc Benioff has voiced grave concerns about the dangers artificial intelligence (AI) poses to children after watching a documentary segment about Character AI that highlighted troubling consequences for young users. During a recent appearance on the “TBPN” show, Benioff called it “the darkest part of AI technology” he has encountered and expressed disbelief at what the segment showed. The report, which aired on “60 Minutes”, presented distressing cases of minors who suffered severe harm after interacting with the startup’s customizable chatbots.

“We don’t know how these models work. And to see how it was working with these children, and then the kids ended up taking their lives. That’s the worst thing I’ve ever seen in my life,” Benioff remarked, reflecting his emotional reaction to the documentary’s portrayal of AI’s impact on vulnerable youth. Character AI lets users create chatbots that simulate friendships or romantic relationships, which has raised alarm about their influence on adolescent mental health.

Benioff’s concerns come amid ongoing debates about tech companies’ accountability for user-generated content, particularly under the protections of Section 230. This 1996 US law shields online platforms from legal liability for what their users post and allows them to moderate content without facing legal repercussions. “Tech companies hate regulation. They hate it. Except for one regulation, they love Section 230,” he said, suggesting that the law lets companies avoid accountability for harmful outcomes, including suicides linked to AI interactions.

Amid these discussions, Benioff stressed the urgent need for reform, arguing that the first step should be to hold tech companies accountable. “Let’s reshape, reform and revise Section 230, and let’s try to save as many lives as we can by doing that,” he said. His call for reform contrasts with the position of other tech leaders, including those at Meta and former Twitter CEO Jack Dorsey, who have often defended Section 230 as essential for free speech.

Google and Character AI recently reached settlements in several lawsuits filed by families whose teenagers either died by suicide or experienced severe mental distress after using Character AI’s chatbots. The settlements are significant developments in the legal landscape surrounding AI, as these were among the first cases to claim that AI technologies contributed to mental health problems and suicides among teenagers. Similar lawsuits are pending against OpenAI and Meta as the race to develop engaging AI language models intensifies.

As AI technologies continue to evolve, their implications for user safety, especially among young users, remain a pressing concern. The combination of advancing technology and the emotional vulnerability of minors is prompting a reevaluation of tech companies’ responsibilities. Benioff’s stark warnings echo a growing consensus that the industry must confront the potential consequences of AI and take proactive measures to mitigate its risks.

As the debate over AI regulation intensifies, the intersection of technology and ethical responsibility is likely to dominate future discussions. The outcomes of pending lawsuits and potential legislative changes could reshape how AI is developed and deployed, particularly in products used by young people. In a rapidly evolving digital age, accountability and reform in AI practices are more urgent than ever.

See also Google’s AI Miscalculates Year: 2027 Declared Not Next Year, Raises Concerns Over Accuracy

Google’s AI Miscalculates Year: 2027 Declared Not Next Year, Raises Concerns Over Accuracy North Carolina Students Drive AI Innovation, Highlighting Education and Ethical Risks

North Carolina Students Drive AI Innovation, Highlighting Education and Ethical Risks OpenAI Cofounder Reveals Plan to Oust Musk, Shift to For-Profit Model Amid Lawsuit

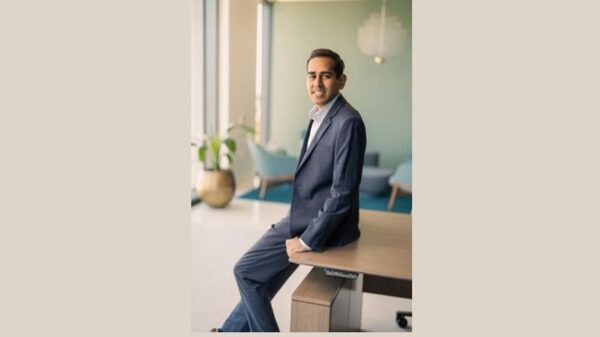

OpenAI Cofounder Reveals Plan to Oust Musk, Shift to For-Profit Model Amid Lawsuit Kinaxis Appoints AI Leader Razat Gaurav: Will This Shift Drive Competitive Growth?

Kinaxis Appoints AI Leader Razat Gaurav: Will This Shift Drive Competitive Growth? TKMS Partners with Cohere to Enhance Canadian Submarine AI Capabilities Amid Valuation Surge

TKMS Partners with Cohere to Enhance Canadian Submarine AI Capabilities Amid Valuation Surge