At this year’s CES, a significant shift in automotive technology was evident: the most critical “horsepower” now comes from silicon rather than steel. Automakers and chipmakers increasingly treat vehicles as rolling AI computers, prioritizing autonomy, advanced copilots, and safety intelligence over traditional mechanical engineering. AI is no longer a supplementary feature; it has become integral to modern driving.

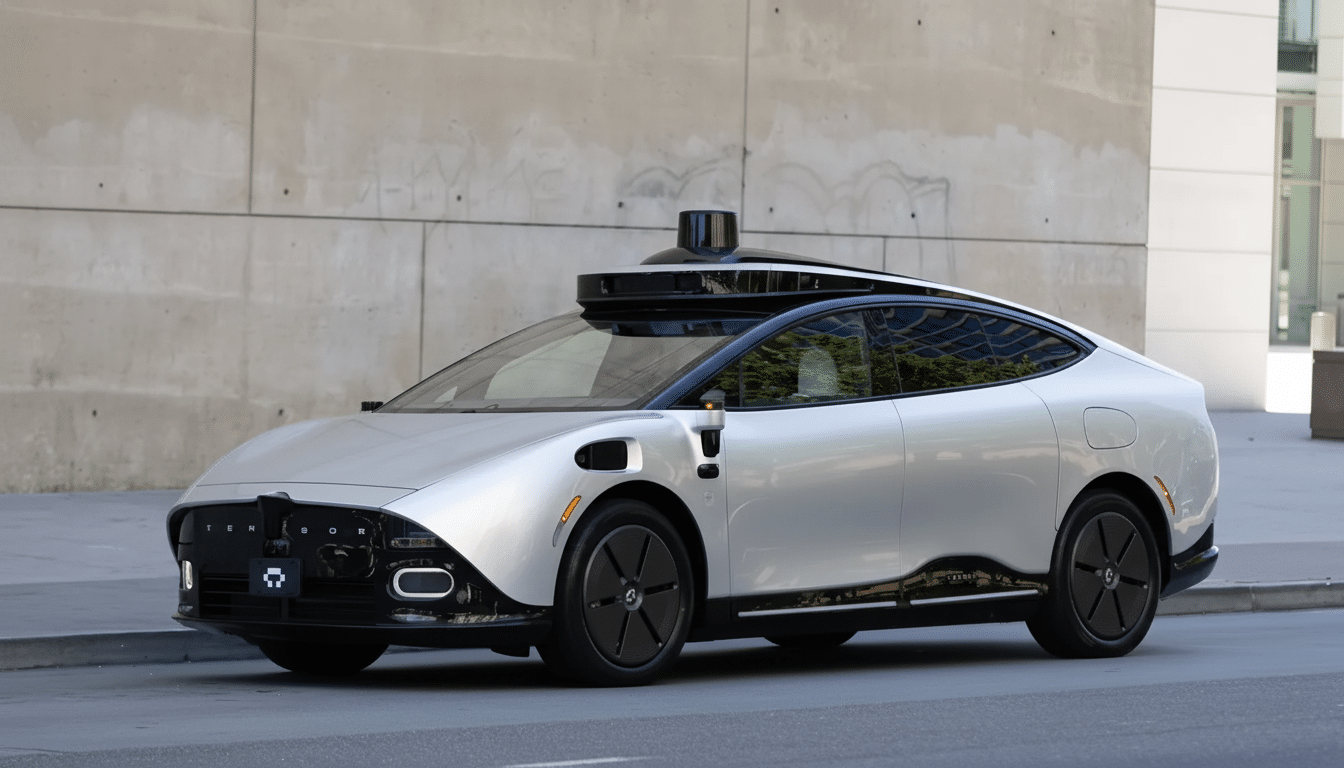

The focus on self-driving technology at the show highlighted two prevailing themes: the growing cognitive capabilities of vehicles and their readiness for autonomous operation. While less glamorous than raw performance, the underlying technologies, such as sensor fusion, predictive models, and on-vehicle computing, are essential for reliable autonomy. One standout, Tensor Auto, showcased its Robocar, an electric vehicle designed for high levels of automation. The vehicle is pitched as a social robot: it can hold conversations, plan context-aware routes, and learn its owner’s schedule, all while targeting SAE Level 4 autonomy within designated operating areas. This production-intent concept illustrates how autonomous technology is moving from hype toward tangible capabilities.

Similarly, Sony Honda Mobility’s AFEELA 1, a production sedan priced at $89,900, emphasizes computational intelligence and in-cabin technology. Its “interior-as-platform” approach integrates cameras, sensors, and GPUs, signaling a shift in how vehicles are designed and how consumers experience them.

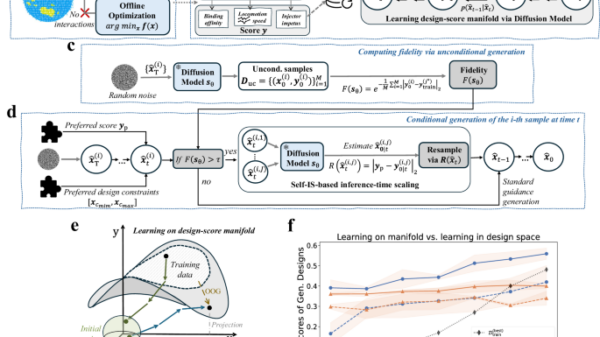

Chipmakers are framing cars as complex robotics systems. Leading this push, Nvidia promoted “physical AI,” in which powerful domain controllers run real-time perception-and-action loops. In autonomous driving research, this paradigm shift builds on Nvidia’s Alpamayo reasoning model, moving away from rigid, rules-based code toward adaptive learning systems that can handle everything from inferring driver intentions to navigating novel driving scenarios. Such systems are computationally demanding: today’s electric vehicles rely on an array of sensors and high-performance processors to manage vision, mapping, driver monitoring, and cabin AI.

If autonomy serves as the brain of modern vehicles, voice assistants are becoming their face. BMW previewed an Alexa-based assistant set to debut with its first Neue Klasse model, the iX3. The assistant goes beyond basic commands, engaging in human-like dialogue and surfacing contextual information such as current events and charging status. Meanwhile, Ford is developing an AI assistant that launches first on smartphones and will later extend into its vehicles for a seamless user experience. Tensor Auto has taken the concept further by combining cabin signals, vehicle sensors, and user preferences into a long-term memory profile that personalizes interactions.

These advances matter because they influence consumer purchasing decisions. Surveys from firms such as McKinsey and J.D. Power indicate that customers are willing to switch brands for superior software and connectivity. When assistants reliably understand user intent and handle multi-part requests, they keep drivers engaged with the vehicle itself and reduce reliance on smartphones.

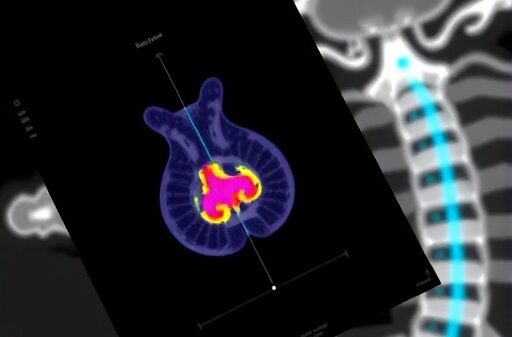

On the safety front, AI is not just a convenience but a critical factor in accident prevention. The Insurance Institute for Highway Safety reports that automatic emergency braking can cut rear-end collisions roughly in half. In addition, new Euro NCAP protocols emphasize features such as junction automatic emergency braking and vulnerable road user detection, both of which depend heavily on robust perception and inference. Regulators such as NHTSA are also increasing their scrutiny of driver monitoring and human factors in partial automation, suggesting that standardized rules for these technologies are on the horizon.

Not every CES announcement centered on cutting-edge AI; companies such as Xiaomi and Volvo emphasized practical improvements. Xiaomi updated its SU7 electric sedan with enhanced safety features, while Volvo’s EX60 pairs solid real-world range with the brand’s renowned safety suite. Both models suggest that steady, incremental improvements built on AI foundations are likely to keep consumers engaged.

The automotive landscape is shifting to a software-first paradigm that reshapes the relationship between drivers and their vehicles. Over-the-air updates promise to expand functionality after purchase, but how manufacturers handle pricing models and data governance will be crucial to maintaining consumer trust. Drivers will increasingly demand transparency about what data is processed locally versus in the cloud, how their preferences are managed, and how automotive features are tested.

The implications of this shift are clear: effective voice assistants can ease driver workload, advanced driver-assistance systems can prevent accidents, and full autonomy can relieve drivers of monotonous tasks. The take-home message from CES is straightforward: the future of automotive design lies in putting intelligence first, and manufacturers that fail to do so risk being left in the rearview mirror.
