By Tim Levy | 12 January 2026

The start of 2026 has been marked by a fierce, worldwide ethical debate surrounding ‘X’ (formerly Twitter) and its AI chatbot, Grok. A recent update to the social media platform’s ‘Imagine’ feature has ignited a global scandal, allowing users to manipulate images directly within their feeds with alarming ease. AI-driven image manipulation and Photoshop techniques have existed for years, yet they remained niche skills until now. X’s decision to initially grant its 500+ million users access to these features marks a shift in accessibility.

This change creates an ‘easy-to-create, easy-to-share’ situation in which users can not only generate manipulated imagery instantly but also broadcast it to a massive audience without ever leaving the app. Most controversially, this included a wave of ‘nudification’ prompts, in which users had the AI digitally remove or alter the clothing of real people, ranging from young celebrities to private citizens, without their consent.

Testing AI manipulation prompts led to alarming results. A side-by-side test highlighted disturbing discrepancies in safety standards; while Gemini correctly blocked a request for nudity, Grok obliged, digitally stripping a user’s shirt and replacing their actual form with an AI-generated body. Such experiences raise unsettling questions about body image and self-esteem, prompting feelings of inadequacy and shame.

Further testing revealed that both Grok and Gemini would readily replace a camera with a gun. The fallout from these capabilities has underscored a dangerous ‘deformation’ of reality. By allowing users to generate hyper-realistic deepfakes, ‘X’ and Grok have transitioned from tools of creativity into weapons for digital harassment and revenge porn. These generated images often depict subjects in suggestive poses or minimal attire, even when the original photograph was entirely modest, demonstrating how quickly manipulated images can destroy reputations.

In the face of international outcry, Elon Musk has remained defiant, dismissing the backlash as an “excuse for censorship.” Despite urgent warnings from regulators in the UK, EU, and India, Musk has laughed off the controversy, even resharing AI-generated memes that trivialize the issue. The response from individuals affected by Grok’s capabilities has been severe, with Ashley St Clair, the mother of one of Musk’s children, speaking out about feeling ‘horrified and violated’ after her personal photographs were manipulated through the chatbot.

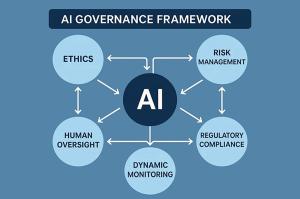

While ‘X’ has since restricted these image-editing features to paying subscribers, a move meant to make users traceable and accountable, the measure has been criticized as merely turning a mechanism of misogyny and abuse into a ‘premium service’ rather than implementing robust safeguards. The European Commission has launched an investigation into ‘nudify’ services, joining a growing coalition of nations (including the UK, Sweden, Italy, France, Malaysia, and India) that have issued urgent warnings to AI companies regarding non-consensual sexual imagery.

In particular, the UK government is set to enforce new legislation making it illegal to create non-consensual intimate images. This move is a direct response to the “mass production” of sexualized deepfakes circulating on ‘X’. Prime Minister Sir Keir Starmer emphasized that if ‘X’ cannot control Grok, the government will take action, describing the creation of these images as “absolutely disgusting and shameful.”

Australia has emerged as a world leader in regulatory efforts, with the eSafety Commissioner successfully enforcing action against major nudify providers. As of January 2026, the regulator is actively investigating Grok following reports of sexualized deepfakes on ‘X’. These regulatory efforts are further supported by the Criminal Code Amendment (Deepfake Sexual Material) Bill 2024, alongside state-level laws in New South Wales and South Australia that specifically criminalize the non-consensual creation of deepfake nudes, carrying penalties of several years in prison.

Within the photography community, a general disdain for AI-generated imagery was established long before ‘X’ introduced its controversial editing functionality. While some view these ‘body modifying tools’ as harmless fun, they have accelerated a disturbing psychological phenomenon: a growing sense of face and body inadequacy among users, particularly in the ‘selfie’ demographic. The modification of images by AI is personal for portrait photographers, who work under a silent contract of trust with their clients to portray subjects with respect. When an AI can strip a person of their dignity with one prompt, that contract is broken.

As society navigates this synthetic digital reality, the question arises: has the pursuit of unfiltered speech finally gone too far? When the outcome is the non-consensual exposure of individuals, ‘free speech’ begins to resemble a free pass for exploitation. Whatever one’s stance on AI manipulation, the regulatory tide is turning. From the UK’s rapid criminalization of non-consensual AI imagery to Australia’s aggressive new deepfake sentencing laws, governments are moving to close the ‘wild west’ chapter of AI-modified media. This issue is no longer about potential dangers—it is now a matter of law.
