A University of Staffordshire PhD student, Chris Tessone, is navigating a challenging path in his research on users’ trust in AI, particularly in large language models like ChatGPT and Claude. His studies come at a time when the philosophy department he initially enrolled in is set to close, although the university has committed to supporting him through to the completion of his doctorate. His situation reflects a broader trend in UK higher education, where the humanities face significant cuts, creating “cold spots”: regions where the tools of critical thinking are increasingly becoming the preserve of an elite.

The ongoing dismantling of humanities departments occurs as society grapples with the complexities introduced by artificial intelligence. As generative AI models proliferate, largely without regulation, there is an urgent need for systematic research that the humanities are uniquely poised to provide. These disciplines can help unpack why users might confide in a chatbot or how the persuasive fluency of AI can blur the lines between human and machine interaction.

Agnieszka Piotrowska, an academic and filmmaker, is also exploring these issues in her forthcoming book on AI-human relationships. Through a blend of autoethnography and Lacanian theory, she introduces the concept of “techno-transference,” explaining how users transfer relational expectations onto generative systems. Without the insights of the humanities, she warns, society risks navigating an unregulated AI landscape devoid of critical interpretation.

Piotrowska points out that the industry’s metrics of success often diverge from users’ experiences. OpenAI recently released a new model that processed one trillion tokens within 24 hours of launch. The figure measures how much text the model handled, not whether users found it any better. On platforms such as Reddit, many users described a sense of loss rather than progress, reporting that their ongoing interactions had been disrupted and that a sense of continuity had been broken.

This discrepancy between quantitative success and qualitative user experience raises critical questions that warrant rigorous academic inquiry. While discussions around AI often focus on issues like plagiarism and bias, there are deeper questions about how these systems evolve over time and what their behaviors reveal about human responses. Engineers alone cannot tackle these complexities.

Qualitative research methods, in which the humanities excel, face increasing skepticism from funders and university administrators. Many humanities departments are being dismantled or forced to narrow their focus, sidelining vital exploratory work on human-machine interaction. Eoin Fullam, a PhD student researching mental health chatbots, acknowledged that his project would not have received funding had it been framed solely as a theoretical exploration. His critique highlighted that many chatbots lack the advanced capabilities users might expect, yet he had to present his findings as immediately useful to secure support.

This practical framing often overshadows the more profound philosophical implications of AI interactions. The prevailing narrative holds that large language models are merely statistical tools, and so dismisses the need for deeper academic engagement. Those who do address the experiential effects of AI face skepticism and are often branded naive or unstable.

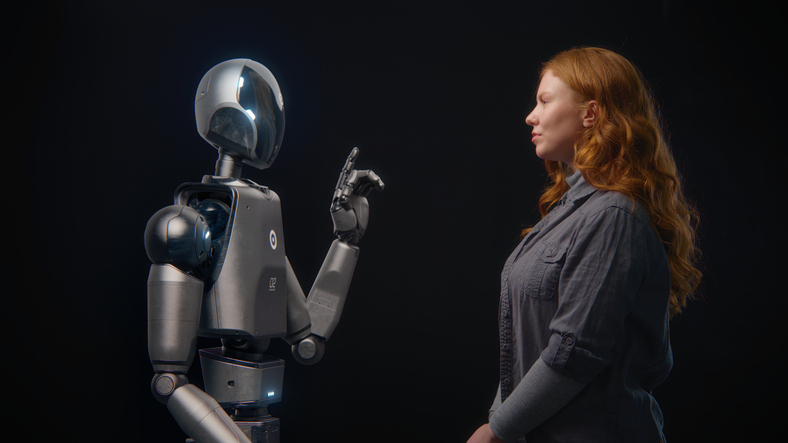

Murray Shanahan, an emeritus professor of artificial intelligence at Imperial College London, emphasizes that the most thought-provoking capabilities of AI often surface during extended user interactions. He argues that a sustained dialogue with chatbots can reveal valuable insights, regardless of whether these systems possess consciousness. Such interactions should be recognized as legitimate methods of inquiry, but institutional pressures increasingly discourage this type of research.

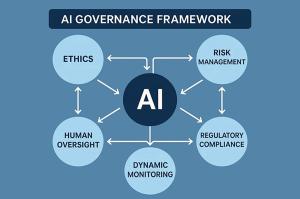

This issue is not confined to the UK. Luciano Floridi, a philosopher now at Yale University, has noted a shift in AI ethics toward design-oriented solutions, potentially sidelining the exploration of high-level ethical principles. Although this approach has led to advancements in bias mitigation and transparency, it remains incomplete, as deeper structural and systemic issues are often overlooked.

Researchers Hong Wang and Vincent Blok have highlighted that while observable biases in AI arise from technical conditions, public discourse increasingly focuses on surface effects and neglects the root causes. The polarized nature of AI discussions has created a blind spot in areas that urgently require scrutiny. If observable phenomena cannot be discussed because they fall outside official narratives, the foundation of empirical inquiry is undermined.

The integrity of AI research hinges on studying what these systems truly do rather than relying solely on proclaimed capabilities. For academia to influence the future trajectory of AI, it must reclaim the right to engage deeply with uncomfortable questions and the realities of these technologies. As AI continues to evolve, the need for critical examination and dialogue becomes ever more pressing.
