A series of artificial intelligence (AI)-related cheating scandals at South Korean universities may pose long-term risks for their global rankings and reputations. As institutions grapple with the implications of rapid AI advancement, many have yet to implement robust measures addressing these challenges.

QS, a global higher education analytics firm known for its university rankings, highlighted that controversies surrounding AI-related academic misconduct could indirectly influence how universities are scored in their rankings. According to QS Communications Director Simona Bizzozero, while such incidents are not directly assessed, they may affect academic and employer reputation scores, which are critical components of global standings.

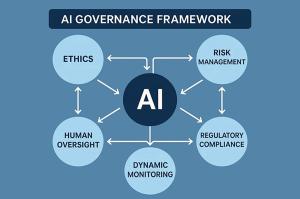

“History shows that sustained reputational damage from governance failures to academic misconduct can, over time, shape how institutions are viewed by global academic and employer communities,” Bizzozero stated. The firm emphasized that universities’ ability to manage AI responsibly is becoming increasingly crucial in higher education assessments.

As generative AI technologies proliferate, the integrity of academic processes is drawing closer scrutiny. Bizzozero noted that the debate spans a range of issues, including assessment design and governance, reflecting a broader concern for academic integrity.

Despite the growing criticism following several AI-related misconduct incidents, South Korean universities have been slow to adapt, often only taking remedial actions after the fact. For instance, Yonsei University has faced multiple instances of technology-assisted cheating since introducing its AI ethics guidelines. Most recently, a group of 34 students from its College of Dentistry submitted altered clinical training photographs, raising alarms about accountability in practical training settings.

Moreover, in November 2022, around 194 of approximately 600 students enrolled in a fully online course on natural language processing were found to have used AI to cheat on a midterm exam. While Yonsei’s guidelines recommend that faculty members outline AI policies in course syllabi, the university admitted that these recommendations lack enforceability. “The guidelines function more as recommended practices than enforceable rules,” a university official commented.

Yonsei University revealed plans to revise its AI guidelines before the upcoming semester, although the timeline for these updates remains uncertain. When asked about QS’s remarks regarding the reputational implications of academic misconduct, the university declined to provide further comment.

Similarly, Seoul National University has identified cases of AI misconduct since October 2022. Incidents were reported during midterm and final exams across various courses, even though the university had implemented oversight measures, including a system to monitor off-screen activity during assessments. In response, the university announced new AI guidelines that permit the use of AI tools while placing the onus for responsible use on students.

Under this framework, instructors are empowered to determine their own policies regarding AI use in their courses, with penalties established for students who violate these guidelines. However, the university was unavailable for comment regarding QS’s assertion that such misconduct could affect its reputation.

The prevalence of AI-related cheating incidents in South Korean higher education underscores a wider issue regarding the integration of AI in academic environments. As universities strive to maintain their standings in global rankings, the need for effective governance and ethical standards around AI usage is becoming increasingly urgent. The reputational implications of failing to address these challenges could resonate beyond individual institutions, potentially shaping the future landscape of higher education in the region.

The evolving discourse on AI in academia shows that universities must not only adapt to technological advances but also proactively safeguard their integrity and reputation in an increasingly competitive global environment.
